I have an S3 bucket with paths of the form {productId}/{store}/description.txt. Here's what the bucket might look like at the top level:

ABC123/Store1/description.txt

ABC123/Store2/description.txt

ABC123/Store3/description.txt

DEF123/Store1/description.txt

DEF123/Store2/description.txt

If I had to read all the files pertaining to a certain product ID (for example, ABC123), do I have to navigate into ABC123, list all the folders, iterate over each store, and download each file separately? Or is there a way I can do this with a single API call?

PS: I need to do this programmatically

CodePudding user response:

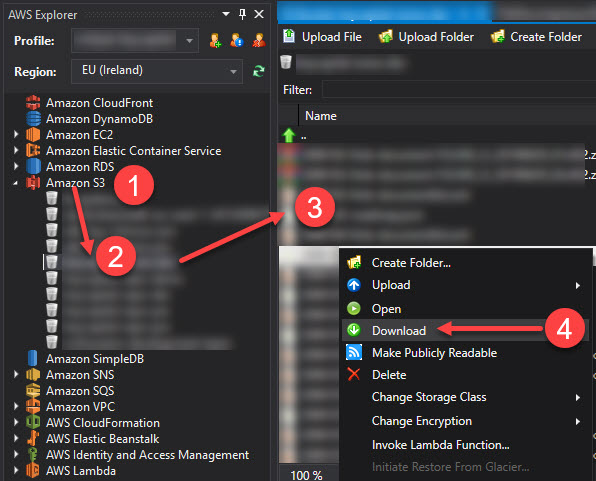

The S3 service has no meaningful limit on simultaneous downloads (several hundred at a time are easily possible), and there is no policy setting related to this. The S3 console, however, only lets you select one file to download at a time.

Once the download starts, you can start another and another, as many as your browser will let you attempt simultaneously.
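For the programmatic case in the question, here is a minimal sketch, assuming Python with boto3 (the question does not name a language or SDK, so that choice is mine). As far as I know there is no single S3 call that returns the contents of several objects, but ListObjectsV2 with a Prefix returns every key under a product ID without walking the store "folders" one by one, and the objects can then be fetched concurrently. The function names `list_keys` and `read_all` and the `max_workers` default are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def list_keys(s3_client, bucket, prefix):
    """Return every object key under `prefix`, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent from a page when nothing matches the prefix.
        for obj in page.get("Contents", []):
            keys.append(obj["Key"])
    return keys


def read_all(s3_client, bucket, prefix, max_workers=16):
    """Fetch every object under `prefix` concurrently; returns {key: bytes}."""

    def fetch(key):
        # One GET request per object; S3 has no multi-object GET.
        response = s3_client.get_object(Bucket=bucket, Key=key)
        return key, response["Body"].read()

    keys = list_keys(s3_client, bucket, prefix)
    if not keys:
        return {}
    # max_workers=16 is an arbitrary assumption; tune it for your workload.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(fetch, keys))
```

With boto3 installed and credentials configured, this could be called as `read_all(boto3.client("s3"), "my-bucket", "ABC123/")`, where `my-bucket` is a placeholder bucket name. Keeping the trailing slash in the prefix avoids also matching a product ID such as ABC1234.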

In case someone is still looking for an S3 browser and downloader: I have just tried FileZilla Pro (the paid version), and it worked great.

I created a connection to S3 using an access key and secret key set up via IAM. The connection was instant, and downloading all the folders and files was fast.