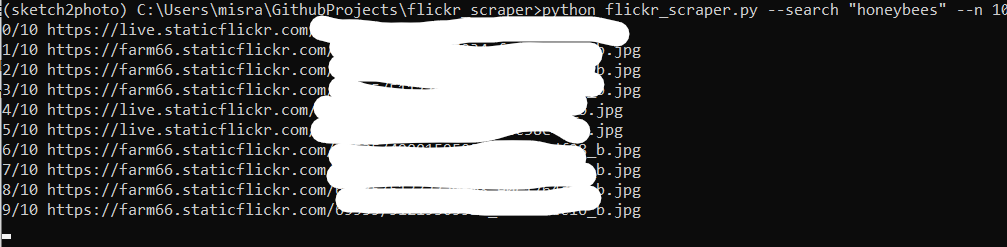

I am trying to scrape images from Flickr using the FlickrAPI. What is happening is that the command line just stays there and nothing happens after the image URLs have been scraped. It's something like the following:

Nothing happens after this screen, it stays here for a long time, somewhere in the range of 1200 seconds or more sometimes.

For scraping I used the following code:

def get_urls(search='honeybees on flowers', n=10, download=False):
    t = time.time()
    flickr = FlickrAPI(key, secret)
    license = ()  # https://www.flickr.com/services/api/explore/?method=flickr.photos.licenses.getInfo
    photos = flickr.walk(text=search,  # http://www.flickr.com/services/api/flickr.photos.search.html
                         extras='url_o',
                         per_page=500,  # 1-500
                         license=license,
                         sort='relevance')
    if download:
        dir = os.getcwd() + os.sep + 'images' + os.sep + search.replace(' ', '_') + os.sep  # save directory
        if not os.path.exists(dir):
            os.makedirs(dir)
    urls = []
    for i, photo in enumerate(photos):
        if i < n:
            try:
                # construct url https://www.flickr.com/services/api/misc.urls.html
                url = photo.get('url_o')  # original size
                if url is None:
                    url = 'https://farm%s.staticflickr.com/%s/%s_%s_b.jpg' % \
                          (photo.get('farm'), photo.get('server'), photo.get('id'), photo.get('secret'))  # large size
                # download
                if download:
                    download_uri(url, dir)
                urls.append(url)
                print('%g/%g %s' % (i, n, url))
            except:
                print('%g/%g error...' % (i, n))
    # import pandas as pd
    # urls = pd.Series(urls)
    # urls.to_csv(search + "_urls.csv")
    print('Done. (%.1fs)' % (time.time() - t) + ('\nAll images saved to %s' % dir if download else ''))

This function is called as follows:

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--search', type=str, default='honeybees on flowers', help='flickr search term')
    parser.add_argument('--n', type=int, default=10, help='number of images')
    parser.add_argument('--download', action='store_true', help='download images')
    opt = parser.parse_args()
    get_urls(search=opt.search,  # search term
             n=opt.n,  # max number of images
             download=opt.download)  # download images

I tried going through the function code multiple times but I can't seem to understand why nothing happens after the scraping is done, as everything else is working fine.

CodePudding user response:

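I can't run it, but I think the whole problem is that the for-loop doesn't stop after n images. Because you have per_page=500, it gets information about 500 photos at a time, and as far as I know flickr.walk() keeps requesting further pages until the search results are exhausted. The for-loop runs through all of those photos, and you have to wait until it ends.

You should use break to exit this loop after n images: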

for i, photo in enumerate(photos):
    if i >= n:
        break
    else:
        try:
            # ...code ...

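Or, more simply, you can limit the loop to the first n photos, and then you don't have to check i < n. If photos were a list you could write photos[:n], but flickr.walk() should return a generator, which can't be sliced, so use itertools.islice(photos, n) instead (this needs import itertools):

for i, photo in enumerate(itertools.islice(photos, n)):
    try:
        # ...code ...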

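You could also set per_page=min(n, 500), so that each request doesn't fetch more photos than you need. The loop still has to stop after n images.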
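To see why stopping early matters, here is a minimal sketch that doesn't touch Flickr at all. `fake_walk` is a hypothetical stand-in for `flickr.walk()`. It assumes the real function behaves like a lazy generator that requests one page of `per_page` results at a time. The totals and helper names are made up for illustration.

```python
import itertools


def fake_walk(total, per_page, log):
    """Hypothetical stand-in for flickr.walk(): yields items lazily, page by page."""
    for start in range(0, total, per_page):
        log.append(start // per_page + 1)  # record that a page was "requested"
        for item in range(start, min(start + per_page, total)):
            yield item


def take_without_break(n, total=2000, per_page=500):
    """Mimics the question's loop: skips items after n but never stops iterating."""
    log, urls = [], []
    for i, photo in enumerate(fake_walk(total, per_page, log)):
        if i < n:
            urls.append(photo)
    return urls, len(log)


def take_with_islice(n, total=2000, per_page=500):
    """Stops pulling from the generator as soon as n items have been taken."""
    log = []
    urls = list(itertools.islice(fake_walk(total, per_page, log), n))
    return urls, len(log)


if __name__ == '__main__':
    print(take_without_break(10)[1])  # 4 pages requested
    print(take_with_islice(10)[1])    # 1 page requested
```

Both versions return the same 10 items. The only difference is how many pages get requested along the way, and with a real search that has many thousands of results, that is where the waiting would come from.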

BTW:

You can use os.path.join to create the path:

dir = os.path.join(os.getcwd(), 'images', search.replace(' ', '_'))

If you use exist_ok=True in makedirs() then you don't have to check if not os.path.exists(dir):

if download:
    dir = os.path.join(os.getcwd(), 'images', search.replace(' ', '_'))
    os.makedirs(dir, exist_ok=True)

If you use enumerate(photos, 1) then you get values 1,2,3,... instead of 0,1,2,...
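The three side notes above can be tried out together in a small standalone sketch. The helper names `make_save_dir` and `numbered` are made up for this example, and it writes into a temporary directory instead of os.getcwd() so it doesn't leave anything behind.

```python
import os
import tempfile


def make_save_dir(base, search):
    """Build <base>/images/<search_with_underscores> and create it if missing."""
    save_dir = os.path.join(base, 'images', search.replace(' ', '_'))
    os.makedirs(save_dir, exist_ok=True)  # no error if the directory already exists
    return save_dir


def numbered(urls):
    """Format progress lines that count from 1 instead of 0."""
    return ['%d/%d %s' % (i, len(urls), url) for i, url in enumerate(urls, 1)]


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as base:
        d = make_save_dir(base, 'honeybees on flowers')
        make_save_dir(base, 'honeybees on flowers')  # calling it again is harmless
        print(os.path.isdir(d))  # True
    print(numbered(['a.jpg', 'b.jpg']))  # ['1/2 a.jpg', '2/2 b.jpg']
```

One difference from the question's code: os.path.join doesn't leave a trailing separator on the directory. If download_uri (not shown in the question) builds the file path by plain string concatenation, that part would need adjusting.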