I have a dataset like below:

```
+---+----------+
| id|    t_date|
+---+----------+
|  1|1635234395|
|  1|1635233361|
+---+----------+
```

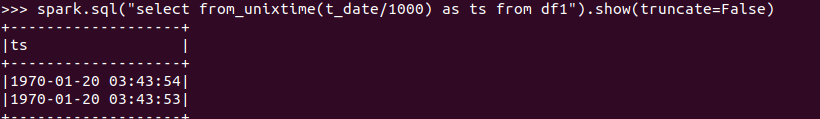

Here, t_date holds epoch seconds for today's date. I want to convert it to a timestamp, but the code I tried below gives the wrong output:

I referred to the two links below, but had no luck:

- How do I convert column of unix epoch to Date in Apache spark DataFrame using Java?

- Converting epoch to datetime in PySpark data frame using udf

Answer:

You don't have to divide it by 1000. t_date is already in seconds, which is what from_unixtime expects, so you can pass the column to it directly.
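To see why the division causes the wrong output, here is a minimal sketch in plain Python (standard library only, outside Spark) using the first t_date value from the question. A 10-digit epoch value is in seconds; dividing it by 1000 treats it as milliseconds and lands in January 1970.

```python
from datetime import datetime, timezone

t_date = 1635234395  # first t_date value from the question

# A 10-digit epoch value is seconds; millisecond values have 13 digits
print(len(str(t_date)))  # 10

# Interpreted as seconds, which is what from_unixtime expects
as_seconds = datetime.fromtimestamp(t_date, tz=timezone.utc)
print(as_seconds)  # 2021-10-26 07:46:35+00:00

# Dividing by 1000 wrongly treats the value as milliseconds
as_millis = datetime.fromtimestamp(t_date / 1000, tz=timezone.utc)
print(as_millis)  # a time on 1970-01-19
```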

Data Preparation

input_str = """

1,1635234395,

1,1635233361

""".split(",")

input_values = list(map(lambda x: x.strip() if x.strip() != 'null' else None, input_str))

cols = list(map(lambda x: x.strip() if x.strip() != 'null' else None, "id,t_date".split(',')))

n = len(input_values)

n_col = 2

input_list = [tuple(input_values[i:i n_col]) for i in range(0,n,n_col)]

input_list

sparkDF = sql.createDataFrame(input_list, cols)

sparkDF = sparkDF.withColumn('t_date',F.col('t_date').cast('long'))

sparkDF.show()

```
+---+----------+
| id|    t_date|
+---+----------+
|  1|1635234395|
|  1|1635233361|
+---+----------+
```
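As an alternative to the split/strip/chunk step above (not the approach this answer uses), the rows could be parsed with Python's csv module and converted to int up front. This is only a sketch; `raw` and `rows` are illustrative names, not part of the original answer.

```python
import csv
import io

# Illustrative raw input, one "id,t_date" record per line
raw = """1,1635234395
1,1635233361"""

# Parse each record and convert both fields to int
rows = [(int(id_), int(t_date)) for id_, t_date in csv.reader(io.StringIO(raw))]

print(rows)  # [(1, 1635234395), (1, 1635233361)]
```

Spark infers Python int values as LongType, so passing these rows to sql.createDataFrame(rows, ['id', 't_date']) should give bigint columns without the extra cast.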

From Unix Time

```python
sparkDF.withColumn('t_date_parsed', F.from_unixtime(F.col('t_date'))).show()
```

```
+---+----------+-------------------+
| id|    t_date|      t_date_parsed|
+---+----------+-------------------+
|  1|1635234395|2021-10-26 13:16:35|
|  1|1635233361|2021-10-26 12:59:21|
+---+----------+-------------------+
```
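from_unixtime formats the result in the Spark session time zone (spark.sql.session.timeZone), not in UTC. The sketch below reproduces the t_date_parsed values in plain Python. It assumes the session time zone was UTC+05:30 (for example Asia/Kolkata); that is inferred from the output above and not stated in the answer.

```python
from datetime import datetime, timedelta, timezone

# Assumption: session time zone of UTC+05:30, inferred from the output above
session_tz = timezone(timedelta(hours=5, minutes=30))
fmt = '%Y-%m-%d %H:%M:%S'  # matches from_unixtime's default 'yyyy-MM-dd HH:mm:ss'

results = []
for t_date in (1635234395, 1635233361):
    utc = datetime.fromtimestamp(t_date, tz=timezone.utc)
    local = utc.astimezone(session_tz)
    results.append((utc.strftime(fmt), local.strftime(fmt)))
    print(utc.strftime(fmt), '->', local.strftime(fmt))
```

On a session set to UTC, the same column would show 07:46:35 and 07:29:21 instead. Also note that from_unixtime returns a string column; if an actual TimestampType column is needed, F.col('t_date').cast('timestamp') or F.timestamp_seconds('t_date') (Spark 3.1+) convert epoch seconds directly.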