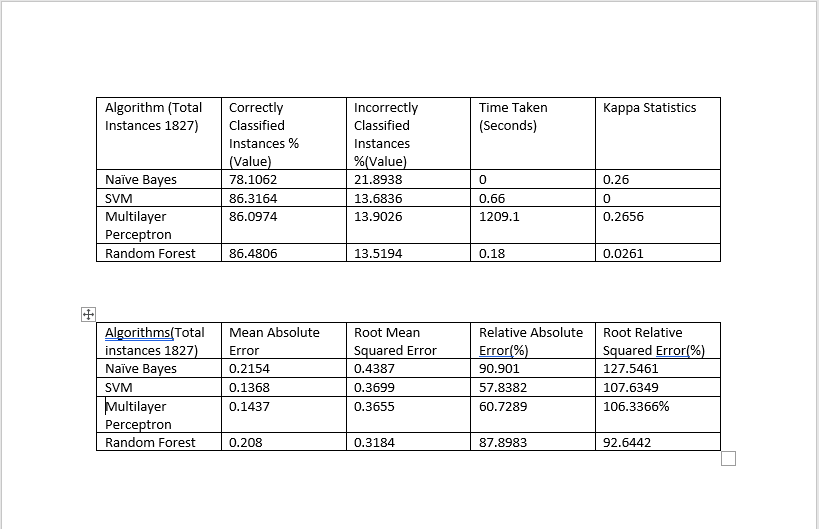

I ran several different classifiers on the same dataset and collected some statistics from each run.

This is the summary of all classifiers:

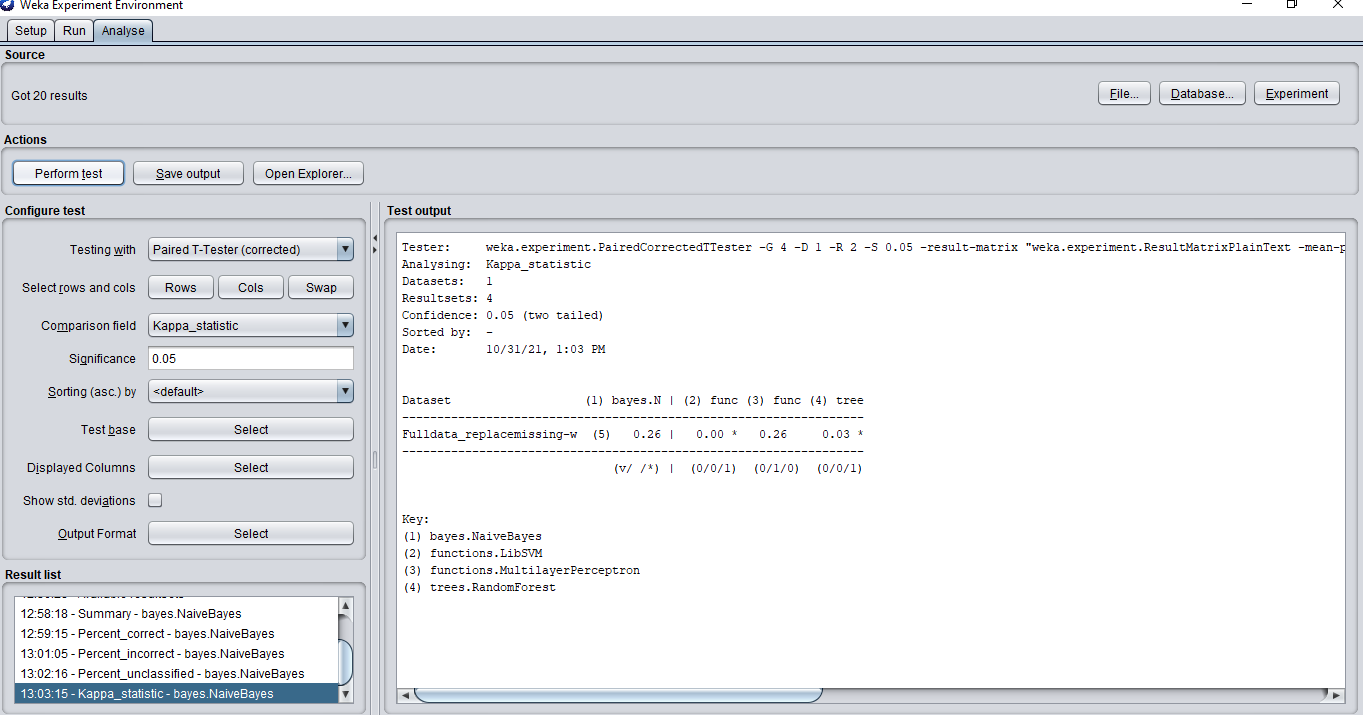

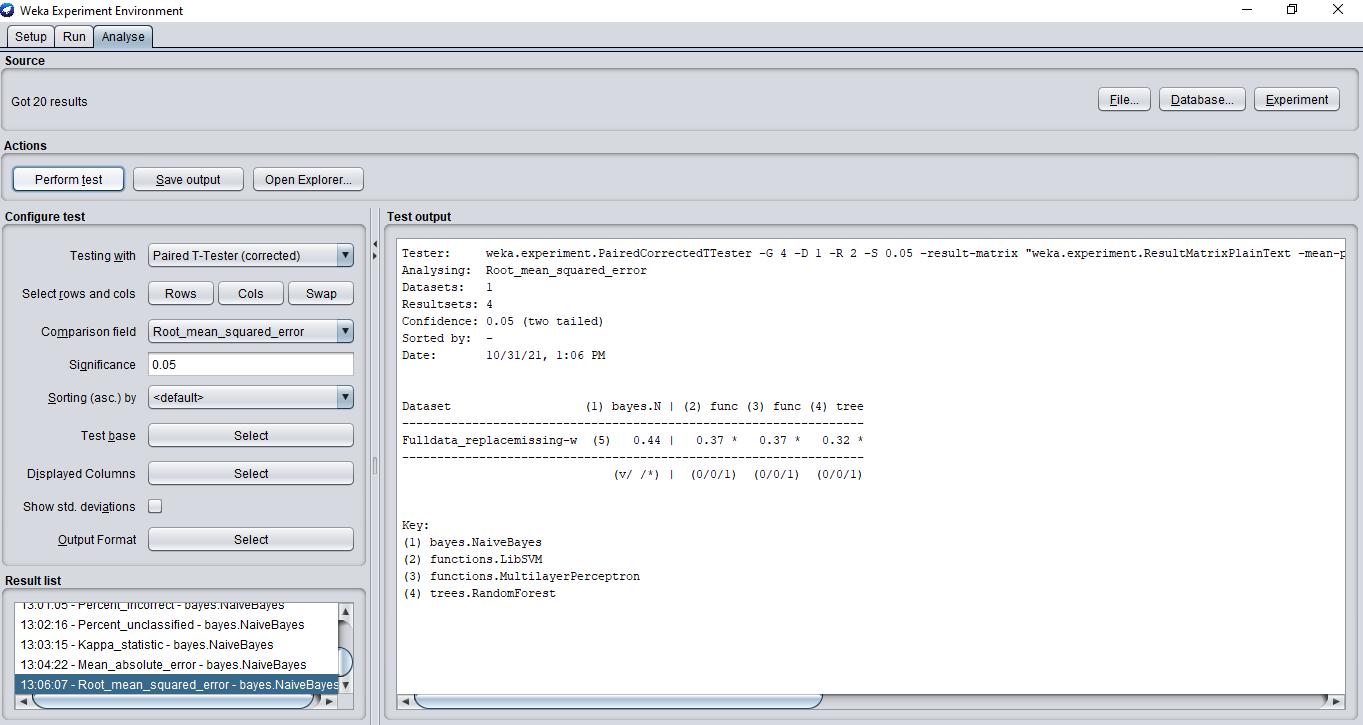

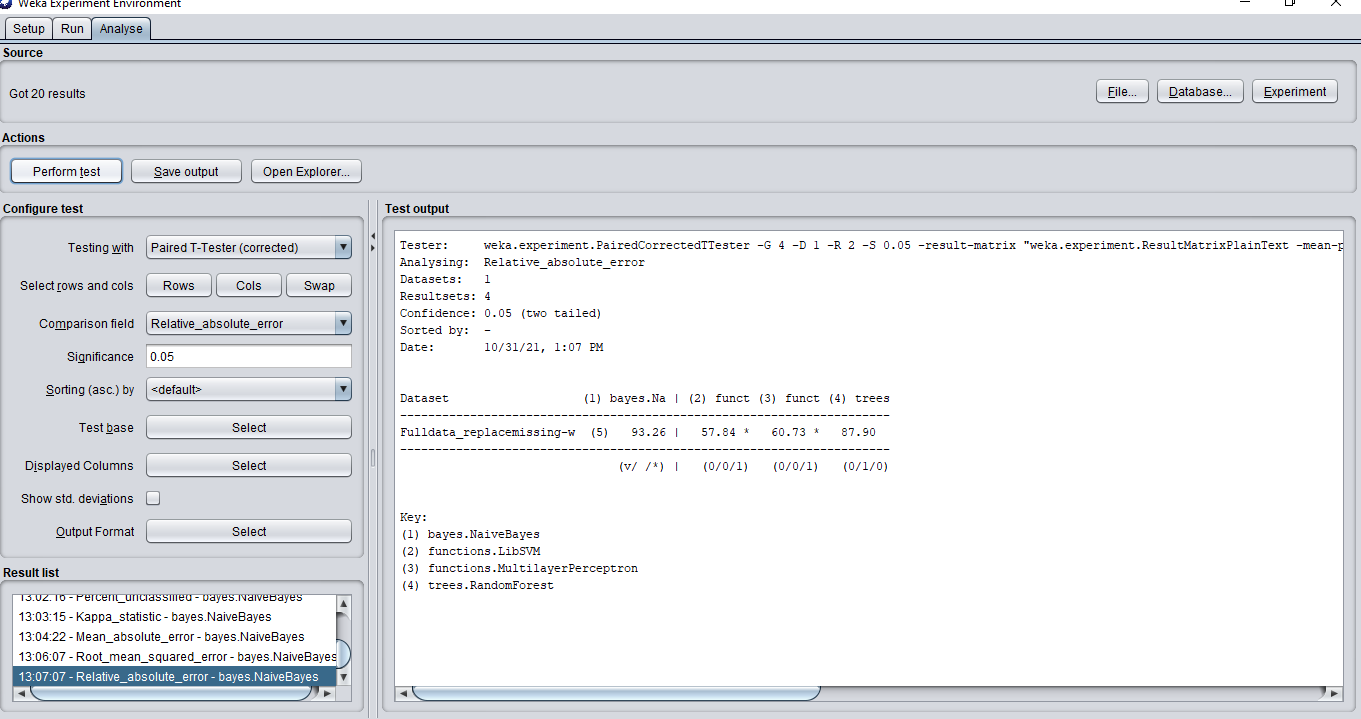

I am using Weka to train the models. Weka also has a built-in way to compare different algorithms: the Experimenter, which you use through the Experiment tab. I ran the same dataset through it as well.

Using the Experiment tab, Weka reported results for the Kappa statistic, root mean squared error, relative absolute error, and so on.

What I can't work out is how the values I got from the Experiment tab relate to the values shown in the table in the first picture.

Answer:

I presume that the initial table was populated with statistics obtained from cross-validation runs in the Weka Explorer.

The Explorer pools the predictions from all folds of a single cross-validation run, so the statistics are computed as if you had one test set the size of the whole dataset. It is meant only as an exploratory tool, hence the name.
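To see why pooling matters, here is a minimal sketch in plain Python (no Weka calls) using made-up predictions from a hypothetical 2-fold CV. It computes RMSE once over the pooled predictions, roughly what the Explorer reports, and once per fold followed by averaging, which is the per-fold view the Experimenter works with. The fold data and the `rmse` helper are illustrative assumptions, not Weka internals.

```python
import math

def rmse(pairs):
    """Root mean squared error over (actual, predicted) pairs."""
    return math.sqrt(sum((a - p) ** 2 for a, p in pairs) / len(pairs))

# Hypothetical predictions from a 2-fold CV: (actual, predicted) per instance.
folds = [
    [(1.0, 0.9), (0.0, 0.1), (1.0, 0.8)],  # fold 1: small errors
    [(1.0, 0.2), (0.0, 0.7), (1.0, 0.5)],  # fold 2: large errors
]

# Explorer-style: pool every fold's predictions into one "test set".
pooled = rmse([pair for fold in folds for pair in fold])

# Experimenter-style: one RMSE per fold, then the mean of those values.
per_fold = [rmse(fold) for fold in folds]
averaged = sum(per_fold) / len(per_fold)

print(f"pooled RMSE   = {pooled:.4f}")
print(f"averaged RMSE = {averaged:.4f}")
```

With these numbers the pooled RMSE is sqrt(0.24), about 0.49, while the average of the two per-fold RMSEs is about 0.41. Accuracy over equal-sized folds comes out the same either way, but non-linear statistics such as RMSE or kappa generally do not, which is one reason the two tabs disagree.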

The Experimenter records the metrics (accuracy, RMSE, etc.) for each train/test fold pair across all the runs you perform in your experiment. The metrics collected for multiple classifiers and/or datasets can then be compared using significance tests. By default, 10 runs of 10-fold CV are used, which is the recommended setup for such comparisons. This yields 100 individual values per metric, from which the mean and standard deviation are computed. The markers * and v indicate a statistically significant loss or win, respectively, relative to the base classifier.
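As far as I know, the Experimenter's default tester is the corrected paired t-test, which applies Nadeau and Bengio's correction because CV training sets overlap between folds. The sketch below reproduces that formula on hypothetical per-fold accuracies (10 runs x 10 folds = 100 values each). It is an illustration, not Weka's code. The function name `corrected_paired_t`, its `test_train_ratio` parameter and the simulated data are all assumptions.

```python
import math
import random
import statistics

def corrected_paired_t(a, b, test_train_ratio):
    """Corrected resampled t statistic (Nadeau & Bengio) for paired per-fold scores.

    a, b: per-fold scores of two classifiers, same runs/folds in the same order.
    test_train_ratio: n_test / n_train, i.e. 1/9 for 10-fold CV.
    """
    diffs = [x - y for x, y in zip(a, b)]
    k = len(diffs)
    mean_d = statistics.mean(diffs)
    var_d = statistics.variance(diffs)  # sample variance, k - 1 denominator
    # The 1/k term alone would give the ordinary paired t-test; the ratio term
    # inflates the variance because the training sets overlap between folds.
    t = mean_d / math.sqrt((1.0 / k + test_train_ratio) * var_d)
    return t, k - 1  # t statistic and its degrees of freedom

# Hypothetical per-fold accuracies: 10 runs x 10 folds = 100 values each.
rng = random.Random(1)
acc_a = [0.85 + rng.gauss(0, 0.03) for _ in range(100)]
acc_b = [x - 0.02 + rng.gauss(0, 0.01) for x in acc_a]  # paired with acc_a

# Mean and standard deviation over the 100 values, as the Experimenter reports.
for name, vals in (("A", acc_a), ("B", acc_b)):
    print(f"{name}: {statistics.mean(vals):.4f} +/- {statistics.stdev(vals):.4f}")

t, df = corrected_paired_t(acc_b, acc_a, test_train_ratio=1 / 9)
print(f"corrected t = {t:.3f} with {df} degrees of freedom")
```

You would compare |t| against the t distribution with k - 1 degrees of freedom at the chosen significance level; a significant negative t for B against base A is the kind of result the Experimenter marks with *.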