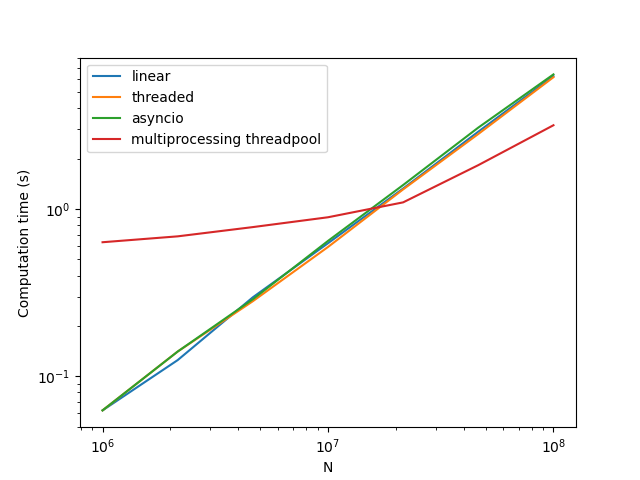

I am trying to streamline a program that involves a set of short tasks that can be done in parallel, where the results of the set of tasks must be compared before moving onto the next step (which again involves a set of short tasks, and then another set, etc.). Due to the level of complexity of these tasks, it's not worthwhile to use multiprocessing due to the set-up time. I am wondering if there is another way to do these short tasks in parallel that is faster than linear. The

CodePudding user response:

My first observation is that the running time of function numbers can be cut roughly in half by simply defining it as:

def numbers(a, b):

return range(a, b)

Second, a task that is 100% CPU-intensive as is computing the sum of numbers can never perform significantly better using pure Python without the aid of a C-language runtime library (such as numpy) because of contention for the Global Interpreter Lock (GIL), which prevents any sort of parallelization from occurring (and asyncio only uses a single thread to being with).

Third, the only way you can achieve a performance improvement running pure Python code against a 100% CPU task is with multiprocessing. But there is CPU overhead in creating the process pool and CPU overhead in passing arguments from the main process to the the address space in which the process pool's processes are running in and overhead again in passing back the results. So for there to be any performance improvement, the worker function linear_sum, cannot be trivial; it must require enough CPU processing to warrant the additional overhead I just mentioned.

The following benchmark runs the worker function, renamed to compute_sum and which now accepts as its argument a range. To further reduce overhead, I have introduced a function split that will take the passed range argument and generate multiple range instances removing the need to use numpy and to generate arrays. The benchmark computes the sum using a single thread (linear), a multithreading pool and a multiprocessing pool and is run twice for n = 2000 and n = 50_000_000. The benchmark displays the elapsed time and total CPU time across all processes.

For n = 2000, multiprocessing, as expected, performs worse than both linear and multithreading. For n = 50_000_000, multiprocessing's total CPU time is a bit higher than for linear and multithreading as is expected due to the additional aforementioned overhead. But now the elapsed time has gone down significantly. For both values of n, multithreading is a loser.

from multiprocessing.pool import Pool, ThreadPool

import time

def split(iterable, n):

k, m = divmod(len(iterable), n)

return (iterable[i * k min(i, m):(i 1) * k min(i 1, m)] for i in range(n))

def compute_sum(r):

t = time.process_time()

return (sum(r), time.process_time() - t)

if __name__ == '__main__':

for n in (2000, 50_000_000):

r = range(0, n 1)

t1 = time.time()

s, cpu = compute_sum(r)

elapsed = time.time() - t1

print(f'n = {n}, linear elapsed time = {elapsed}, total cpu time = {cpu}, sum = {s}')

t1 = time.time()

t2 = time.process_time()

thread_pool = ThreadPool(4)

s = 0

for return_value, process_time in thread_pool.imap_unordered(compute_sum, split(r, 4)):

s = return_value

elapsed = time.time() - t1

cpu = time.process_time() - t2

print(f'n = {n}, thread pool elapsed time = {elapsed}, total cpu time = {cpu}, sum = {s}')

thread_pool.close()

thread_pool.join()

t1 = time.time()

t2 = time.process_time()

pool = Pool(4)

s = 0

cpu = 0

for return_value, process_time in pool.imap_unordered(compute_sum, split(r, 4)):

s = return_value

cpu = process_time

elapsed = time.time() - t1

cpu = time.process_time() - t2

print(f'n = {n}, multiprocessing elapsed time = {elapsed}, total cpu time = {cpu}, sum = {s}')

pool.close()

pool.join()

print()

Prints:

n = 2000, linear elapsed time = 0.0, total cpu time = 0.0, sum = 2001000

n = 2000, thread pool elapsed time = 0.00700068473815918, total cpu time = 0.015625, sum = 2001000

n = 2000, multiprocessing elapsed time = 0.13200139999389648, total cpu time = 0.015625, sum = 2001000

n = 50000000, linear elapsed time = 2.0311124324798584, total cpu time = 2.03125, sum = 1250000025000000

n = 50000000, thread pool elapsed time = 2.050999164581299, total cpu time = 2.046875, sum = 1250000025000000

n = 50000000, multiprocessing elapsed time = 0.7579991817474365, total cpu time = 2.359375, sum = 125000002500000