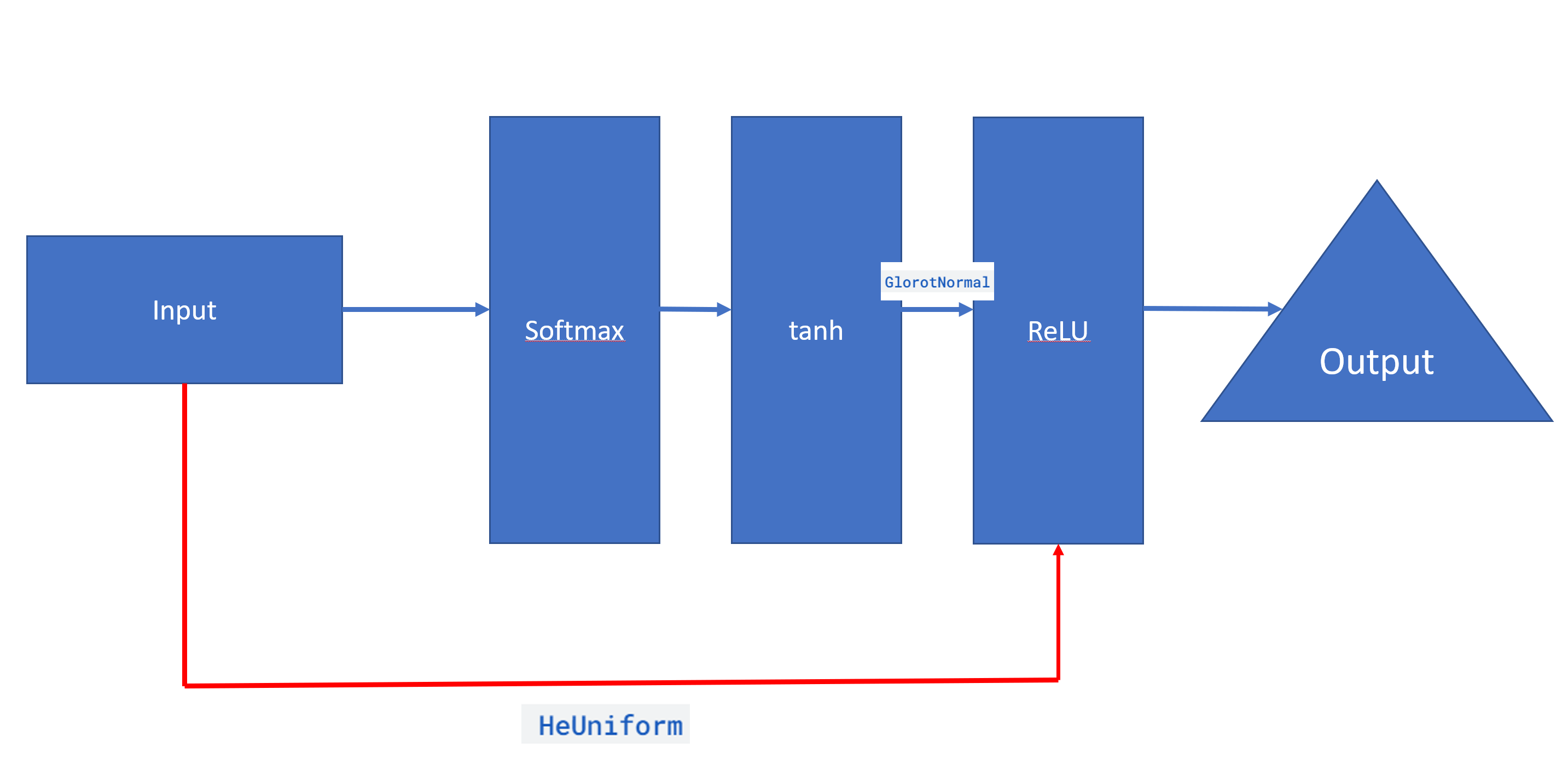

I was wondering whether it is possible, using TensorFlow, to create a custom network structure in which the input layer has an extra connection to a hidden layer that is not adjacent to it. As an example, suppose I have the simple network shown below.

```python
import numpy as np
import random
import tensorflow as tf
from tensorflow import keras

m = 200
n = 5

my_input = np.random.random([m, n])
my_output = np.random.random([m, 1])

my_model = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=(my_input.shape[1],)),
    tf.keras.layers.Dense(32, activation='softmax'),
    tf.keras.layers.Dense(32, activation='tanh'),
    tf.keras.layers.Dense(32, activation='relu'),
    tf.keras.layers.Dense(1)
])

my_model.compile(loss='mse', optimizer=tf.keras.optimizers.Adam(learning_rate=0.001))
res = my_model.fit(my_input, my_output, epochs=50, batch_size=1, verbose=0)
```

Is there a way for the first layer, which holds the input values, to have an extra connection to the third layer, the one with the ReLU activation? I'd also like each connection to have its own settings. For the connection coming from the previous layer, I'd like to use GlorotNormal weight initialization. For the extra connection coming from the input layer, I'd like to use HeUniform initialization.

I tried to visualize what I have in mind below.

CodePudding user response:

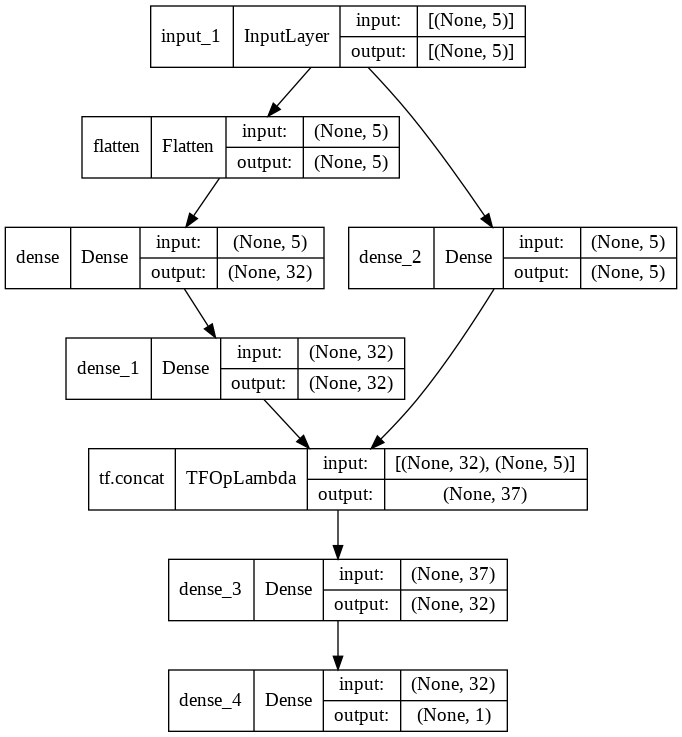

Use the Keras functional API and tf.concat:

```python
import numpy as np
import random
import tensorflow as tf
from tensorflow import keras

m = 200
n = 5

my_input = np.random.random([m, n])
my_output = np.random.random([m, 1])

# Main path: input -> softmax layer -> tanh layer (GlorotNormal weights).
inputs = tf.keras.layers.Input((my_input.shape[1],))
x = tf.keras.layers.Flatten()(inputs)
x = tf.keras.layers.Dense(32, activation='softmax')(x)
x = tf.keras.layers.Dense(32, activation='tanh', kernel_initializer=tf.keras.initializers.GlorotNormal())(x)

# Extra path: a linear projection of the raw input with HeUniform weights.
y = tf.keras.layers.Dense(my_input.shape[1], kernel_initializer=tf.keras.initializers.HeUniform())(inputs)

# Concatenate both paths and feed the result to the ReLU layer.
x = tf.keras.layers.Dense(32, activation='relu')(tf.concat([x, y], axis=1))
outputs = tf.keras.layers.Dense(1)(x)

my_model = tf.keras.Model(inputs, outputs)

# Save a diagram of the resulting graph (requires pydot and Graphviz).
dot_img_file = 'model_1.png'
tf.keras.utils.plot_model(my_model, to_file=dot_img_file, show_shapes=True)

my_model.compile(loss='mse', optimizer=tf.keras.optimizers.Adam(learning_rate=0.001))
res = my_model.fit(my_input, my_output, epochs=50, batch_size=1, verbose=0)
```
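In the code above, GlorotNormal is set on the tanh layer itself, and the ReLU layer that receives the concatenated tensor uses the default initializer for all of its weights. If the goal is for the two connections entering the ReLU layer to each have their own initializer, one possible variation is to give each connection its own `Dense` layer, sum the results, and only then apply ReLU. This is a minimal sketch rather than the answerer's method. It relies on the fact that a dense layer applied to a concatenation `[x, y]` computes `x @ W1 + y @ W2 + b`, which is the same as adding two separate linear layers. The names `from_hidden`, `from_input` and `skip_model` are made up for illustration, and the epoch count and batch size are reduced only to keep the example quick.

```python
import numpy as np
import tensorflow as tf

m, n = 200, 5
my_input = np.random.random([m, n]).astype("float32")
my_output = np.random.random([m, 1]).astype("float32")

inputs = tf.keras.layers.Input(shape=(n,))
h = tf.keras.layers.Dense(32, activation="softmax")(inputs)
h = tf.keras.layers.Dense(32, activation="tanh")(h)

# Connection from the previous hidden layer: GlorotNormal weights (plus the bias).
from_hidden = tf.keras.layers.Dense(
    32,
    kernel_initializer=tf.keras.initializers.GlorotNormal(),
    name="from_hidden",  # illustrative name
)(h)

# Extra connection from the input layer: HeUniform weights, no second bias.
from_input = tf.keras.layers.Dense(
    32,
    use_bias=False,
    kernel_initializer=tf.keras.initializers.HeUniform(),
    name="from_input",  # illustrative name
)(inputs)

# Sum both pre-activations, then apply ReLU once to form the third layer.
merged = tf.keras.layers.Add()([from_hidden, from_input])
h = tf.keras.layers.Activation("relu")(merged)
outputs = tf.keras.layers.Dense(1)(h)

skip_model = tf.keras.Model(inputs, outputs)
skip_model.compile(loss="mse", optimizer=tf.keras.optimizers.Adam(learning_rate=0.001))
history = skip_model.fit(my_input, my_output, epochs=2, batch_size=32, verbose=0)
```

Because each connection is a separate layer, other per-connection settings such as `kernel_constraint` or `kernel_regularizer` can be passed to `from_hidden` and `from_input` independently.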