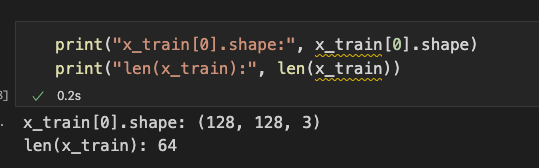

I have two batches of length 64. Each element is an ndarray of shape (128, 128, 3).

My Code:

    ae_encoder = Conv2D(32, (2, 2), padding='same')(input)
    ae_encoder = LeakyReLU()(ae_encoder)
    ae_encoder = Conv2D(64, (3, 3), padding='same', strides=(2, 2))(ae_encoder)
    ae_encoder = LeakyReLU()(ae_encoder)
    ae_encoder = Conv2D(128, (3, 3), padding='same', strides=(2, 2))(ae_encoder)
    ae_encoder = LeakyReLU()(ae_encoder)
    ae_encoder = Conv2D(256, (3, 3), padding='same', strides=(2, 2))(ae_encoder)
    ae_encoder = LeakyReLU()(ae_encoder)
    ae_encoder = Conv2D(512, (3, 3), padding='same', strides=(2, 2))(ae_encoder)
    ae_encoder = LeakyReLU()(ae_encoder)
    ae_encoder = Conv2D(1024, (3, 3), padding='same', strides=(2, 2))(ae_encoder)
    ae_encoder = LeakyReLU()(ae_encoder)

    # Flattening for the bottleneck
    vol = ae_encoder.shape
    ae_encoder = Flatten()(ae_encoder)
    ae_encoder_output = Dense(Z_DIM, activation='relu')(ae_encoder)
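As a sanity check on the encoder above, the shape that reaches `Flatten` can be worked out by hand. The following is a minimal sketch in plain Python, assuming the usual rule that a convolution with `padding='same'` produces a spatial size of `ceil(input_size / stride)`. The helper name `same_padding_output_size` is made up for illustration and is not part of Keras.

```python
import math


def same_padding_output_size(size: int, stride: int) -> int:
    """Spatial output size of a convolution that uses padding='same'."""
    return math.ceil(size / stride)


# (filters, stride) for each Conv2D layer in the encoder, in order.
# The first layer has no strides argument, so its stride is 1.
encoder_layers = [(32, 1), (64, 2), (128, 2), (256, 2), (512, 2), (1024, 2)]

height = width = 128  # each input image has shape (128, 128, 3)
shapes = []
for filters, stride in encoder_layers:
    height = same_padding_output_size(height, stride)
    width = same_padding_output_size(width, stride)
    shapes.append((height, width, filters))

# Number of values per sample after Flatten()
flattened_units = height * width * filters

print(shapes[-1])       # (4, 4, 1024)
print(flattened_units)  # 16384
```

None of these per-sample shapes involve the number 64, which suggests the error comes from how the data is passed to the model rather than from the layer definitions.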

I can't figure out why it is treating the entire batch (of size 64) as different channels. Shouldn't it be iterating over the ndarrays inside these batches?

Error:

ValueError: Layer "model_3" expects 1 input(s), but it received 64 input tensors.

Update 1: x_train and y_train are both lists of length 64, and each element has shape (128, 128, 3).

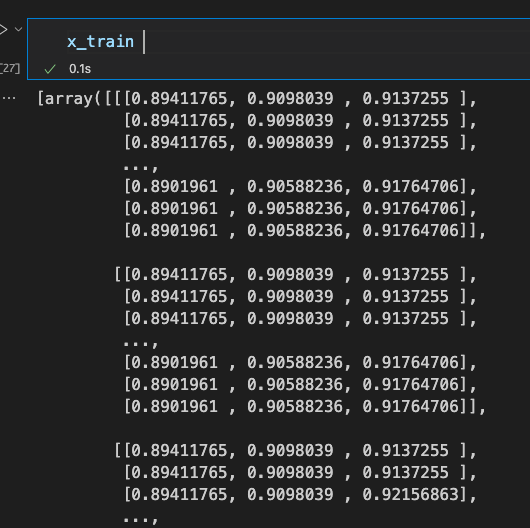

Sample input (the input is quite large, so I can't copy it entirely)

Answer:

If you are trying to implement a vanilla autoencoder, where the input shape should equal the output shape, then you have to change the last decoder layer to this:

    ae_decoder_output = tf.keras.layers.Conv2D(3, (3, 3), activation='sigmoid', padding='same', strides=(1, 1))(ae_decoder)

This results in the output shape (None, 128, 128, 3). Also, you need to make sure that your data has the shape (samples, 128, 128, 3), i.e. a single array rather than a Python list of 64 separate arrays.
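The error message is consistent with Keras interpreting a Python list as one array per model input, so a list of 64 images looks like 64 separate inputs. A minimal sketch of the conversion, assuming NumPy is available and using zero-filled stand-in data in place of the real images (the name `x_train_list` is hypothetical):

```python
import numpy as np

# Stand-in for the real data: a Python list of 64 images,
# each an ndarray of shape (128, 128, 3).
x_train_list = [np.zeros((128, 128, 3), dtype=np.float32) for _ in range(64)]

# np.stack joins the list along a new leading axis, giving one array
# of shape (samples, height, width, channels).
x_train = np.stack(x_train_list, axis=0)

# Assumption: for a plain autoencoder the target is the input itself.
y_train = x_train

print(type(x_train_list).__name__, len(x_train_list))  # list 64
print(x_train.shape)                                   # (64, 128, 128, 3)
```

The stacked `x_train` and `y_train` can then be passed to `fit` as single arrays. If the real pixel values are not already in the range [0, 1], they would likely need scaling to match the sigmoid output layer.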