I have an VB.NET application that uses an Oracle OleDbDataReader to pull ~15 million rows from an Oracle database and write them to a | delimited text file.

Private Sub GenerateTextSqlReportWithCurrent(report As TblreportEntity, filename As String)

Const batchSize = 20000

Dim encryption As New ClassEncrypt

'get data

LogEvent($"INFO: Opening DataReader for report {report.ReportName}")

Dim reader As OleDbDataReader = IMOracle2.GetDataReader(report.Sql, IMOracle2.GetConnectString(My.Settings.DB_Instance, encryption.Decrypt(My.Settings.DB_UserID), encryption.Decrypt(My.Settings.DB_PWD)))

LogEvent($"INFO: Finished Opening DataReader for report {report.ReportName}")

LogEvent($"INFO: writing {report.ReportName} to {filename}")

WriteToFile(filename, GetColumnTitlesHeader(reader), False)

Dim batch As New StringBuilder()

Dim lastReport As DateTime = DateTime.Now()

Dim rowCount As Integer

While reader.Read()

For i = 0 To reader.FieldCount - 1

Dim output As String

'' output = Replace(reader(i).ToString, vbCr, "")

output = Replace(reader.GetValue(i).ToString, vbCr, String.Empty)

output = Replace(output, vbLf, String.Empty)

output = Replace(output, "|", String.Empty)

batch.Append(output)

If i < reader.FieldCount - 1 Then

batch.Append("|")

End If

Next i

batch.Append(vbCrLf)

rowCount = 1

If rowCount Mod batchSize = 0 Then

Dim now = Date.Now

Dim sinceLastSeconds = DateDiff(DateInterval.Second, lastReport, now)

lastReport = now

LogEvent($"INFO: Processing row {rowCount} {sinceLastSeconds}s since last")

Dim fileWriteStart = Date.Now

'LogEvent($"INFO: Starting Writing {rowCount} row(s) to file for {report.ReportName}. {sinceLastSeconds}s since last")

WriteToFile(filename, batch.ToString(), True)

Dim fileWriteSeconds = DateDiff(DateInterval.Second, fileWriteStart, Date.Now)

LogEvent($"INFO: Finished Writing another {batchSize} row(s) to file in {fileWriteSeconds}s for {report.ReportName}")

batch.Clear()

End If

End While

'LogEvent($"INFO: Starting Writing {rowCount} row(s) to {filename} for {report.ReportName}")

WriteToFile(filename, batch.ToString(), True)

LogEvent($"INFO: Finished Writing last row(s) to {filename} for {report.ReportName}")

End Sub

Public Shared Function GetDataReader(ByVal strSQL As String, ByVal strConnection As String) As OleDb.OleDbDataReader

Dim cnn As New OleDb.OleDbConnection(strConnection)

Dim cmd As New OleDbCommand(strSQL, cnn)

cnn.Open()

GetDataReader = cmd.ExecuteReader(CommandBehavior.CloseConnection)

End Function

When this Sub starts it writes a batch of rows to the text file in less than 1s

07/12/2021 16:41:03: INFO: Finished Writing another 20000 row(s) to file in 0s for TAG_ATTRIBUTES

07/12/2021 16:41:03: INFO: Processing row 100000 0s since last

Each batch is slightly slower than the one before and by 2.5 million rows this has slowed to ~9s per batch:

07/12/2021 16:51:47: INFO: Processing row 2560000 9s since last

07/12/2021 16:51:37: INFO: Finished Writing another 20000 row(s) to file in 0s for TAG_ATTRIBUTES

and by 15,000,000:

08/12/2021 05:23:07: INFO: Processing row 15000000 145s since last

08/12/2021 05:20:42: INFO: Finished Writing another 20000 row(s) to file in 0s for TAG_ATTRIBUTES

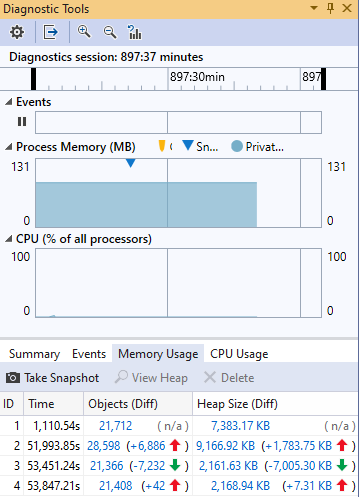

Process Memory usage in the app remains below 100MB throughout when monitored in the Visual Studio Diagnostic tools.

This is .Net Framework 4. AnyCPU

I wonder what might be causing the gradual slow down?

I've investigated building the entire content of the output file in a StringBuilder. The same gradual slow down happens but memory usage is in the GBytes as the StringBuilder populates.

CodePudding user response:

To demonstrate what I said in comments. Using blocks close and dispose the objects.

Public Shared Function GetDataTable(ByVal strSQL As String, ByVal strConnection As String) As OleDb.OleDbDataTable

Dim dt As New DataTable

Using cnn As New OleDb.OleDbConnection(strConnection),

cmd As New OleDbCommand(strSQL, cnn)

cnn.Open()

Using reader = cmd.ExecuteReader

dt.Load(reader)

End Using

End Using

Return dt

End Function

The parts of GenerateTextSqlReportWithCurrent that would change.

Dim ColumnNames As String() = From dc As DataColumn In dt.Columns

Select dc.ColumnName

Dim strNames = String.Join(", ", ColumnNames)

WriteToFile(filename, strNames, False)

Dim i As Integer

For Each row As DataRow In dt.Rows

For i = 0 To dt.Columns.Count - 1

Dim output As String

output = Replace(row(i).ToString, vbCr, String.Empty)

output = Replace(output, vbLf, String.Empty)

output = Replace(output, "|", String.Empty)

batch.Append(output)

If i < dt.Columns.Count - 1 Then

batch.Append("|")

End If

Next

Next

CodePudding user response:

Implementing Mary's suggestion, as follows, requires that all the data from the query is loaded into memory (15 million DataRow objects). After a few million DataRows were created the application slowed down and after 1 hour had only loaded 5 million rows with 3 GBytes of Process Memory in use. The application was executing the dt.Load(reader) line. So unfortunately this is not a practical when dealing with such large quantities of records.

I will go with a simple SSIS package to do this work. I do not understand how, but it is able to export 15 million rows to a text file in around 10 mins in my environment.

Private Sub GenerateTextSqlReportWithCurrent(report As TblreportEntity, filename As String)

Const batchSize = 20000

Dim encryption As New ClassEncrypt

LogEvent($"INFO: Filling DataTable for report {report.ReportName}")

Dim dt as DataTable = GetDataTable(report.Sql,IMOracle2.GetConnectString(My.Settings.DB_Instance, encryption.Decrypt(My.Settings.DB_UserID), encryption.Decrypt(My.Settings.DB_PWD)))

LogEvent($"INFO: Finished Filling DataTable for report {report.ReportName}")

Dim columnNames = From dc As DataColumn In dt.Columns

Select dc.ColumnName

Dim strNames = String.Join("|", columnNames)

WriteToFile(filename, strNames, False)

Dim batch As New StringBuilder()

Dim rowCount As Integer

Dim i As Integer

For Each row As DataRow In dt.Rows

For i = 0 To dt.Columns.Count - 1

Dim output As String

output = Replace(row(i).ToString, vbCr, String.Empty)

output = Replace(output, vbLf, String.Empty)

output = Replace(output, "|", String.Empty)

batch.Append(output)

If i < dt.Columns.Count - 1 Then

batch.Append("|")

End If

Next i

batch.Append(vbCrLf)

rowCount = 1

Dim lastReport As DateTime = DateTime.Now()

If rowCount Mod batchSize = 0 Then

Dim now = Date.Now

Dim sinceLastSeconds = DateDiff(DateInterval.Second, lastReport, now)

lastReport = now

LogEvent($"INFO: Processing row {rowCount} {sinceLastSeconds}s since last")

Dim fileWriteStart = Date.Now

'LogEvent($"INFO: Starting Writing {rowCount} row(s) to file for {report.ReportName}. {sinceLastSeconds}s since last")

WriteToFile(filename, batch.ToString(), True)

Dim fileWriteSeconds = DateDiff(DateInterval.Second, fileWriteStart, Date.Now)

LogEvent($"INFO: Finished Writing another {batchSize} row(s) to file in {fileWriteSeconds}s for {report.ReportName}")

batch.Clear()

End If

Next row

WriteToFile(filename, batch.ToString(), True)

LogEvent($"INFO: Finished Writing last row(s) to {filename} for {report.ReportName}")

End Sub