I am using a data flow activity to convert MongoDB data to SQL. As of now, MongoDB/Atlas is not supported as a source in data flows, so I first export the MongoDB data to a JSON file in Azure Blob Storage and then use that JSON file as the data flow source.

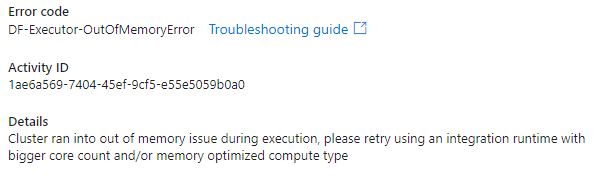

For a JSON source file of around 4 GB or more, the Azure Integration Runtime throws the error shown above whenever I try to import the projection. I have already changed the core count to 16 (+ 16 driver cores) and the cluster type to memory optimized.

Is there any other way to import the projection?

CodePudding user response:

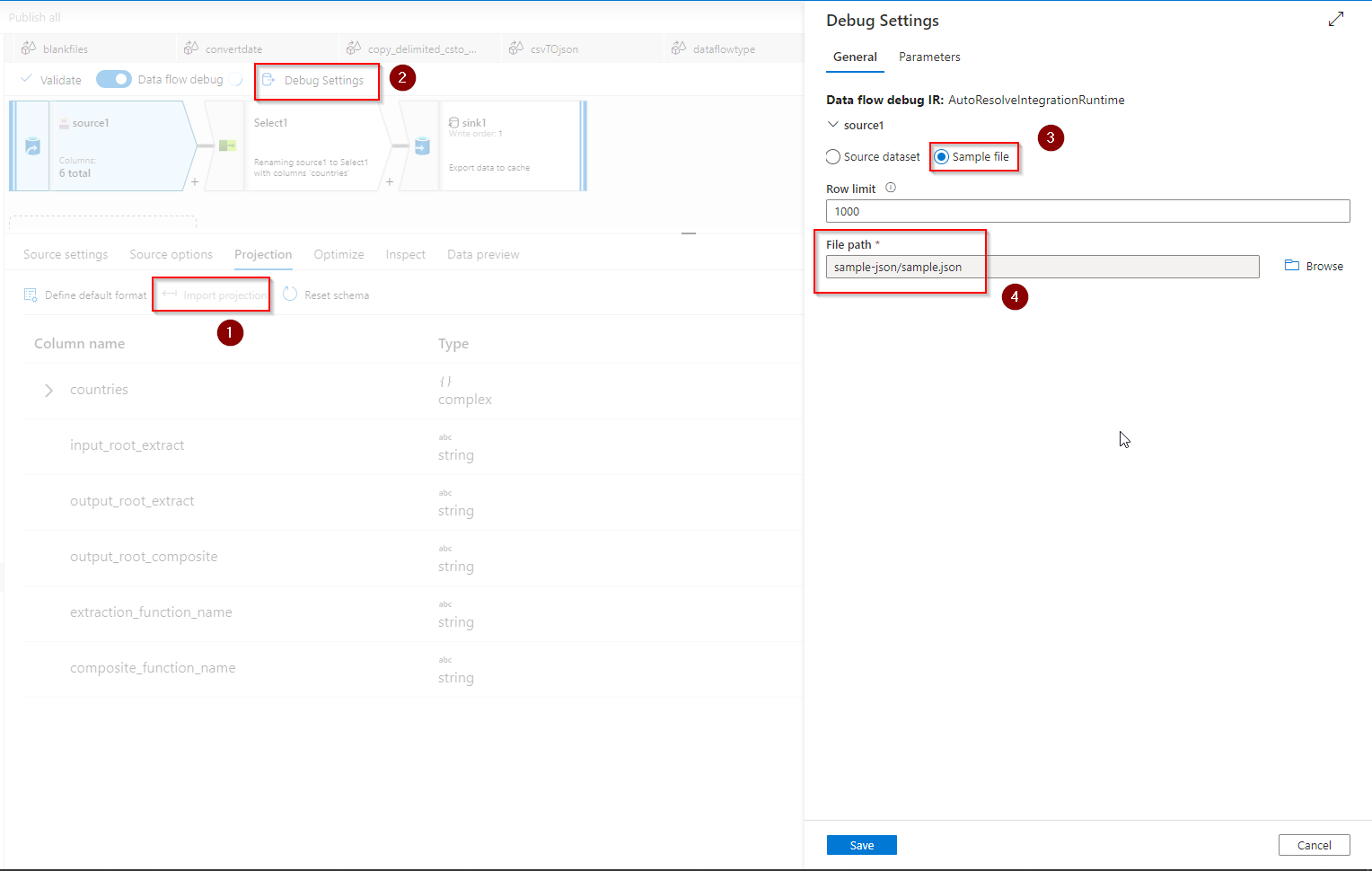

Since your source data is one large file with many rows and possibly complex schemas, you can create a temporary sample file with just a few rows that together contain all the columns you want to read, and then do the following:

1. In the data flow's Debug Settings, set the source to use the sample file, then select Import projection on the source to get the complete schema.

2. Next, roll back the Debug Settings so the source uses the original dataset for the remaining data movement/transformation.
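The temporary sample file mentioned above could be produced with a small script like the following. This is only a sketch: it assumes the exported file holds one JSON object per line (it will not work on a single large JSON array), it only looks at top-level keys, and the function names, file paths and `max_scan` limit are hypothetical choices for illustration. It streams the file line by line, so the whole 4 GB file is never loaded into memory.

```python
import json
from typing import Iterable, Iterator


def sample_rows_covering_keys(lines: Iterable[str], max_scan: int = 100_000) -> Iterator[str]:
    """Yield each row that introduces at least one top-level key not seen so far.

    Assumes every non-empty line is a single JSON object (line-delimited JSON).
    Scanning stops after max_scan lines, so keys that first appear later are missed.
    """
    seen: set[str] = set()
    for i, line in enumerate(lines):
        if i >= max_scan:
            break
        line = line.strip()
        if not line:
            continue  # skip blank lines
        doc = json.loads(line)
        new_keys = set(doc) - seen
        if new_keys:
            seen |= new_keys
            yield line


def write_sample(src_path: str, dst_path: str, max_scan: int = 100_000) -> int:
    """Write the covering rows from src_path to dst_path; return how many rows were kept."""
    count = 0
    with open(src_path, encoding="utf-8") as src, open(dst_path, "w", encoding="utf-8") as dst:
        for row in sample_rows_covering_keys(src, max_scan):
            dst.write(row + "\n")
            count += 1
    return count
```

For example, `write_sample("export.json", "sample.json")` (both paths are placeholders) would leave a small `sample.json` that can be uploaded next to the original file and selected as the sample file in Debug Settings. If important columns are nested or only appear very late in the file, the sample may need to be extended by hand.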

If you also want to map data types, you can follow the official Microsoft recommendation doc, since data type mapping is not directly supported for a JSON source.