So I made a program that'll get the schema from a table in a MySQL Server, create a table based on the said schema, and insert the data rows which are saved in a CSV file in a Google Cloud Storage.

Code for getting the schema from MySQL:

foreach (string table in listOfTableNames)

{

MySqlCommand query = new MySqlCommand($"desc {table}", openedMySQLConnection);

MySqlDataReader reader = query.ExecuteReader();

DataTable dt = new DataTable(table);

dt.Load(reader);

reader.Close();

object[][] result = dt.AsEnumerable().Select(x => x.ItemArray).ToArray();

TableSchemas.Add(table, result);

}

Google BigQuery table maker from schema looped per table:

var schemaBuilder = new TableSchemaBuilder();

foreach (var column in dictionaryOfSchemas[myTablename])

{

string columnType = column[1].ToString().ToLower();

schemaBuilder.Add(

column[0].ToString(),

columnType.Contains("varchar") ? BigQueryDbType.String :

(columnType.Contains("int") ? BigQueryDbType.Int64 :

(columnType.Contains("decimal") ? BigQueryDbType.Float64 :

(columnType.Contains("timestamp") ? BigQueryDbType.DateTime :

(columnType.Contains("datetime") ? BigQueryDbType.DateTime :

(columnType.Contains("date") ? BigQueryDbType.Date :

BigQueryDbType.String)))))

);

}

TableSchema schema = schemaBuilder.Build();

BigQueryTable newTable = bigquery.GetDataset(myDataset).CreateTable(myTablename, schema);

CSV to created GBQ table looped per table:

bigquery.CreateLoadJob(

$"gs://{myProjectIdString}/{myBucketNameString}/{myCSVFilename}",

newTable.Reference,

schema,

new CreateLoadJobOptions()

{

SourceFormat = FileFormat.Csv,

SkipLeadingRows = 1

}

).PollUntilCompleted();

No error shows up after running the CreateLoadJob method.

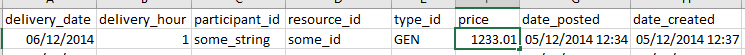

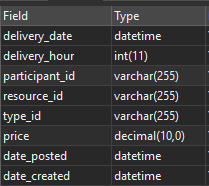

Schema of the new table seems to be good and matches the CSV and the MySQL table:

But there's still no data in the table:

Am I doing something wrong? I'm still learning Google services so any help and insight would be appreciated. :)

CodePudding user response:

There are a few things wrong here, but it's mostly to do with the data rather than with the code itself.

In terms of code, when a job has completed, it may still have completed with errors. You can call ThrowOnAnyError() to observe that. You can get detailed errors via job.Resource.Status.Errors. (I believe the first of those detailed errors is the one in job.Resource.Status.ErrorResults.)

Once the correct storage URL is provided (which would be observed that way as well) you'll see errors like this, with the CSV file you provided:

- Error while reading data, error message: CSV processing encountered too many errors, giving up. Rows: 1; errors: 1; max bad: 0; error percent: 0

- Could not parse '06/12/2014' as DATE for field delivery_date (position 0) starting at location 95 with message 'Unable to parse'

At that point, the problem is in your CSV file. There are two issues here:

- The date format is expected to be ISO-8601, e.g. "2014-06-12" rather than "06/12/2014" for example

- The date/time format is expected to include seconds as well, so "2014-05-12 12:37:00" rather than "05/12/2014 12:37" for example

Hopefully you're able to run a preprocessing job to fix the data in your CSV file.

This is assuming that the schema you've created is correct, of course - we can't tell that from your post, but here's the schema that worked for me:

var schema = new TableSchemaBuilder

{

{ "delivery_date", BigQueryDbType.Date },

{ "delivery_hour", BigQueryDbType.Int64 },

{ "participant_id", BigQueryDbType.String },

{ "resource_id", BigQueryDbType.String },

{ "type_id", BigQueryDbType.String },

{ "price", BigQueryDbType.Numeric },

{ "date_posted", BigQueryDbType.DateTime },

{ "date_created", BigQueryDbType.DateTime }

}.Build();

CodePudding user response:

After fiddling with my variables and links, I figured out that making a link to your bucket folder should be gs://<bucket_name>/<csv_filename> instead of gs://<project_id>/<bucket_name>/<csv_filename>.

My code's running ok for now and successfully transferred data.