My dataframe looks like this:

```
          v1           v2  distance
0         be       belong  0.666667
4   increase     decrease  0.666667
9    analyze        assay  0.666667
11   bespeak    circulate  0.769231
21    induce     generate  0.800000
24  decrease        delay  0.750000
26     cause         trip  0.666667
27   isolate  distinguish  0.750000
28      give       infect  0.666667
29    result        prove  0.800000
31  describe      explain  0.714286
33    report    circulate  0.666667
36    affect       expose  0.666667
40   explain    intercede  0.705882
41  suppress     restrict  0.833333
```

Here v1 and v2 are verbs and distance is their similarity. I want to create clusters of similar words based on which pairs appear together in the dataframe.

For example, the word circulate appears to be similar to both bespeak and report, so I would like a cluster of these 3 words. groupby doesn't help since the values are strings. Can someone help?

Answer:

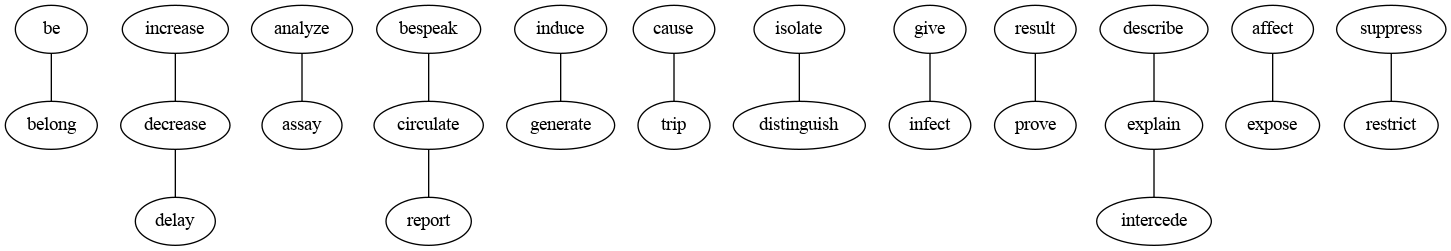

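This looks like a graph problem: treat each verb as a node and each row as an edge between v1 and v2. The clusters you want are then the connected components of that graph.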
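A minimal sketch with networkx (assumed to be installed): `nx.from_pandas_edgelist` turns the `v1`/`v2` columns into an undirected graph, and `nx.connected_components` yields each cluster as a set of words. The data is retyped from the question; `pairs` and `clusters` are just illustrative names.

```python
import networkx as nx
import pandas as pd

# The question's data, retyped from the post (illustrative variable name `pairs`)
pairs = [
    ('be', 'belong', 0.666667), ('increase', 'decrease', 0.666667),
    ('analyze', 'assay', 0.666667), ('bespeak', 'circulate', 0.769231),
    ('induce', 'generate', 0.800000), ('decrease', 'delay', 0.750000),
    ('cause', 'trip', 0.666667), ('isolate', 'distinguish', 0.750000),
    ('give', 'infect', 0.666667), ('result', 'prove', 0.800000),
    ('describe', 'explain', 0.714286), ('report', 'circulate', 0.666667),
    ('affect', 'expose', 0.666667), ('explain', 'intercede', 0.705882),
    ('suppress', 'restrict', 0.833333),
]
df = pd.DataFrame(pairs, columns=['v1', 'v2', 'distance'],
                  index=[0, 4, 9, 11, 21, 24, 26, 27, 28, 29, 31, 33, 36, 40, 41])

# Each verb becomes a node; each row becomes an undirected edge v1 -- v2,
# keeping the similarity as an edge attribute
G = nx.from_pandas_edgelist(df, source='v1', target='v2', edge_attr='distance')

# Each connected component is one cluster of words
clusters = [sorted(component) for component in nx.connected_components(G)]
for cluster in clusters:
    print(cluster)
```

Because components are transitive, describe and intercede land in the same cluster through explain even though they never share a row.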

A small function to plot the graph in Jupyter (`to_agraph` needs pygraphviz installed):

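```python
from IPython.display import Image
from networkx.drawing.nx_agraph import to_agraph  # requires pygraphviz


def nxplot(G):
    """Render a networkx graph with Graphviz's 'dot' layout and show it inline."""
    A = to_agraph(G)            # convert to a pygraphviz AGraph
    A.layout('dot')             # compute node positions with Graphviz 'dot'
    A.draw('/tmp/graph.png')    # write the rendered graph to a PNG file
    return Image(filename='/tmp/graph.png')
```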

Answer:

The following line selects only the rows that contain the string target_string in either verb column:

```python
rows = df[(df[['v1', 'v2']] == target_string).any(axis=1)]
```

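Then stack the two verb columns into one Series and keep the unique words (a DataFrame has no `.unique()`, so the columns have to be concatenated as Series):

```python
cluster = pd.concat([rows['v1'], rows['v2']]).unique()
```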
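As a quick check of these two steps, here they are run on a few of the question's rows (a subset chosen only to keep the example short) with target_string set to circulate, the word from the question's example:

```python
import pandas as pd

# A subset of the question's rows, enough to show the 'circulate' example
df = pd.DataFrame(
    {'v1': ['be', 'bespeak', 'induce', 'report'],
     'v2': ['belong', 'circulate', 'generate', 'circulate'],
     'distance': [0.666667, 0.769231, 0.800000, 0.666667]},
    index=[0, 11, 21, 33],
)

target_string = 'circulate'

# Rows where the target word appears in v1 or v2
rows = df[(df[['v1', 'v2']] == target_string).any(axis=1)]

# Stack both verb columns into one Series, then keep each word once
# (Series.unique preserves order of first appearance)
cluster = pd.concat([rows['v1'], rows['v2']]).unique()
print(list(cluster))  # → ['bespeak', 'report', 'circulate']
```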

If you want clusters for all the words, repeat this for every unique word. An inefficient example:

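```python
clusters = []

# Every distinct word from either column
for target_string in pd.concat([df['v1'], df['v2']]).unique():
    # Rows where the word appears in v1 or v2
    rows = df[(df[['v1', 'v2']] == target_string).any(axis=1)]
    # The word plus the words it is paired with
    clusters.append(pd.concat([rows['v1'], rows['v2']]).unique())
```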
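Note that this loop only collects each word's direct partners, so chains are not merged: describe is grouped with explain but not with intercede, and overlapping clusters repeat once per word. If you want transitive clusters without networkx, one option is a small union-find (disjoint-set) pass over the rows. This is a sketch under that assumption; `cluster_words` is a hypothetical helper, not a pandas function.

```python
import pandas as pd

# The question's data, retyped from the post
pairs = [
    ('be', 'belong', 0.666667), ('increase', 'decrease', 0.666667),
    ('analyze', 'assay', 0.666667), ('bespeak', 'circulate', 0.769231),
    ('induce', 'generate', 0.800000), ('decrease', 'delay', 0.750000),
    ('cause', 'trip', 0.666667), ('isolate', 'distinguish', 0.750000),
    ('give', 'infect', 0.666667), ('result', 'prove', 0.800000),
    ('describe', 'explain', 0.714286), ('report', 'circulate', 0.666667),
    ('affect', 'expose', 0.666667), ('explain', 'intercede', 0.705882),
    ('suppress', 'restrict', 0.833333),
]
df = pd.DataFrame(pairs, columns=['v1', 'v2', 'distance'],
                  index=[0, 4, 9, 11, 21, 24, 26, 27, 28, 29, 31, 33, 36, 40, 41])


def cluster_words(edges):
    """Hypothetical helper: group words into transitive clusters via union-find."""
    parent = {}

    def find(word):
        # Walk up to the representative (root) of word's cluster
        parent.setdefault(word, word)
        root = word
        while parent[root] != root:
            root = parent[root]
        # Path compression: re-point every word on the path at the root
        while parent[word] != root:
            next_word = parent[word]
            parent[word] = root
            word = next_word
        return root

    # Each row links its two verbs, merging their clusters
    for a, b in edges:
        parent[find(a)] = find(b)

    # Collect every word under its cluster's representative
    groups = {}
    for word in list(parent):
        groups.setdefault(find(word), []).append(word)
    return [sorted(group) for group in groups.values()]


clusters = cluster_words(zip(df['v1'], df['v2']))
for cluster in clusters:
    print(cluster)
```

Each word ends up in exactly one cluster, so there are no duplicates to clean up afterwards.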