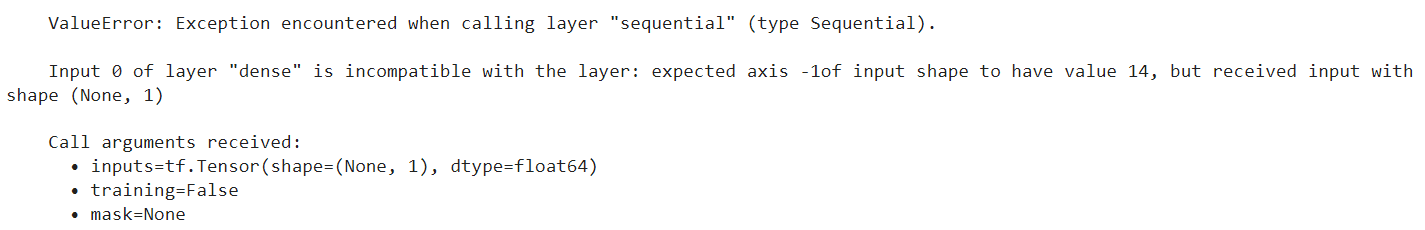

I trained an MLP neural network on my data. To optimize 4 of the input variables, I defined an objective function based on the MLP model and ran simulated annealing on that function. I don't know why I get this error (see the attached image).

Neural network code:

# mlp for regression
from numpy import sqrt
from pandas import read_csv
from sklearn.model_selection import train_test_split
import tensorflow
from tensorflow import keras
from tensorflow.keras import Sequential, regularizers
from tensorflow.keras.layers import Dense, Dropout
from matplotlib import pyplot

# determine the number of input features
n_features = X_train.shape[1]

# define model
model = Sequential()
model.add(Dense(150, activation='tanh', kernel_initializer='zero', kernel_regularizer=regularizers.l2(0.001), input_shape=(n_features,)))  # relu/softmax/tanh
model.add(Dense(100, activation='tanh', kernel_initializer='zero', kernel_regularizer=regularizers.l2(0.001)))
model.add(Dense(50, activation='tanh', kernel_initializer='zero', kernel_regularizer=regularizers.l2(0.001)))
model.add(Dropout(0.0))
model.add(Dense(1))

# compile the model
opt = keras.optimizers.Adam(learning_rate=0.001)
#opt = tensorflow.keras.optimizers.RMSprop(learning_rate=0.001,rho=0.9,momentum=0.0,epsilon=1e-07,centered=False,name="RMSprop")
model.compile(optimizer=opt, loss='mse')

# fit the model (validation_data overrides validation_split, so only validation_data is passed)
history = model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=100, batch_size=10, verbose=0)

# evaluate the model
error = model.evaluate(X_test, y_test, verbose=0)
print('MSE: %.3f, RMSE: %.3f' % (error, sqrt(error)))

# plot learning curves
pyplot.title('Learning Curves')
pyplot.xlabel('Epoch')
pyplot.ylabel('MSE loss')
pyplot.plot(history.history['loss'], label='train')
pyplot.plot(history.history['val_loss'], label='val')
pyplot.legend()
pyplot.show()

Function code:

import pandas as pd

def objective_function(X):
    wob = X[0]
    torque = X[1]
    RPM = X[2]
    pump = X[3]
    input = [wob, torque, RPM, 0.00017, 0.027, pump, 0, 0.5, 0.386, 0.026, 0.0119, 0.33, 0.83, 0.48]
    input = pd.DataFrame(input)
    obj = model.predict(input)
    return obj

Simulated annealing for optimization:

import time
import random
import math
import numpy as np

## custom section
initial_temperature = 100
cooling = 0.8  # cooling coef.
number_variables = 4
upper_bounds = [1, 1, 1, 1]
lower_bounds = [0, 0, 0, 0]
computing_time = 1  # seconds

## simulated annealing algorithm
## 1. generate an initial solution randomly
initial_solution = np.zeros((number_variables))
for v in range(number_variables):
    initial_solution[v] = random.uniform(lower_bounds[v], upper_bounds[v])

current_solution = initial_solution
best_solution = initial_solution
n = 1  # no. of solutions accepted
best_fitness = objective_function(best_solution)
current_temperature = initial_temperature  # current temperature
start = time.time()
no_attemps = 100  # number of attempts at each temperature level
record_best_fitness = []

for i in range(9999999):
    for j in range(no_attemps):
        for k in range(number_variables):
            ## 2. generate a candidate solution y randomly based on solution x
            current_solution[k] = best_solution[k] + 0.1*(random.uniform(lower_bounds[k], upper_bounds[k]))
            current_solution[k] = max(min(current_solution[k], upper_bounds[k]), lower_bounds[k])  # repair the solution so it respects the bounds

        ## 3. check if y is better than x
        current_fitness = objective_function(current_solution)
        E = abs(current_fitness - best_fitness)
        if i == 0 and j == 0:
            EA = E

        if current_fitness < best_fitness:
            p = math.exp(-E/(EA*current_temperature))
            # make a decision to accept the worse solution or not
            ## 4. make a decision whether r < p
            if random.random() < p:
                accept = True  # this worse solution is accepted
            else:
                accept = False  # this worse solution is not accepted
        else:
            accept = True  # accept better solution

        ## 5. make a decision whether stop condition of inner loop is met
        if accept == True:
            best_solution = current_solution  # update the best solution
            best_fitness = objective_function(best_solution)
            n = n + 1  # count the solutions accepted
            EA = (EA*(n-1) + E)/n  # update EA

    print('iteration: {}, best_solution: {}, best_fitness: {}'.format(i, best_solution, best_fitness))
    record_best_fitness.append(best_fitness)

    ## 6. decrease the temperature
    current_temperature = current_temperature * cooling

    ## 7. stop condition of outer loop is met
    end = time.time()
    if end-start >= computing_time:
        break

Answer:

The error comes from your input shape. The MLP expects input of shape [None, 14], but the DataFrame built in your function has shape [14, 1], so you need to transpose it before calling predict.
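The mismatch can be reproduced without the model at all. The sketch below is only an illustration: it reuses the ten constants from the question and fills the four optimized variables with a placeholder value of 0.5 (an assumption, not a value from the original post).

```python
import numpy as np
import pandas as pd

# 14 feature values for one sample. The 0.5 entries are placeholders
# for wob, torque, RPM and pump; the rest are the question's constants.
features = [0.5, 0.5, 0.5, 0.00017, 0.027, 0.5, 0, 0.5,
            0.386, 0.026, 0.0119, 0.33, 0.83, 0.48]

# A flat list becomes a single column: 14 rows, 1 feature each.
as_column = pd.DataFrame(features)
print(as_column.shape)

# Transposing gives one row with 14 features, i.e. one sample.
as_row = as_column.T
print(as_row.shape)

# Equivalent NumPy construction that skips the DataFrame entirely.
as_row_np = np.asarray(features, dtype=float).reshape(1, -1)
print(as_row_np.shape)
```

Either the transposed DataFrame or the reshaped array gives the network a single sample with 14 features, which is the layout it was trained on.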

import pandas as pd

def objective_function(X):
    wob = X[0]
    torque = X[1]
    RPM = X[2]
    pump = X[3]
    input = [wob, torque, RPM, 0.00017, 0.027, pump, 0, 0.5, 0.386, 0.026, 0.0119, 0.33, 0.83, 0.48]
    input = pd.DataFrame(input)
    input = input.T  # shape (1, 14): one sample with 14 features
    obj = model.predict(input)
    return obj
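Separately from the shape fix, it can help to check the annealing loop on its own, without the network. The following is a minimal sketch under stated assumptions, not the code from the question: `stand_in_objective` and `anneal` are hypothetical names, the objective is a cheap quadratic with a known maximum, and acceptance uses the plain Metropolis rule rather than the normalized-energy variant above. It also copies arrays explicitly, so the best solution is never overwritten when a candidate is perturbed. The default temperature is chosen for this stand-in and would need tuning to the scale of a real objective.

```python
import math
import random

import numpy as np


def stand_in_objective(x):
    """Hypothetical objective: maximum value 0 at x = 0.5 in every coordinate."""
    return -float(np.sum((np.asarray(x, dtype=float) - 0.5) ** 2))


def anneal(objective, lower, upper, temperature=1.0, cooling=0.8,
           attempts=100, levels=40, step=0.1, seed=0):
    """Maximize `objective` inside the box [lower, upper]."""
    rng = random.Random(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    span = upper - lower

    # Random starting point inside the bounds.
    current = np.array([rng.uniform(lo, hi) for lo, hi in zip(lower, upper)])
    current_fitness = objective(current)
    best, best_fitness = current.copy(), current_fitness

    for _ in range(levels):
        for _ in range(attempts):
            # Build the candidate as a new array, then clip it to the bounds.
            noise = np.array([rng.uniform(-1.0, 1.0) for _ in range(len(lower))])
            candidate = np.clip(current + step * span * noise, lower, upper)
            candidate_fitness = objective(candidate)
            delta = candidate_fitness - current_fitness

            # Accept improvements always, worse moves with probability exp(delta / T).
            if delta >= 0 or rng.random() < math.exp(delta / temperature):
                current, current_fitness = candidate, candidate_fitness
                if current_fitness > best_fitness:
                    best, best_fitness = current.copy(), current_fitness

        # Lower the temperature after each batch of attempts.
        temperature *= cooling

    return best, best_fitness


best, best_fitness = anneal(stand_in_objective, [0, 0, 0, 0], [1, 1, 1, 1])
print(best, best_fitness)
```

To optimize the network instead, one option is to pass a wrapper that builds the (1, 14) row from the four variables and returns `float(model.predict(row)[0, 0])`, where `model` is the network from the question. Since each `predict` call is comparatively slow, the number of attempts per level may need to be reduced.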