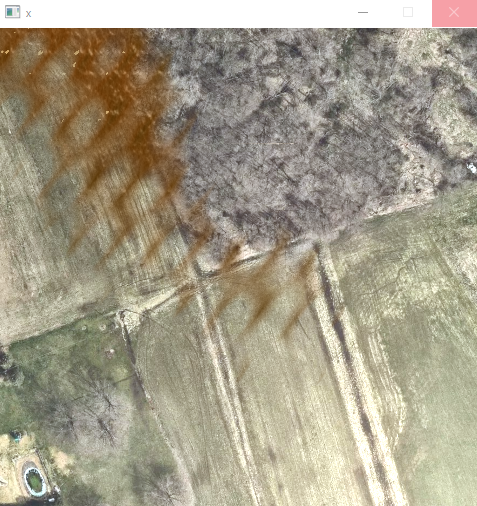

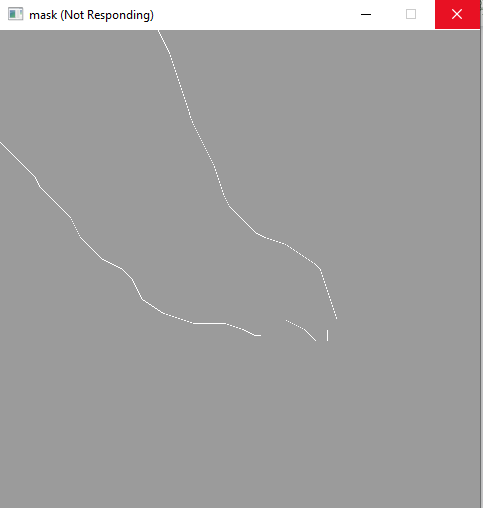

I have generated a synthetic semantic image segmentation dataset using Unity. I have noticed that the shader I used seems to have averaged the pixel values of my classes along the borders in some cases. For example, the first image is an input X generated by Unity and the second image is my Y generated by Unity. The issue is that pixel values are averaged between the classes. The third picture is a mask where the white pixels were not found in the key set of mask values and the grey pixels are in the key set.

I have 6 classes in total, so it's not as simple as just assigning to one class. My question is: does anyone know of an efficient way to fill these values based on the nearest valid neighbors?
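To illustrate why a border pixel cannot simply be snapped to the closest key colour, here is a small sketch using the key set from the question. It assumes the shader does a plain per-channel average of two neighbouring class colours, which is only a guess: a pixel halfway between red (class 0) and yellow (class 5) ends up closest in RGB to orange (class 2), a class that belongs to neither side of the border.

```python
import numpy as np

# Colour key from the question: RGB -> class id
mask2class_encoding = {(255, 0, 0): 0, (45, 45, 45): 1, (255, 90, 0): 2,
                       (0, 0, 255): 3, (111, 63, 12): 4, (255, 255, 0): 5}

keys = np.array(list(mask2class_encoding.keys()), dtype=float)  # shape (6, 3)
classes = np.array(list(mask2class_encoding.values()))

# Assumed border pixel: the average of class 0 (red) and class 5 (yellow)
blended = (np.array([255, 0, 0]) + np.array([255, 255, 0])) / 2  # (255, 127.5, 0)

# Snapping to the nearest key colour (Euclidean distance in RGB) picks class 2,
# which appears on neither side of this border
nearest = classes[np.argmin(np.linalg.norm(keys - blended, axis=1))]
print(blended, nearest)
```

This is why filling from spatial neighbours, rather than from the nearest colour, is the safer choice.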

Here is my related code:

```python
import os

import numpy as np
from tensorflow import keras as k  # assumption: `k` is the Keras module

# Runs inside a method of my data loader class, hence `self`
ix = k.preprocessing.image.load_img(os.path.join(self.x_path, imgFile))
ix = k.preprocessing.image.img_to_array(ix)
iy = k.preprocessing.image.load_img(os.path.join(self.y_path, imgFile))
iy = k.preprocessing.image.img_to_array(iy)

# mask2class_encoding = {(255, 0, 0): 0, (45, 45, 45): 1, (255, 90, 0): 2,
#                        (0, 0, 255): 3, (111, 63, 12): 4, (255, 255, 0): 5}

# Some pixels didn't encode: Unity averages RGB values along class edges.
# Flag every pixel whose colour matches none of the keys (True = invalid).
# This must run BEFORE encoding, because encoding overwrites the RGB values
# in place and no encoded pixel would match a key afterwards.
foobarMask = np.ones(iy.shape[:2], dtype=bool)
for mask_val in self.dataSet.mask2class_encoding:
    foobarMask &= np.any(iy != mask_val, axis=2)

# Encode all of the RGB values to a class number
for mask_val, enc in self.dataSet.mask2class_encoding.items():
    iy[np.all(iy == mask_val, axis=2)] = enc
```
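A sketch of an alternative encoding step that avoids the in-place overwrite altogether by writing the class ids into a separate single-channel array. `encode_mask` is a hypothetical helper (not part of Keras or NumPy), and the 1x3 test image is made up for illustration.

```python
import numpy as np


def encode_mask(rgb, mask2class_encoding):
    """Hypothetical helper: map an (H, W, 3) RGB mask to an (H, W) class map.

    Returns (labels, invalid), where invalid is True for pixels whose colour
    is not in mask2class_encoding (e.g. colours averaged along class borders).
    """
    labels = np.full(rgb.shape[:2], -1, dtype=np.int64)  # -1 = no class found
    for colour, cls in mask2class_encoding.items():
        # Always compare against the original RGB array, which is never modified
        labels[np.all(rgb == colour, axis=-1)] = cls
    invalid = labels == -1
    return labels, invalid


mask2class_encoding = {(255, 0, 0): 0, (45, 45, 45): 1, (255, 90, 0): 2,
                       (0, 0, 255): 3, (111, 63, 12): 4, (255, 255, 0): 5}

# Made-up 1x3 mask: red, a blended red/yellow pixel, yellow
rgb = np.array([[[255, 0, 0], [255, 128, 0], [255, 255, 0]]], dtype=np.float32)
labels, invalid = encode_mask(rgb, mask2class_encoding)
print(labels)
print(invalid)
```

Keeping the labels in their own (H, W) array means `labels == -1` is already the invalid mask, so no second pass over the key set is needed.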

Now I basically want to do some kind of nearest-neighbor interpolation based on the mask, but I'm stuck on how to do this. Any help is greatly appreciated. Bonus points if there is a way to stop the Unity shader from doing this in the first place!

Answer:

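It turns out this is fairly simple. `scipy.ndimage.distance_transform_edt` with `return_indices=True` returns, for every pixel, the coordinates of the nearest zero-valued (background) pixel. Since `foobarMask` is `True` only where the colour is invalid, the valid pixels are the background, so indexing `iy` with those coordinates copies the nearest valid pixel into every invalid one, while valid pixels map to themselves: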

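```python
import scipy.ndimage as nd

# For each pixel, the (row, col) of the nearest pixel where foobarMask is False
indices = nd.distance_transform_edt(foobarMask, return_distances=False, return_indices=True)

# Copy the nearest valid pixel into every invalid one; valid pixels map to themselves
iy = iy[tuple(indices)]
```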
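A self-contained toy run of the same `distance_transform_edt` lookup, on a made-up 4x4 class map where `-1` stands in for the averaged border pixels. Every pixel in this toy border is equally close to a class 0 and a class 5 pixel, and which one wins such a tie is not something to rely on; the mask also needs at least one valid pixel for the lookup to be meaningful.

```python
import numpy as np
import scipy.ndimage as nd

# Made-up 4x4 class map: class 0 on the left, class 5 on the right,
# and -1 for "invalid" (averaged) pixels along the border between them
labels = np.array([[0, 0, -1, 5],
                   [0, 0, -1, 5],
                   [0, -1, 5, 5],
                   [0, -1, 5, 5]])
invalid = labels == -1  # True where the value must be filled in

# For every pixel, the (row, col) of the nearest pixel where invalid is False;
# valid pixels are their own nearest valid pixel
indices = nd.distance_transform_edt(invalid, return_distances=False, return_indices=True)
filled = labels[tuple(indices)]
print(filled)

# The same lookup on a 3-channel array: only the first two axes are indexed
rgb_like = np.stack([labels] * 3, axis=-1)  # shape (4, 4, 3)
filled_rgb = rgb_like[tuple(indices)]
```

Because `tuple(indices)` only indexes the first two axes, the same call works on the (H, W, 3) `iy` array from the question, as the last two lines show.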