I was looking at the activation maps of VGG19 in PyTorch and found that all values in the maps are positive, even before the ReLU is applied.

This seems very strange to me. If this were correct, why would one apply a ReLU at all? Or could it be that I am not using the `register_forward_hook` method correctly?

Here is the code that produces this:

import torch

import torchvision

import torchvision.models as models

import torchvision.transforms as transforms

from torchsummary import summary

import os, glob

import matplotlib.pyplot as plt

import numpy as np

# settings:

batch_size = 4

# load the model

model = models.vgg19(pretrained=True)

summary(model.cuda(), (3, 32, 32))

model.cpu()

# how to preprocess??? See here:

# https://discuss.pytorch.org/t/how-to-preprocess-input-for-pre-trained-networks/683/2

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

transform = transforms.Compose(

[transforms.ToTensor(),

normalize])

# build data loader

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,

download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=batch_size,

shuffle=True, num_workers=2)

# show one image

dataiter = iter(trainloader)

images, labels = dataiter.next()

# set a hook

activation = {}

def get_activation(name):

def hook(model, input, output):

activation[name] = output.detach()

return hook

# hook at the first conv layer

hook = model.features[0].register_forward_hook(get_activation("firstConv"))

model(images)

hook.remove()

# show results:

flatted_feat_maps = activation["firstConv"].detach().numpy().flatten()

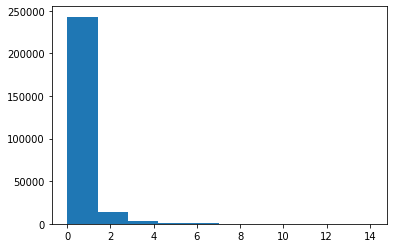

print("All positiv??? --> ",np.all(flatted_feat_maps >= 0))

plt.hist(flatted_feat_maps)

plt.show()
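Because the effect comes from storage sharing rather than from the pretrained weights, it can be reproduced without downloading VGG19. The sketch below uses a hypothetical stand-in: a randomly initialised two-layer `nn.Sequential` (a convolution followed by `nn.ReLU(inplace=True)`, the pairing torchvision's VGG uses), hooked the same way as above and storing only `output.detach()`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the first two layers of VGG19's `features`:
# a convolution followed by an in-place ReLU, with random weights.
features = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(inplace=True),
)

activation = {}

def get_activation(name):
    def hook(module, inputs, output):
        # detach() alone: the stored tensor still shares storage with `output`
        activation[name] = output.detach()
    return hook

handle = features[0].register_forward_hook(get_activation("firstConv"))
with torch.no_grad():
    out = features(torch.randn(4, 3, 32, 32))
handle.remove()

# The in-place ReLU ran after the hook and overwrote the shared storage,
# so the "pre-ReLU" activation is the same memory as the ReLU output.
print(bool((activation["firstConv"] >= 0).all()))    # True
print(torch.equal(activation["firstConv"], out))     # True
```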

The `summary` call prints:

```text
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [-1, 64, 32, 32]           1,792
              ReLU-2           [-1, 64, 32, 32]               0
            Conv2d-3           [-1, 64, 32, 32]          36,928
              ReLU-4           [-1, 64, 32, 32]               0
         MaxPool2d-5           [-1, 64, 16, 16]               0
            Conv2d-6          [-1, 128, 16, 16]          73,856
              ReLU-7          [-1, 128, 16, 16]               0
            Conv2d-8          [-1, 128, 16, 16]         147,584
              ReLU-9          [-1, 128, 16, 16]               0
        MaxPool2d-10            [-1, 128, 8, 8]               0
           Conv2d-11            [-1, 256, 8, 8]         295,168
             ReLU-12            [-1, 256, 8, 8]               0
           Conv2d-13            [-1, 256, 8, 8]         590,080
             ReLU-14            [-1, 256, 8, 8]               0
           Conv2d-15            [-1, 256, 8, 8]         590,080
             ReLU-16            [-1, 256, 8, 8]               0
           Conv2d-17            [-1, 256, 8, 8]         590,080
             ReLU-18            [-1, 256, 8, 8]               0
        MaxPool2d-19            [-1, 256, 4, 4]               0
           Conv2d-20            [-1, 512, 4, 4]       1,180,160
             ReLU-21            [-1, 512, 4, 4]               0
           Conv2d-22            [-1, 512, 4, 4]       2,359,808
             ReLU-23            [-1, 512, 4, 4]               0
           Conv2d-24            [-1, 512, 4, 4]       2,359,808
             ReLU-25            [-1, 512, 4, 4]               0
           Conv2d-26            [-1, 512, 4, 4]       2,359,808
             ReLU-27            [-1, 512, 4, 4]               0
        MaxPool2d-28            [-1, 512, 2, 2]               0
           Conv2d-29            [-1, 512, 2, 2]       2,359,808
             ReLU-30            [-1, 512, 2, 2]               0
           Conv2d-31            [-1, 512, 2, 2]       2,359,808
             ReLU-32            [-1, 512, 2, 2]               0
           Conv2d-33            [-1, 512, 2, 2]       2,359,808
             ReLU-34            [-1, 512, 2, 2]               0
           Conv2d-35            [-1, 512, 2, 2]       2,359,808
             ReLU-36            [-1, 512, 2, 2]               0
        MaxPool2d-37            [-1, 512, 1, 1]               0
AdaptiveAvgPool2d-38            [-1, 512, 7, 7]               0
           Linear-39                 [-1, 4096]     102,764,544
             ReLU-40                 [-1, 4096]               0
          Dropout-41                 [-1, 4096]               0
           Linear-42                 [-1, 4096]      16,781,312
             ReLU-43                 [-1, 4096]               0
          Dropout-44                 [-1, 4096]               0
           Linear-45                 [-1, 1000]       4,097,000
================================================================
Total params: 143,667,240
Trainable params: 143,667,240
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.01
Forward/backward pass size (MB): 5.25
Params size (MB): 548.05
Estimated Total Size (MB): 553.31
----------------------------------------------------------------
```

Could it be that I somehow did not use `register_forward_hook` correctly?

Answer:

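You should clone the output in the hook:

```python
def get_activation(name):
    def hook(model, input, output):
        # clone() makes an independent copy, so the in-place ReLU that runs
        # after this layer cannot overwrite the stored activation
        activation[name] = output.detach().clone()
    return hook
```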
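A minimal side-by-side check of this fix, using a hypothetical randomly initialised conv + `nn.ReLU(inplace=True)` stand-in instead of the real VGG19 (the dictionary keys `"shared"` and `"cloned"` are illustrative). The hook stores both `output.detach()` and `output.detach().clone()` from the same forward pass:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for VGG19's first conv layer and the in-place ReLU after it.
features = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(inplace=True),
)

activation = {}

def hook(module, inputs, output):
    activation["shared"] = output.detach()          # same storage as output
    activation["cloned"] = output.detach().clone()  # own copy, taken before the ReLU runs

handle = features[0].register_forward_hook(hook)
with torch.no_grad():
    features(torch.randn(4, 3, 32, 32))
handle.remove()

# The detached view was overwritten by the in-place ReLU; the clone was not.
print("min of detached view:", activation["shared"].min().item())
print("min of clone:        ", activation["cloned"].min().item())
# Applying ReLU to the clone reproduces what the in-place ReLU left behind.
print(torch.equal(torch.relu(activation["cloned"]), activation["shared"]))
```

With the clone, the histogram of the first conv layer should show negative values as well, which is exactly what the subsequent ReLU then zeroes out.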

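Note that `Tensor.detach` only detaches the tensor from the autograd graph; both tensors still share the same underlying storage. In VGG19 the layer right after the first convolution is `nn.ReLU(inplace=True)`, which overwrites the convolution output in place after your hook has run, so the tensor you stored ends up holding the post-ReLU values.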
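The storage sharing can be checked directly on a small tensor; here `relu_()` stands in for the in-place ReLU that runs after VGG19's first convolution.

```python
import torch

x = torch.tensor([-1.0, 2.0, -3.0])
d = x.detach()          # new tensor object, same underlying storage
c = x.detach().clone()  # new tensor with its own storage

print(d.data_ptr() == x.data_ptr())  # True: same memory
print(c.data_ptr() == x.data_ptr())  # False: independent copy

x.relu_()            # in-place ReLU on the original tensor
print(d.tolist())    # [0.0, 2.0, 0.0]: the detached tensor sees the change
print(c.tolist())    # [-1.0, 2.0, -3.0]: the clone keeps the pre-ReLU values
```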

From the `Tensor.detach` documentation:

> Returned Tensor shares the same storage with the original one. In-place modifications on either of them will be seen, and may trigger errors in correctness checks.
>
> IMPORTANT NOTE: Previously, in-place size / stride / storage changes (such as `resize_` / `resize_as_` / `set_` / `transpose_`) to the returned tensor also update the original tensor. Now, these in-place changes will not update the original tensor anymore, and will instead trigger an error. For sparse tensors: In-place indices / values changes (such as `zero_` / `copy_` / `add_`) to the returned tensor will not update the original tensor anymore, and will instead trigger an error.
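The "correctness checks" mentioned there are autograd's version-counter checks: a detached tensor shares its version counter with the original, so an in-place edit made through it can break a later backward pass. A minimal sketch, using `torch.exp` only as an example of an operation whose backward reuses its saved output:

```python
import torch

a = torch.randn(3, requires_grad=True)
y = a.exp()            # autograd saves y itself for the backward pass

y.detach().zero_()     # in-place change through a detached tensor bumps y's version counter

try:
    y.sum().backward()
    error = None
except RuntimeError as e:
    error = e          # autograd detects that a saved tensor was modified in place

print(error is not None)  # True
```

In the VGG19 case this check does not fire, because the convolution's backward does not need its own output; the in-place ReLU silently changes what the hook stored instead.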