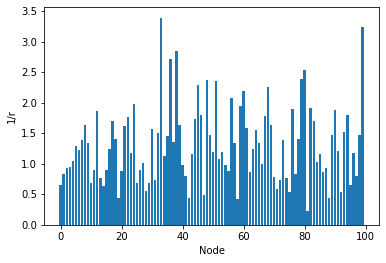

I have a matrix and I plotted all the elements of the matrix as shown below. How do I check if the elements follow a log-normal distribution?

import numpy as np
import matplotlib.pyplot as plt

inv_r=np.array([[0.64823518, 0.83720202, 0.93563635, 0.94477343, 1.05253592,
                 1.28367679, 1.22086603, 1.3869073 , 1.63835474, 1.3472428 ],
                [0.67779819, 0.89049499, 1.87163307, 0.76616821, 0.63859816,
                 0.88970981, 1.24933222, 1.70244884, 1.41288773, 0.4352665 ],
                [0.87478114, 1.61466754, 1.76209121, 1.18354069, 1.98489809,
                 0.68434697, 0.89164925, 1.01179243, 0.55344252, 0.67770014],
                [1.57398937, 0.73402648, 1.50853046, 3.39180481, 1.12097252,
                 1.44690537, 2.71361645, 1.35833663, 2.8387416 , 1.63599491],
                [0.98352121, 0.8034909 , 0.43440047, 1.15819716, 1.72482182,
                 2.29668575, 1.79702295, 0.48908485, 2.3722099 , 1.46943279],
                [1.185387 , 2.36026176, 1.08328055, 1.18599108, 0.97528197,
                 0.8820969 , 2.08205908, 1.34425083, 0.4146258 , 1.95035103],
                [2.19711571, 1.58786973, 0.87048445, 1.24324117, 1.55456239,
                 1.34691756, 1.00360813, 1.77622545, 2.25403217, 1.63541101],
                [0.77615636, 0.58180174, 0.74028442, 1.39470309, 0.76768667,
                 0.54203086, 1.89078838, 0.82748047, 1.39862745, 2.39547037],
                [2.53200407, 0.23182676, 1.91493715, 1.69375212, 1.02297358,
                 1.15553262, 0.86335816, 0.93200029, 0.43445186, 1.47100631],
                [1.88479544, 1.21261474, 0.53477358, 1.51359341, 1.79260722,
                 0.64794657, 1.17978372, 0.80116473, 1.46847931, 3.24720226]]).flatten()

nodes=np.arange(len(inv_r))
plt.bar(nodes, inv_r)
plt.xlabel('Node')
plt.ylabel('1/r')
plt.show()

CodePudding user response:

Assuming you have a concrete theoretical distribution to check against (i.e., the parameters of the log-normal distribution), you can use a one-sample Kolmogorov-Smirnov test.

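scipy.stats.kstest can do that. Note that 'lognorm' has a required shape parameter, so the parameters of the hypothesised distribution (s, loc, scale) must be passed through args, something like:

stats.kstest(data, 'lognorm', args=(s, loc, scale))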

If the test results in a p-value smaller than your accepted level of significance, you can reject the hypothesis of the data being generated from that log-normal. A larger p-value only means the test found no evidence against it.
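A minimal sketch of that test, under stated assumptions: the sample here is synthetic stand-in data rather than the question's inv_r, and mu and sigma are hypothetical parameters assumed to be known in advance. It also shows how the usual log-normal parameters map onto scipy's (s, loc, scale) convention.

```python
import numpy as np
from scipy import stats

# Stand-in data (assumption): replace with your own flattened array, e.g. inv_r.
rng = np.random.default_rng(0)
mu, sigma = 0.2, 0.5          # hypothesised mean and std of log(data)
data = rng.lognormal(mean=mu, sigma=sigma, size=100)

# scipy's lognorm uses s = sigma, loc = 0, scale = exp(mu).
result = stats.kstest(data, 'lognorm', args=(sigma, 0, np.exp(mu)))

alpha = 0.05                  # chosen significance level
reject = result.pvalue < alpha
print(f"statistic={result.statistic:.3f}, pvalue={result.pvalue:.3f}, reject={reject}")
```

If the parameters are estimated from the same data instead of being fixed beforehand, the p-value from this test tends to be too optimistic, so it should be read with caution.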

CodePudding user response:

You can generate a random sample which follows the required distribution, e.g., the log-normal as in your case, and then use one of two options:

Calculate the Kullback-Leibler divergence with kl_div from the scipy.special module, which measures how much one distribution differs from a known reference distribution. I.e., if they are similar, the value will be small, and as they differ, the output value will be larger. Keep in mind that kl_div works elementwise and is defined for probabilities, so it is most meaningful on normalized histograms of the samples rather than on the raw values.

Alternatively, you can use the Kolmogorov-Smirnov test, which computes the maximum difference between the empirical cumulative distribution functions of the two samples and outputs a p-value: the probability of seeing a difference at least that large if both samples came from the same distribution.

It may be done as follows:

import numpy as np

import scipy.special

inv_r=np.array([[0.64823518, 0.83720202, 0.93563635, 0.94477343, 1.05253592, 1.28367679, 1.22086603, 1.3869073 , 1.63835474, 1.3472428 ], [0.67779819, 0.89049499, 1.87163307, 0.76616821, 0.63859816, 0.88970981, 1.24933222, 1.70244884, 1.41288773, 0.4352665 ], [0.87478114, 1.61466754, 1.76209121, 1.18354069, 1.98489809, 0.68434697, 0.89164925, 1.01179243, 0.55344252, 0.67770014], [1.57398937, 0.73402648, 1.50853046, 3.39180481, 1.12097252, 1.44690537, 2.71361645, 1.35833663, 2.8387416 , 1.63599491], [0.98352121, 0.8034909 , 0.43440047, 1.15819716, 1.72482182, 2.29668575, 1.79702295, 0.48908485, 2.3722099 , 1.46943279], [1.185387 , 2.36026176, 1.08328055, 1.18599108, 0.97528197, 0.8820969 , 2.08205908, 1.34425083, 0.4146258 , 1.95035103], [2.19711571, 1.58786973, 0.87048445, 1.24324117, 1.55456239, 1.34691756, 1.00360813, 1.77622545, 2.25403217, 1.63541101], [0.77615636, 0.58180174, 0.74028442, 1.39470309, 0.76768667, 0.54203086, 1.89078838, 0.82748047, 1.39862745, 2.39547037], [2.53200407, 0.23182676, 1.91493715, 1.69375212, 1.02297358, 1.15553262, 0.86335816, 0.93200029, 0.43445186, 1.47100631], [1.88479544, 1.21261474, 0.53477358, 1.51359341, 1.79260722, 0.64794657, 1.17978372, 0.80116473, 1.46847931, 3.24720226]]).flatten()

log_norm_dist = np.random.lognormal(0, 1, 100)

KL-Divergence:

scipy.special.kl_div(log_norm_dist, inv_r).mean()

Output: 1.69

scipy.special.kl_div(log_norm_dist, log_norm_dist).mean()

Output: 0.0

scipy.special.kl_div(inv_r, inv_r).mean()

Output: 0.0
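The calls above apply kl_div position by position to the raw sample values, so the result depends on the order of the elements. A hedged alternative sketch is to bin both samples on shared edges, normalize the counts into probabilities, and sum the relative entropy. The helper name histogram_kl, the bin count, the smoothing constant eps, and the synthetic samples are all assumptions made for illustration.

```python
import numpy as np
from scipy.special import rel_entr

def histogram_kl(x, y, bins=20, eps=1e-9):
    """Approximate KL(P_x || P_y) from two samples via shared-bin histograms."""
    edges = np.histogram_bin_edges(np.concatenate([x, y]), bins=bins)
    p, _ = np.histogram(x, bins=edges)
    q, _ = np.histogram(y, bins=edges)
    # Smooth empty bins slightly so the log ratio stays finite, then normalize.
    p = (p + eps) / (p + eps).sum()
    q = (q + eps) / (q + eps).sum()
    return rel_entr(p, q).sum()

# Synthetic stand-in samples (assumption): two log-normal draws and one normal draw.
rng = np.random.default_rng(0)
sample_a = rng.lognormal(0.0, 1.0, 1000)
sample_b = rng.lognormal(0.0, 1.0, 1000)
sample_c = rng.normal(5.0, 1.0, 1000)

kl_same = histogram_kl(sample_a, sample_b)   # same underlying distribution
kl_diff = histogram_kl(sample_a, sample_c)   # different distributions
print(kl_same, kl_diff)
```

The value depends on the number of bins and on eps, so it is better suited to comparing candidates against each other than to reading as an absolute score.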

KS-Test:

from scipy.stats import kstest

kstest(log_norm_dist, inv_r)

Output: KstestResult(statistic=0.2, pvalue=0.03638428787491733)

kstest(log_norm_dist, log_norm_dist)

Output: KstestResult(statistic=0.0, pvalue=1.0)

kstest(inv_r, inv_r)

Output: KstestResult(statistic=0.0, pvalue=1.0)
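The result of the two-sample test depends on which log-normal the reference sample is drawn from; lognormal(0, 1) is only one choice. A sketch of one possible variation, assuming synthetic stand-in data in place of inv_r: estimate the parameters from the logarithm of the data, draw a reference sample with them, and compare the two with scipy.stats.ks_2samp.

```python
import numpy as np
from scipy import stats

# Stand-in data (assumption): replace with your own flattened array, e.g. inv_r.
rng = np.random.default_rng(0)
data = rng.lognormal(0.2, 0.5, 100)

# If data is log-normal, log(data) is normal with these parameters.
log_data = np.log(data)
mu_hat = log_data.mean()
sigma_hat = log_data.std(ddof=1)

# Reference sample drawn from the fitted log-normal.
reference = rng.lognormal(mu_hat, sigma_hat, 1000)

# Two-sample KS test: a small p-value suggests the samples differ in distribution.
result = stats.ks_2samp(data, reference)
print(f"mu_hat={mu_hat:.3f}, sigma_hat={sigma_hat:.3f}, pvalue={result.pvalue:.3f}")
```

Because the reference parameters come from the data being tested, a large p-value here is weak evidence at best, and the outcome also varies with the random draw of the reference sample.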