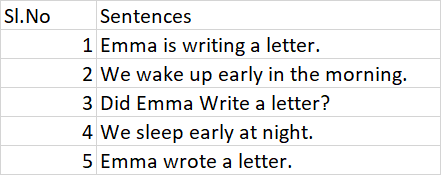

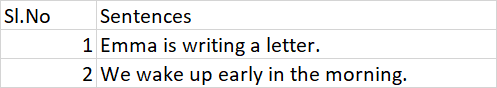

I have two CSV, one is the Master-Data and the other is the Component-Data, Master-Data has Two Rows and two columns, where as Component-Data has 5 rows and two Columns.

I'm trying to find the cosine-similarity between each of them after Tokenization, Stemming and Lemmatization and then append the similarity index to the new columns, I'm unable to append the corresponding values to the column in the data-frame which is further needs to be converted to CSV.

My Approach:

import re

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer,WordNetLemmatizer

from collections import Counter

import pandas as pd

portStemmer=PorterStemmer()

wordNetLemmatizer = WordNetLemmatizer()

fields = ['Sentences']

cosineSimilarityList = []

def fetchLemmantizedWords():

eliminatePunctuation = re.sub('[^a-zA-Z]', ' ',value)

convertLowerCase = eliminatePunctuation.lower()

tokenizeData = convertLowerCase.split()

eliminateStopWords = [word for word in tokenizeData if not word in set(stopwords.words('english'))]

stemWords= list(set([portStemmer.stem(value) for value in eliminateStopWords]))

wordLemmatization = [wordNetLemmatizer.lemmatize(x) for x in stemWords]

return wordLemmatization

def fetchCosine(eachMasterData,eachComponentData):

masterDataValues = Counter(eachMasterData)

componentDataValues = Counter(eachComponentData)

bagOfWords = list(masterDataValues.keys() | componentDataValues.keys())

masterDataVector = [masterDataValues.get(bagOfWords, 0) for bagOfWords in bagOfWords]

componentDataVector = [componentDataValues.get(bagOfWords, 0) for bagOfWords in bagOfWords]

masterDataLength = sum(contractElement*contractElement for contractElement in masterDataVector) ** 0.5

componentDataLength = sum(questionElement*questionElement for questionElement in componentDataVector) ** 0.5

dotProduct = sum(contractElement*questionElement for contractElement,questionElement in zip(masterDataVector, componentDataVector))

cosine = int((dotProduct / (masterDataLength * componentDataLength))*100)

return cosine

masterData = pd.read_csv('C:\\Similarity\\MasterData.csv', skipinitialspace=True)

componentData = pd.read_csv('C:\\Similarity\\ComponentData.csv', skipinitialspace=True)

for value in masterData['Sentences']:

eachMasterData = fetchLemmantizedWords()

for value in componentData['Sentences']:

eachComponentData = fetchLemmantizedWords()

cosineSimilarity = fetchCosine(eachMasterData,eachComponentData)

cosineSimilarityList.append(cosineSimilarity)

for value in cosineSimilarityList:

componentData = componentData.append(pd.DataFrame(cosineSimilarityList, columns=['Cosine Similarity']), ignore_index=True)

#componentData['Cosine Similarity'] = value

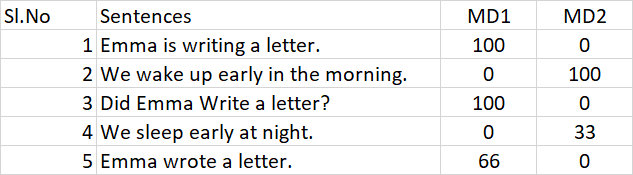

expected output after converting the df to CSV,

Facing issues while appending the values to the Data-frame, Please assist me with an approach for this. Thanks.

CodePudding user response:

Here's what I came up with:

Sample set up

csv_master_data = \

"""

SI.No;Sentences

1;Emma is writing a letter.

2;We wake up early in the morning.

"""

csv_component_data = \

"""

SI.No;Sentences

1;Emma is writing a letter.

2;We wake up early in the morning.

3;Did Emma Write a letter?

4;We sleep early at night.

5;Emma wrote a letter.

"""

import pandas as pd

from io import StringIO

df_md = pd.read_csv(StringIO(csv_master_data), delimiter=';')

df_cd = pd.read_csv(StringIO(csv_component_data), delimiter=';')

We end up with 2 dataframes (showing df_cd):

| SI.No | Sentences | |

|---|---|---|

| 0 | 1 | Emma is writing a letter. |

| 1 | 2 | We wake up early in the morning. |

| 2 | 3 | Did Emma Write a letter? |

| 3 | 4 | We sleep early at night. |

| 4 | 5 | Emma wrote a letter. |

I replaced the 2 functions you used by the following dummy functions:

import random

def fetchLemmantizedWords(words):

return [random.randint(1,30) for x in words]

def fetchCosine(lem_md, lem_cd):

return 100 if len(lem_md) == len(lem_cd) else random.randint(0,100)

Processing data

First, we apply the fetchLemmantizedWords function on each dataframe. The regex replace, lowercase and split of the sentences is done by Pandas instead of doing them in the function itself.

By making the sentence lowercase first, we can simplify the regex to only consider lowercase letters.

for df in (df_md, df_cd):

df['lem'] = df.apply(lambda x: fetchLemmantizedWords(x.Sentences

.lower()

.replace(r'[^a-z]', ' ')

.split()),

result_type='reduce',

axis=1)

Result for df_cd:

| SI.No | Sentences | lem | |

|---|---|---|---|

| 0 | 1 | Emma is writing a letter. | [29, 5, 4, 9, 28] |

| 1 | 2 | We wake up early in the morning. | [16, 8, 21, 14, 13, 4, 6] |

| 2 | 3 | Did Emma Write a letter? | [30, 9, 23, 16, 5] |

| 3 | 4 | We sleep early at night. | [8, 25, 24, 7, 3] |

| 4 | 5 | Emma wrote a letter. | [30, 30, 15, 7] |

Next, we use a cross-join to make a dataframe with all possible combinations of md and cd data.

df_merged = pd.merge(df_md[['SI.No', 'lem']],

df_cd[['SI.No', 'lem']],

how='cross',

suffixes=('_md','_cd')

)

df_merged contents:

| SI.No_md | lem_md | SI.No_cd | lem_cd | |

|---|---|---|---|---|

| 0 | 1 | [14, 22, 9, 21, 4] | 1 | [3, 4, 8, 17, 2] |

| 1 | 1 | [14, 22, 9, 21, 4] | 2 | [29, 3, 10, 2, 19, 18, 21] |

| 2 | 1 | [14, 22, 9, 21, 4] | 3 | [20, 22, 29, 4, 3] |

| 3 | 1 | [14, 22, 9, 21, 4] | 4 | [17, 7, 1, 27, 19] |

| 4 | 1 | [14, 22, 9, 21, 4] | 5 | [17, 5, 3, 29] |

| 5 | 2 | [12, 30, 10, 11, 7, 11, 8] | 1 | [3, 4, 8, 17, 2] |

| 6 | 2 | [12, 30, 10, 11, 7, 11, 8] | 2 | [29, 3, 10, 2, 19, 18, 21] |

| 7 | 2 | [12, 30, 10, 11, 7, 11, 8] | 3 | [20, 22, 29, 4, 3] |

| 8 | 2 | [12, 30, 10, 11, 7, 11, 8] | 4 | [17, 7, 1, 27, 19] |

| 9 | 2 | [12, 30, 10, 11, 7, 11, 8] | 5 | [17, 5, 3, 29] |

Next, we calculate the cosine value:

df_merged['cosine'] = df_merged.apply(lambda x: fetchCosine(x.lem_md,

x.lem_cd),

axis=1)

In the last step, we pivot the data and merge the original df_cd with the calculated results :

pd.merge(df_cd.drop(columns='lem').set_index('SI.No'),

df_merged.pivot_table(index='SI.No_cd',

columns='SI.No_md').droplevel(0, axis=1),

how='inner',

left_index=True,

right_index=True)

Result (again, these are dummy calculations):

| SI.No | Sentences | 1 | 2 |

|---|---|---|---|

| 1 | Emma is writing a letter. | 100 | 64 |

| 2 | We wake up early in the morning. | 63 | 100 |

| 3 | Did Emma Write a letter? | 100 | 5 |

| 4 | We sleep early at night. | 100 | 17 |

| 5 | Emma wrote a letter. | 35 | 9 |