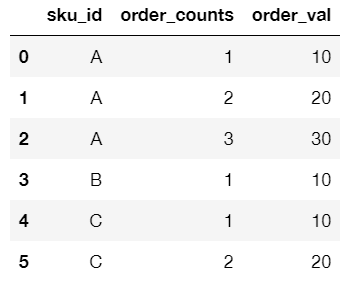

I have this:

df = pd.DataFrame({'sku_id' : ['A','A','A','B','C','C'],

'order_counts' : [1,2,3,1,1,2],

'order_val' : [10,20,30,10,10,20]})

which creates:

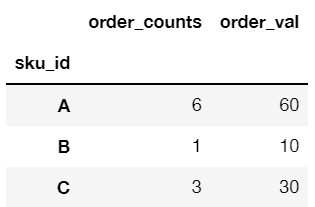

A simple groupby() of sku_id using df.groupby('sku_id').sum() would give:

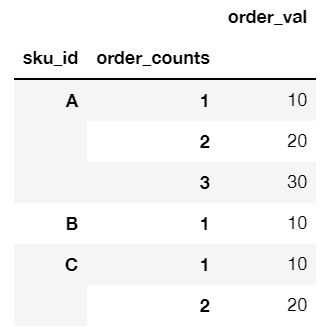

And a two-level grouping df.groupby(['sku_id', 'order_counts']).sum() would give:

But now I want a custom second-level grouping on order_counts such that any order_counts == 1 is considered a group and any order_counts > 1 are grouped together in a group labelled R (for Repeat order)

The result would look like this:

sku_id order_counts order_val

A 1 10

R 50

B 1 10

C 1 10

R 20

Is there a way to supply a custom groupby function to achieve this?

CodePudding user response:

Mask the != 1 values in the order_counts column with R, then use groupby sum

g = df['order_counts'].mask(df['order_counts'] != 1, 'R')

df.groupby(['sku_id', g])['order_val'].sum()

Result

sku_id order_counts

A 1 10

R 50

B 1 10

C 1 10

R 20

Name: order_val, dtype: int64

CodePudding user response:

How about just assign before you groupby?

new_df = (df

.assign(order_counts=lambda x: np.where(x['order_counts'] > 1, 'R', x['order_counts']))

.groupby(['sku_id', 'order_counts'])

.sum()

)

Output:

>>> new_df

order_val

sku_id order_counts

A 1 10

R 50

B 1 10

C 1 10

R 20