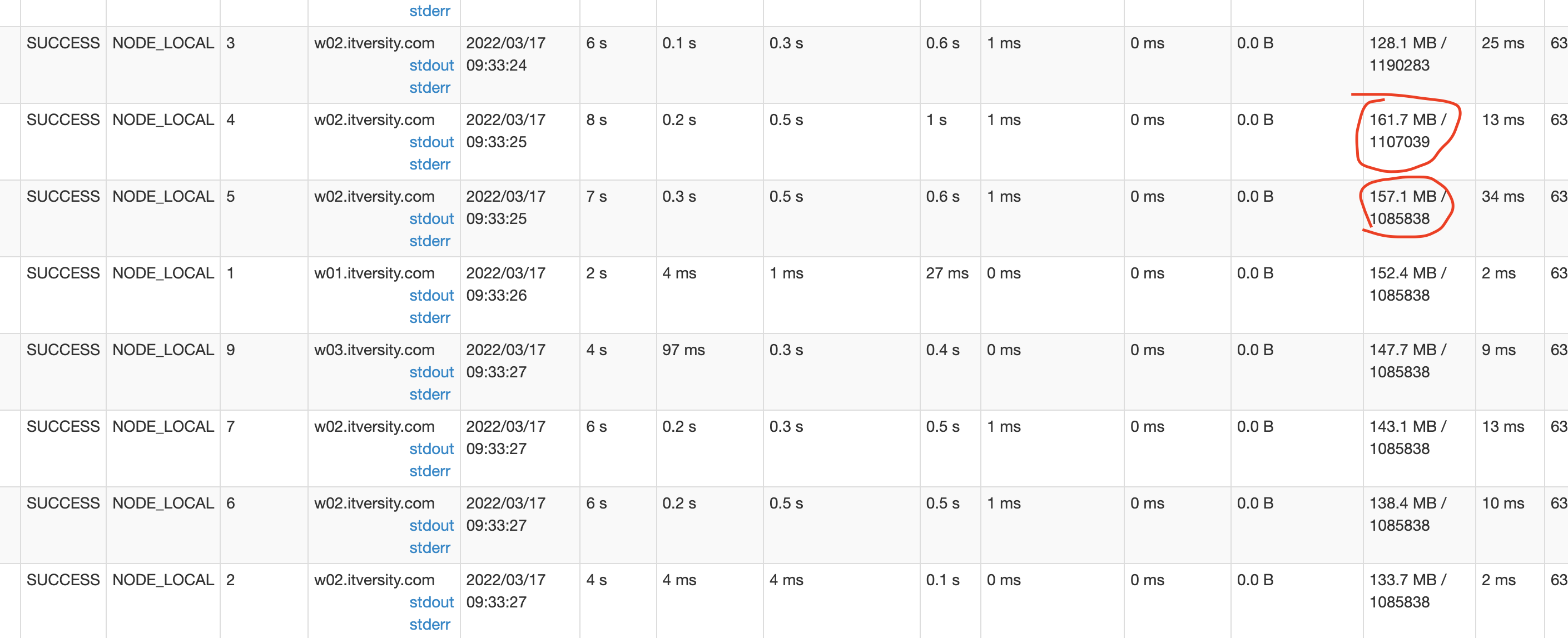

I loaded a 9.4 GB file and it created 74 partitions, which is what I expected (74 × 128 MB = 9472 MB). However, a few tasks are reading more than 128 MB, for example 160 MB, as shown in the screenshot. How is this possible? If the partition size is 128 MB, how can a task read more than that?
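The partition count in the question can be reproduced by cutting the file into fixed-size byte ranges. This is a minimal Python sketch under stated assumptions. It assumes the job is Spark with the split size left at the 128 MB default of `spark.sql.files.maxPartitionBytes` (Spark can pick a smaller split when the input is small relative to the available parallelism). It also treats 9.4 GB as roughly 9,400 MB, as the question's own arithmetic does. `split_offsets` is a hypothetical helper, not a Spark API:

```python
import math

MB = 1024 * 1024
# Assumed split size: the 128 MB default of spark.sql.files.maxPartitionBytes.
SPLIT_BYTES = 128 * MB


def split_offsets(file_bytes: int, split_bytes: int = SPLIT_BYTES) -> list[tuple[int, int]]:
    """Hypothetical helper: return (start, length) byte ranges covering the file."""
    return [
        (start, min(split_bytes, file_bytes - start))
        for start in range(0, file_bytes, split_bytes)
    ]


# Hypothetical input: 9.4 GB treated as ~9,400 MB, matching the question's arithmetic.
file_bytes = 9400 * MB
splits = split_offsets(file_bytes)
print(len(splits))                          # 74
print(math.ceil(file_bytes / SPLIT_BYTES))  # 74
print(splits[-1][1] // MB)                  # 56 (the last split is shorter)
```

The boundaries are pure byte offsets, so nothing guarantees that a row ends exactly on a 128 MB mark.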

Answer:

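The file's lines (rows) likely don't align exactly with the 128 MB block boundaries. With a line-based format such as text or CSV, a reader has to finish the last line that crosses the end of its split, so it can read past 128 MB. In addition, or alternatively, the in-memory representation of the data types may be slightly larger than the on-disk size.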
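To see why the bytes a task handles can differ from its split size, here is a small Python simulation with made-up line lengths. It assumes a line belongs to the split that contains its first byte. This simplifies how Hadoop's `LineRecordReader` assigns lines, and the two differ only for a line that starts exactly on a split boundary. `owned_line_bytes` is a hypothetical helper:

```python
def owned_line_bytes(line_lengths: list[int], split_bytes: int) -> list[int]:
    """Hypothetical helper: bytes of complete lines each split emits.

    Simplifying assumption: a whole line goes to the split containing its
    first byte, even if the line runs past that split's end.
    """
    file_bytes = sum(line_lengths)
    num_splits = -(-file_bytes // split_bytes)  # ceiling division
    owned = [0] * num_splits
    offset = 0
    for length in line_lengths:
        # The split where this line starts reads the whole line.
        owned[offset // split_bytes] += length
        offset += length
    return owned


# Hypothetical 40-byte file (each length includes its newline), 10-byte splits.
print(owned_line_bytes([7, 7, 7, 7, 12], split_bytes=10))  # [14, 7, 19, 0]
```

Here split 2 emits 19 bytes because it finishes the 12-byte line that starts at offset 28, while split 3 emits nothing. A split can exceed its size by less than one line's length, so this effect is large only when individual lines are long.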