My company has a lot of data (database: PostgreSQL), and we now need to add a search feature on top of it. We have been asked to use Azure Cognitive Search. I want to know how we can transform the data and send it to the Azure search engine. There are a few cases we have to handle:

- How do we transfer the existing data and upload it to the search engine's index?

- What is the easiest way to keep the search engine up to date with new records from our production database? (For now we use Java back-end code to transform the data and update the index, but it is very time-consuming.)

- What is the best way to handle changes to the existing database structure? How can we update the indexer without a lot of work, such as recreating the indexers every time?

Is there any way to automatically update the index whenever database records change?

Answer:

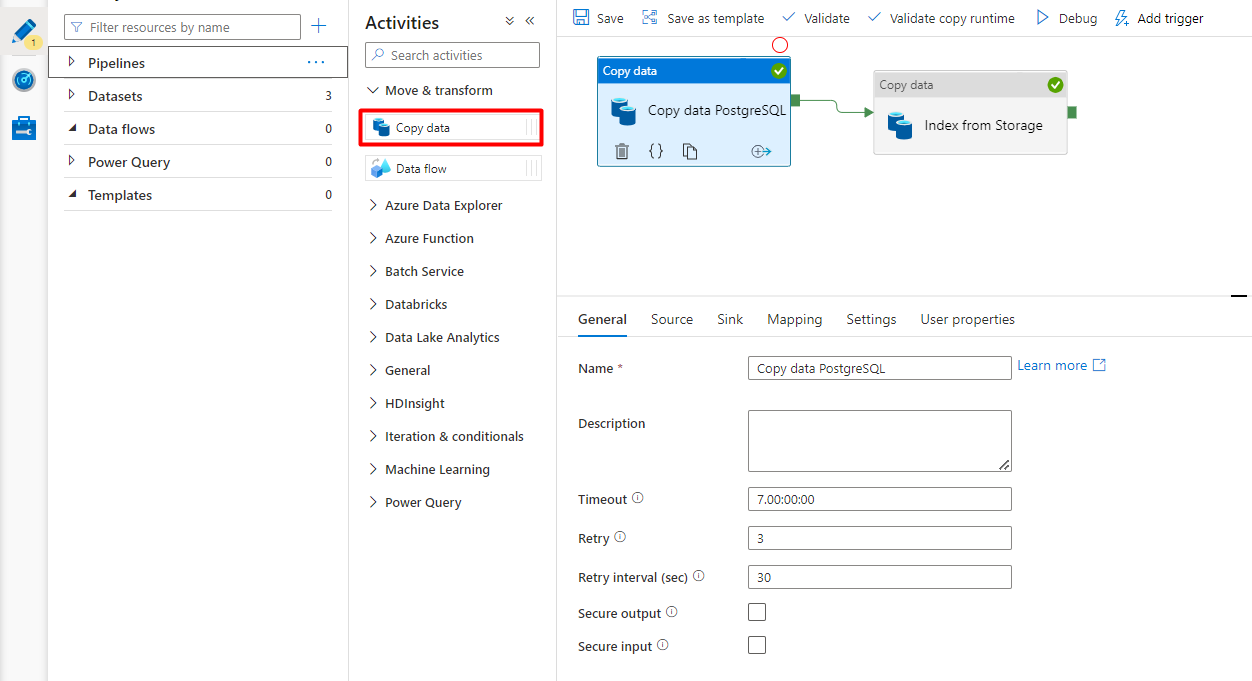

You can either write code to push data from your PostgreSQL database into the Azure Search index via the
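For the code-based (push) option, here is a minimal sketch using only the JDK's `java.net.http` client (Java 11+). It assumes the Azure Cognitive Search REST endpoint `POST https://{service}.search.windows.net/indexes/{index}/docs/index` with an admin `api-key` header. The API version `2020-06-30`, the service/index names, and the field names are assumptions, so adjust them to your setup. Each row is sent with `"@search.action": "mergeOrUpload"`, so the same code handles both new and changed rows, and rows are split into batches of at most 1000 documents per request.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SearchPushSketch {
    // Assumed REST API version; use one your search service supports.
    static final String API_VERSION = "2020-06-30";

    // Minimal JSON string escaping (quotes, backslashes, control characters).
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append('\\').append(String.format("u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Builds one batch body: {"value":[{"@search.action":"mergeOrUpload", field: value, ...}]}.
    // Every value is treated as a string here (hypothetical all-Edm.String index schema).
    static String buildBatch(List<Map<String, String>> rows) {
        StringBuilder sb = new StringBuilder("{\"value\":[");
        for (int i = 0; i < rows.size(); i++) {
            if (i > 0) sb.append(',');
            sb.append("{\"@search.action\":\"mergeOrUpload\"");
            for (Map.Entry<String, String> e : rows.get(i).entrySet()) {
                sb.append(',').append(jsonString(e.getKey())).append(':').append(jsonString(e.getValue()));
            }
            sb.append('}');
        }
        return sb.append("]}").toString();
    }

    // Splits rows into consecutive batches of at most `size` documents.
    static List<List<Map<String, String>>> chunk(List<Map<String, String>> rows, int size) {
        List<List<Map<String, String>>> out = new ArrayList<>();
        for (int i = 0; i < rows.size(); i += size) {
            out.add(rows.subList(i, Math.min(i + size, rows.size())));
        }
        return out;
    }

    // Builds (but does not send) the POST request to the docs/index endpoint.
    static HttpRequest buildRequest(String service, String index, String apiKey, String body) {
        URI uri = URI.create("https://" + service + ".search.windows.net/indexes/" + index
                + "/docs/index?api-version=" + API_VERSION);
        return HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/json")
                .header("api-key", apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Pushes all rows; needs a real service and admin key, so main() does not call it.
    static void pushAll(HttpClient client, String service, String index, String apiKey,
                        List<Map<String, String>> rows) throws IOException, InterruptedException {
        for (List<Map<String, String>> batch : chunk(rows, 1000)) {
            HttpResponse<String> resp = client.send(
                    buildRequest(service, index, apiKey, buildBatch(batch)),
                    HttpResponse.BodyHandlers.ofString());
            // 200 means every document succeeded; 207 means some failed (details in the body).
            if (resp.statusCode() != 200) {
                throw new IllegalStateException("Batch failed: " + resp.statusCode() + " " + resp.body());
            }
        }
    }

    public static void main(String[] args) {
        Map<String, String> doc = new LinkedHashMap<>();
        doc.put("id", "42");
        doc.put("title", "Hello \"search\"");
        System.out.println(buildBatch(List.of(doc)));
    }
}
```

Running `main` prints the JSON body for a single hypothetical document. In a real job you would read the rows from PostgreSQL (e.g. via JDBC), map each one to the index's field names, and call `pushAll`. Treating a 207 response as a failure is a deliberately strict choice for the sketch; you may prefer to parse the body and retry only the failed keys.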

Once the staged data is in the storage account in delimitedText format, you can also use the built-in Azure Blob indexer, with change tracking enabled, to load it into the index.
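A minimal sketch of the staged route, assuming the rows are exported as CSV files with a header line (the export job itself, e.g. a copy pipeline or your own code, is not shown). It renders rows as RFC 4180-style CSV and builds a blob indexer definition that uses `parsingMode: delimitedText` with `firstLineContainsHeaders: true`, so each CSV line becomes one search document. The indexer name `pg-export-indexer`, data source name `pg-export-blobs`, index name `products`, and the hourly schedule `PT1H` are hypothetical. The definition would be sent with `PUT /indexers/{name}?api-version=...`, which creates the indexer or updates it in place. Blob indexers pick up new or modified blobs on each scheduled run based on the blob's last-modified time.

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

public class BlobStagingSketch {
    // Quotes one CSV field (RFC 4180 style): wrap in quotes when it contains a comma,
    // quote, or line break, and double any embedded quotes. SQL NULL becomes an empty field.
    static String csvField(String value) {
        if (value == null) return "";
        boolean needsQuotes = value.contains(",") || value.contains("\"")
                || value.contains("\n") || value.contains("\r");
        String escaped = value.replace("\"", "\"\"");
        return needsQuotes ? "\"" + escaped + "\"" : escaped;
    }

    // Renders one line of comma-separated fields terminated by a newline.
    static String csvLine(List<String> fields) {
        StringJoiner joiner = new StringJoiner(",", "", "\n");
        for (String f : fields) joiner.add(csvField(f));
        return joiner.toString();
    }

    // Header line first, so the indexer can take field names from it (firstLineContainsHeaders).
    static String toCsv(List<String> header, List<List<String>> rows) {
        StringBuilder sb = new StringBuilder(csvLine(header));
        for (List<String> row : rows) sb.append(csvLine(row));
        return sb.toString();
    }

    // Blob indexer definition that parses each CSV line as one search document.
    // Names are assumed to be simple identifiers, so they are not JSON-escaped here.
    static String indexerDefinition(String name, String dataSource, String targetIndex) {
        return "{"
                + "\"name\":\"" + name + "\","
                + "\"dataSourceName\":\"" + dataSource + "\","
                + "\"targetIndexName\":\"" + targetIndex + "\","
                + "\"schedule\":{\"interval\":\"PT1H\"},"
                + "\"parameters\":{\"configuration\":{"
                + "\"parsingMode\":\"delimitedText\","
                + "\"firstLineContainsHeaders\":true}}"
                + "}";
    }

    public static void main(String[] args) {
        String csv = toCsv(Arrays.asList("id", "title"),
                Arrays.asList(Arrays.asList("1", "Hello, world"), Arrays.asList("2", null)));
        System.out.print(csv);
        System.out.println(indexerDefinition("pg-export-indexer", "pg-export-blobs", "products"));
    }
}
```

Keeping the indexer definition in code (or in a JSON file under version control) means a schema change is a matter of editing the definition and re-running the same `PUT`, rather than creating a new indexer by hand.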