I am trying to use the face-api.js library to do emotion detection on a live webcam feed. It works perfectly in my local environment, but when I host it on AWS Amplify I get the following error:

Uncaught (in promise) Error: Based on the provided shape, [1,1,16,32], the tensor should have 512 values but has 231

This is the code that tries to load the models:

useEffect(() => {
  if (checked) {
    const MODEL_URL = './models';
    const initModels = async () => {
      await face.nets.tinyFaceDetector.loadFromUri(MODEL_URL);
      await face.nets.faceLandmark68Net.loadFromUri(MODEL_URL);
      await face.nets.faceRecognitionNet.loadFromUri(MODEL_URL);
      await face.nets.faceExpressionNet.loadFromUri(MODEL_URL);
      enableWebcam();
    };
    initModels();
  }
}, [checked]);

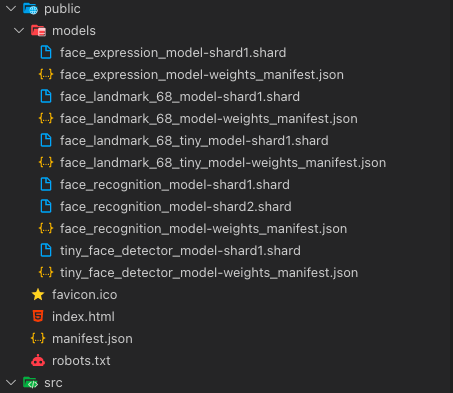

This is the models that are found in the ./public folder:

I found that people had a similar problem here, and I tried the suggested solution: I renamed all the shard files to use a .shard or .bin extension and updated the manifest.json accordingly, but I still get the same error.

I've been trying to debug this all day but can't seem to find a solution. Any help to point me in the right direction would be much appreciated. This is my first time working with ML models in a web application environment using React and AWS.

CodePudding user response:

Okay, so after hours of debugging, the solution turned out to be fairly simple. I just had to load the models differently, using the top-level load functions and the absolute path /models instead of ./models:

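useEffect(() => {
  if (checked) {
    // Absolute path, so it does not depend on the current route
    const MODEL_URL = '/models';
    const initModels = async () => {
      // Load all four models in parallel and wait for them to finish
      await Promise.all([
        face.loadTinyFaceDetectorModel(MODEL_URL),
        face.loadFaceLandmarkModel(MODEL_URL),
        face.loadFaceRecognitionModel(MODEL_URL),
        face.loadFaceExpressionModel(MODEL_URL)
      ]);
      // Start the webcam only once every model is available
      enableWebcam();
    };
    initModels();
  }
}, [checked]);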
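
If anyone hits the same error and wants to check what the host is actually returning for the model files, here is a minimal sketch. It rests on an assumption this thread does not confirm: that the "should have 512 values but has 231" mismatch comes from the server sending something other than the binary shard, for example an HTML fallback page. The helper names looksLikeHtml and inspectModelFile are made up for illustration and are not part of face-api.js.

```javascript
// Hypothetical helper (not part of face-api.js): returns true when the first
// bytes of a response body look like an HTML page instead of binary weights.
function looksLikeHtml(bytes) {
  // Decode only the first 64 bytes; that is enough to spot a doctype or <html> tag.
  const head = String.fromCharCode(...bytes.slice(0, 64))
    .trimStart()
    .toLowerCase();
  return head.startsWith('<!doctype') || head.startsWith('<html');
}

// Hypothetical helper: fetches one model file and summarises what came back.
async function inspectModelFile(url) {
  const res = await fetch(url);
  const bytes = new Uint8Array(await res.arrayBuffer());
  return {
    url,
    status: res.status,
    contentType: res.headers.get('content-type'),
    byteLength: bytes.length,
    looksLikeHtml: looksLikeHtml(bytes),
  };
}
```

From the browser console on the deployed site, you could run inspectModelFile('/models/<shard file name from your manifest>').then(console.log) and compare byteLength with the size of the file on disk. If looksLikeHtml comes back true, the request is probably not reaching the file in the public folder.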