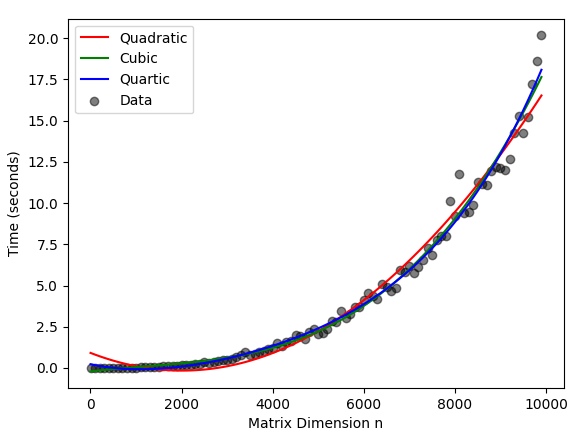

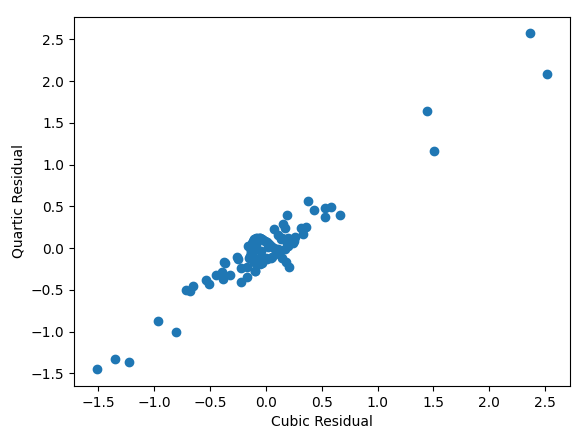

Since none of the available complexities get down to quadratic time, I am unsurprising that visually the quadratic model had the worst fit. Both the cubic and quartic models had excellent visual fit, and unsurprisingly their residuals are closely correlated.

Some related questions exist, but they do not have an answer for this specific implementation.

- Space complexity of matrix inversion, determinant and adjoint

- Time and space complexity of determinant of a matrix

- Experimentally determining computing complexity of matrix determinant

Since this implementation is used by a lot of Python programmers world-wide, it may benefit the understanding of a lot of people if an answer was tracked down.

CodePudding user response:

TL;DR: it is between O(n^2.81) and O(n^3) regarding the target BLAS implementation.

Indeed, Numpy uses a LU decomposition (in the log space). The actual implementation can be found here. It indeed uses the sgetrf/dgetrf primitive of LAPACK. Multiple libraries provides such a libraries. The most famous is the one of NetLib though it is not the fastest. The Intel MKL is an example of library providing a fast implementation. Fast LU decomposition algorithms use tiling methods so to use a matrix multiplication internally. Their do that because the matrix multiplication is one of the most optimized methods linear algebra libraries (for example the MKL, BLIS, and OpenBLAS generally succeed to reach nearly optimal performance on modern processors). More generally, the complexity of the LU decomposition is the one of the matrix multiplication.

The complexity of the naive squared matrix multiplication is O(n^3). Faster algorithms exists like Strassen (running in ~O(n^2.81) time) which is often used for big matrices. The Coppersmith–Winograd algorithm achieves a significantly better complexity (~O(n^2.38)), but no linear algebra libraries actually use it since it is a galactic algorithm. Put it shortly, such algorithm is theoretically asymptotically better than others but the hidden constant make it impractical for any real-world usage. For more information about the complexity of the matrix multiplication, please read this article. Thus, in practice, the complexity of the matrix multiplication is between O(n^2.81) and O(n^3) regarding the target BLAS implementation (which is dependent of your platform and your configuration of Numpy).