I've started learning Azure Logic Apps, and my first task is to store the result of a specific Kusto query, obtained by calling the Azure Log Analytics API at https://api.loganalytics.io/v1/workspaces/{guid}/query.

I can already call the Log Analytics API successfully using the HTTP action in my Logic App. This is a sample response:

```json
{
  "tables": [
    {
      "name": "PrimaryResult",
      "columns": [
        { "name": "UserPrincipalName", "type": "string" },
        { "name": "OperationName", "type": "string" },
        { "name": "ResultDescription", "type": "string" },
        { "name": "AuthMethod", "type": "string" },
        { "name": "TimeGenerated", "type": "string" }
      ],
      "rows": [
        [
          "[email protected]",
          "Fraud reported - no action taken",
          "Successfully reported fraud",
          "Phone call approval (Authentication phone)",
          "22-05-25 [06:27:31 AM]"
        ],
        [
          "[email protected]",
          "Fraud reported - no action taken",
          "Successfully reported fraud",
          "Phone call approval (Authentication phone)",
          "22-05-25 [04:19:27 AM]"
        ]
      ]
    }
  ]
}
```

From this result, I'm stuck on how to iterate over the `rows` property of the JSON result and save that data to Azure Table Storage.

I hope someone can guide me on how to achieve this.

TIA!

Answer:

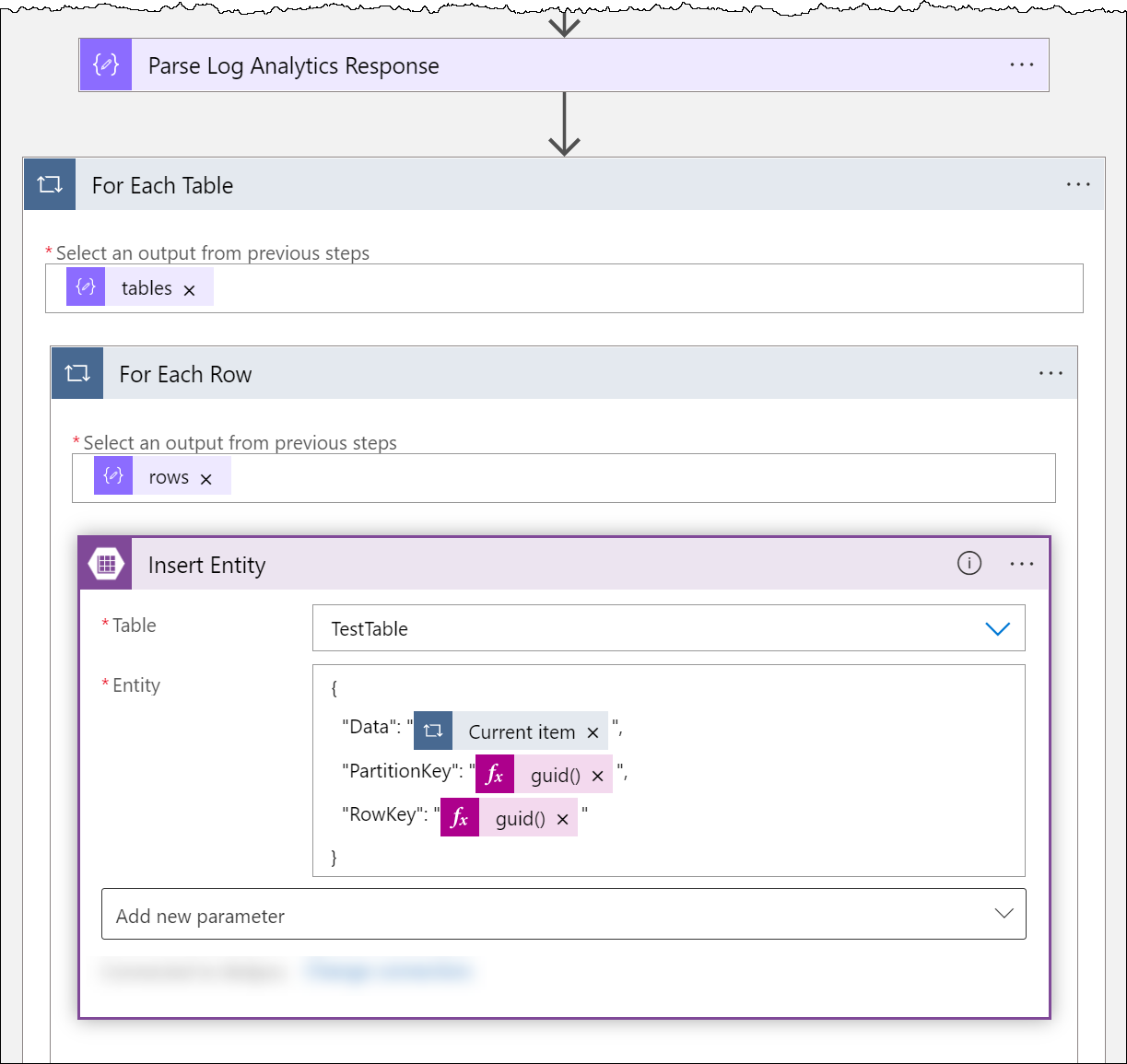

First, add a Parse JSON action and paste your JSON in as a sample payload to generate the schema.

Then add a For each (rename the loops accordingly) to traverse the rows. Because `rows` sits inside the `tables` array, the designer automatically wraps it in an outer For each over the tables.
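To make it clearer what those two nested loops walk over, here is a minimal Python sketch of the same traversal outside Logic Apps (it is an illustration, not Logic Apps code, and `iter_records` is a hypothetical helper). Each row in the response is a positional array, so its values line up with the `columns` list by index.

```python
import json

# Copy of the sample response from the question.
SAMPLE_RESPONSE = """
{
  "tables": [
    {
      "name": "PrimaryResult",
      "columns": [
        {"name": "UserPrincipalName", "type": "string"},
        {"name": "OperationName", "type": "string"},
        {"name": "ResultDescription", "type": "string"},
        {"name": "AuthMethod", "type": "string"},
        {"name": "TimeGenerated", "type": "string"}
      ],
      "rows": [
        ["[email protected]", "Fraud reported - no action taken",
         "Successfully reported fraud",
         "Phone call approval (Authentication phone)",
         "22-05-25 [06:27:31 AM]"],
        ["[email protected]", "Fraud reported - no action taken",
         "Successfully reported fraud",
         "Phone call approval (Authentication phone)",
         "22-05-25 [04:19:27 AM]"]
      ]
    }
  ]
}
"""


def iter_records(response: dict):
    """Yield every row as a dict keyed by column name."""
    for table in response["tables"]:          # outer loop: one per table
        names = [col["name"] for col in table["columns"]]
        for row in table["rows"]:             # inner loop: one per row
            # Rows are positional arrays; pair each value with its column.
            yield dict(zip(names, row))


if __name__ == "__main__":
    for record in iter_records(json.loads(SAMPLE_RESPONSE)):
        print(record["TimeGenerated"], record["OperationName"])
```

Zipping with the column names is what lets you later refer to a value as `AuthMethod` rather than as "the fourth element".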

This is the trickier part: you need to build an entity payload that contains your data plus the keys that identify it in your storage table (every Table Storage entity requires a `PartitionKey` and a `RowKey`).
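As a rough illustration of such a payload, the Python sketch below turns one record (a dict of column name to value) into an entity carrying the two required keys. `make_entity` and `is_valid_key` are hypothetical helpers. The forbidden-character list reflects the documented Table Storage key restrictions (`/`, `\`, `#`, `?` and control characters); treat it as an assumption and check the current Azure documentation before relying on it.

```python
# Characters Azure Table Storage rejects in PartitionKey/RowKey values,
# in addition to the control-character ranges checked below.
FORBIDDEN_KEY_CHARS = set("/\\#?")


def is_valid_key(key: str) -> bool:
    """Return True if `key` contains no character Table Storage disallows."""
    return not any(
        ch in FORBIDDEN_KEY_CHARS
        or ord(ch) <= 0x1F              # U+0000..U+001F (tab, newline, ...)
        or 0x7F <= ord(ch) <= 0x9F      # U+007F..U+009F
        for ch in key
    )


def make_entity(record: dict, partition_key: str, row_key: str) -> dict:
    """Copy the record's columns and attach the two mandatory keys."""
    for key in (partition_key, row_key):
        if not is_valid_key(key):
            raise ValueError(f"invalid table key: {key!r}")
    entity = dict(record)                 # one property per query column
    entity["PartitionKey"] = partition_key
    entity["RowKey"] = row_key
    return entity
```

Setting the keys after copying the record means a column that happened to be called `PartitionKey` or `RowKey` could not silently override them.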

This is my test flow with your data ...

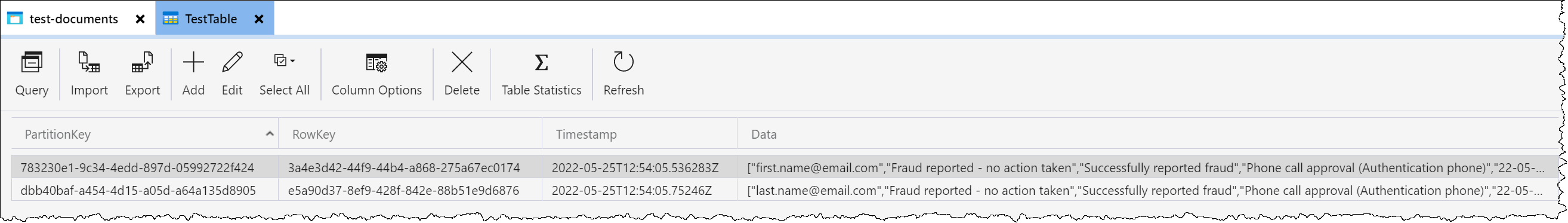

... and this is the end result in Storage Explorer ...

The JSON in the Entity field of the Insert Entity action looks like this:

```json
{
  "Data": "@{items('For_Each_Row')}",
  "PartitionKey": "@{guid()}",
  "RowKey": "@{guid()}"
}
```
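For comparison, here is roughly what that entity evaluates to for one row, written as a Python sketch rather than Logic Apps code. It assumes that interpolating the row with `@{...}` turns the array into its JSON text; the exact whitespace Logic Apps produces may differ from `json.dumps`. `entity_like_answer` is a hypothetical name.

```python
import json
import uuid


def entity_like_answer(row: list) -> dict:
    """Approximate the Insert Entity payload above for a single row."""
    return {
        # "@{items('For_Each_Row')}": the whole row array as one string
        "Data": json.dumps(row),
        # "@{guid()}": a fresh random GUID on every evaluation
        "PartitionKey": str(uuid.uuid4()),
        "RowKey": str(uuid.uuid4()),
    }
```

Storing the row as a single `Data` string is the simplest option; mapping each column to its own property instead would make individual fields filterable in the table.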

I simply used GUIDs to make it work, but you'd want to derive the keys from your data to make them more meaningful, perhaps from the date field.
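One way to make the keys meaningful is sketched below in Python. The scheme is a hypothetical choice, not something from the answer: one partition per user (`UserPrincipalName`) and a row key built from the `TimeGenerated` text plus a short SHA-256 hash of the record, so two events with the same timestamp don't collide and the same record always maps to the same keys. It deliberately does not parse `TimeGenerated`, since `22-05-25` could be read as either year-first or day-first.

```python
import hashlib
import json

# Characters Table Storage rejects in keys (control characters handled below).
FORBIDDEN = "/\\#?"


def sanitize_key(value: str) -> str:
    """Replace disallowed key characters with underscores."""
    return "".join(
        "_" if ch in FORBIDDEN or ord(ch) < 0x20 or 0x7F <= ord(ch) <= 0x9F
        else ch
        for ch in value
    )


def derive_keys(record: dict) -> tuple[str, str]:
    """Hypothetical scheme: user as partition, timestamp + hash as row key."""
    # sort_keys makes the hash independent of column order.
    digest = hashlib.sha256(
        json.dumps(record, sort_keys=True).encode("utf-8")
    ).hexdigest()[:16]
    partition_key = sanitize_key(record["UserPrincipalName"])
    row_key = sanitize_key(f'{record["TimeGenerated"]}_{digest}')
    return partition_key, row_key
```

Because the keys are a pure function of the row, re-running the same query produces the same keys for rows it has already seen.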

Answer:

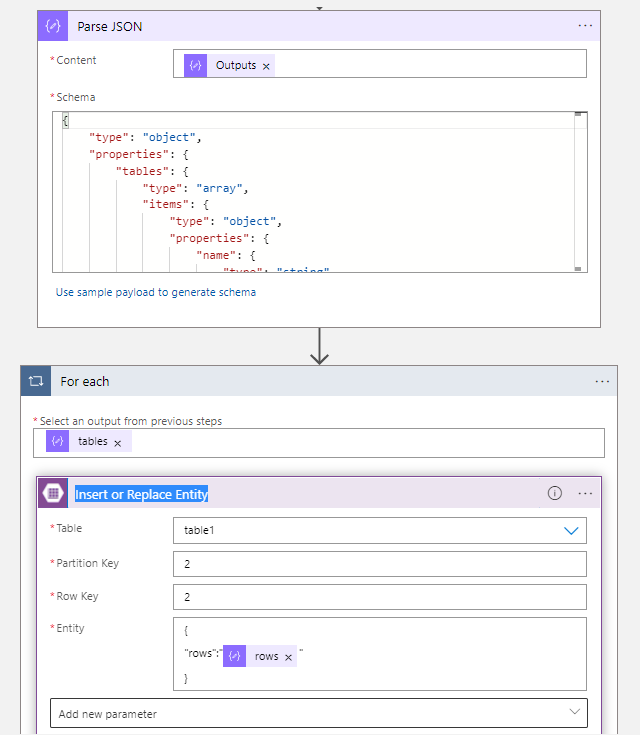

You can use Parse JSON to extract the inner data from the output and then use the Insert or Replace Entity action inside a For each loop. Here is a screenshot of my logic app:

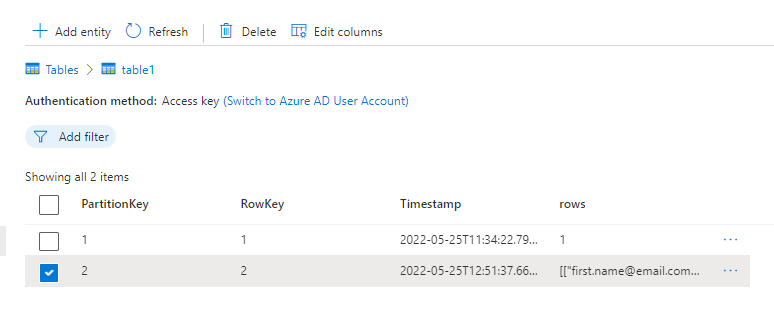

In my storage account
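The difference between Insert Entity and Insert or Replace Entity matters once the flow runs more than once. The toy in-memory table below is a hypothetical stand-in, not the Azure SDK, and models the two behaviours: a plain insert fails when an entity with the same `PartitionKey` and `RowKey` already exists (Table Storage answers with 409 Conflict), while insert-or-replace overwrites the whole entity.

```python
class EntityAlreadyExists(Exception):
    """Stands in for Table Storage's 409 Conflict on a duplicate insert."""


class InMemoryTable:
    """Toy table keyed by (PartitionKey, RowKey); not the Azure SDK."""

    def __init__(self) -> None:
        self._entities: dict = {}

    @staticmethod
    def _key(entity: dict) -> tuple:
        return (entity["PartitionKey"], entity["RowKey"])

    def insert(self, entity: dict) -> None:
        """Like Insert Entity: refuse to overwrite an existing entity."""
        key = self._key(entity)
        if key in self._entities:
            raise EntityAlreadyExists(key)
        self._entities[key] = dict(entity)

    def insert_or_replace(self, entity: dict) -> None:
        """Like Insert or Replace Entity: create, or replace wholesale."""
        self._entities[self._key(entity)] = dict(entity)

    def get(self, partition_key: str, row_key: str) -> dict:
        return self._entities[(partition_key, row_key)]

    def __len__(self) -> int:
        return len(self._entities)
```

With keys derived from the data, re-running the flow with Insert or Replace Entity updates the stored rows instead of failing (plain Insert) or piling up duplicates (random GUID keys).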