I am converting a Keras model to PyTorch and would appreciate your help.

Keras Code:

    def model(input_shape):
        input_layer = keras.layers.Input(input_shape)

        conv1 = keras.layers.Conv1D(filters=16, kernel_size=3, padding="same")(input_layer)
        conv1 = keras.layers.BatchNormalization()(conv1)
        conv1 = keras.layers.ReLU()(conv1)

        global_average_pooling = keras.layers.GlobalAveragePooling1D()(conv1)
        output_layer = keras.layers.Dense(number_of_classes, activation="softmax")(global_average_pooling)

        return keras.models.Model(inputs=input_layer, outputs=output_layer)

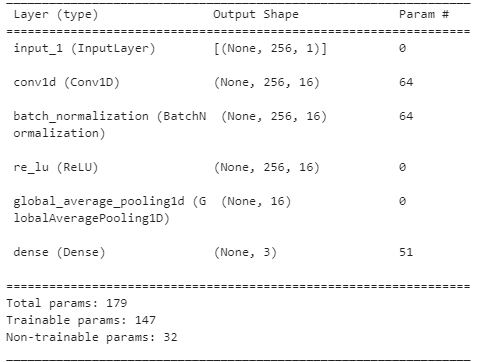

Summary of Model:

My Code Is:

    class model(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            #number_of_classes = data_config.number_of_classes
            self.conv1 = nn.Conv1d(256,128,1) # PyTorch does not support same padding,
            self.bn1=nn.BatchNorm1d(128)
            #self.relu=nn.functional.relu_(16)
            self.avg = nn.AvgPool1d(1)

        def forward(self,x):
            x = self.conv1(x)
            x = self.bn1(x)
            #x = self.relu(x)
            x = self.avg(x)
            output = F.log_softmax(x)
            return output

Could someone please help me with this?

Greets!

CodePudding user response:

I wrote PyTorch code that reproduces your Keras `model.summary()` output, and used `torchsummary` to print the PyTorch summary, as shown below:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    # pip install torchsummary
    from torchsummary import summary

    class Net(nn.Module):
        def __init__(self):
            super(Net,self).__init__()
            self.conv1 = nn.Conv1d(1,256,2)
            self.batch1 = nn.BatchNorm1d(256)
            self.avgpl1 = nn.AvgPool1d(1, stride=1)
            self.fc1 = nn.Linear(4096,3)

        def forward(self,x):
            x = self.conv1(x)
            x = self.batch1(x)
            x = F.relu(x)
            x = self.avgpl1(x)
            x = torch.flatten(x,1)
            x = F.relu(self.fc1(x))
            return x

    net = Net()
    print(net)
    summary(net,(1, 17))

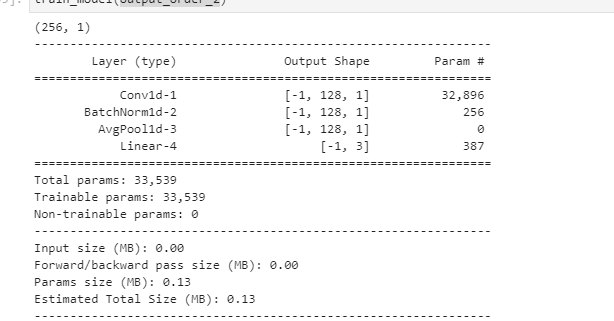

Output:

    Net(
      (conv1): Conv1d(1, 256, kernel_size=(2,), stride=(1,))
      (batch1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (avgpl1): AvgPool1d(kernel_size=(1,), stride=(1,), padding=(0,))
      (fc1): Linear(in_features=4096, out_features=3, bias=True)
    )

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv1d-1              [-1, 256, 16]             768
           BatchNorm1d-2              [-1, 256, 16]             512
             AvgPool1d-3              [-1, 256, 16]               0
                Linear-4                    [-1, 3]          12,291
    ================================================================
    Total params: 13,571
    Trainable params: 13,571
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.00
    Forward/backward pass size (MB): 0.09
    Params size (MB): 0.05
    Estimated Total Size (MB): 0.15
    ----------------------------------------------------------------
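
The numbers in this summary can be checked by hand, which helps when comparing a PyTorch model against a Keras `model.summary()`. The sketch below redoes the arithmetic for the `Net` above with a `(1, 17)` input; the variable names are only illustrative and not part of the original code.

```python
# Shapes and parameter counts for Net with a (1, 17) input, computed by hand.
in_ch, out_ch, kernel, length = 1, 256, 2, 17
num_classes = 3

conv_len = length - kernel + 1                    # no padding, stride 1
conv_params = in_ch * out_ch * kernel + out_ch    # weights + biases
bn_params = 2 * out_ch                            # one scale and one shift per channel
flat = out_ch * conv_len                          # AvgPool1d(1) keeps the length as is
fc_params = flat * num_classes + num_classes      # weights + biases

print(conv_len, conv_params, bn_params, flat, fc_params)  # 16 768 512 4096 12291
print(conv_params + bn_params + fc_params)                # 13571
```

The flattened size of 4096 is why `fc1` is declared as `nn.Linear(4096, 3)`; a different input length would require a different `in_features` value.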

CodePudding user response:

My current code is:

    class Net(nn.Module):
        def __init__(self):
            super(Net,self).__init__()
            self.conv1 = nn.Conv1d(256,128,1)
            self.batch1 = nn.BatchNorm1d(128)
            self.avgpl1 = nn.AvgPool1d(1, stride=1)
            self.fc1 = nn.Linear(128,3)

        def forward(self,x):
            x = self.conv1(x)
            x = self.batch1(x)
            x = F.relu(x)
            x = self.avgpl1(x)
            x = torch.flatten(x,1)
            x = F.log_softmax(self.fc1(x))
            return x
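
Note that `nn.AvgPool1d(1)` averages over windows of a single element, so it returns its input unchanged and does not act as a global average pooling layer. A closer match to the Keras model in the question might look like the sketch below. It assumes a channels-first input of shape `(batch, channels, length)`; the class name `KerasLikeNet` and the sizes `in_channels=1` and `num_classes=3` are placeholders rather than values taken from the original post.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class KerasLikeNet(nn.Module):
    """Hypothetical PyTorch counterpart of the Keras model in the question."""

    def __init__(self, in_channels: int, num_classes: int):
        super().__init__()
        # kernel_size=3 with padding=1 keeps the sequence length unchanged,
        # which matches Keras padding="same" at stride 1.
        self.conv1 = nn.Conv1d(in_channels, 16, kernel_size=3, padding=1)
        self.bn1 = nn.BatchNorm1d(16)
        self.fc = nn.Linear(16, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x is (batch, channels, length); Keras Conv1D uses (batch, length, channels).
        x = F.relu(self.bn1(self.conv1(x)))
        x = x.mean(dim=-1)  # global average pooling over the length axis
        return F.log_softmax(self.fc(x), dim=1)  # log-probabilities per class


model = KerasLikeNet(in_channels=1, num_classes=3)  # assumed sizes
model.eval()
with torch.no_grad():
    out = model(torch.randn(4, 1, 17))
print(out.shape)  # torch.Size([4, 3])
```

Because this version returns log-probabilities, it would be trained with `nn.NLLLoss`; returning the raw `self.fc(x)` output and using `nn.CrossEntropyLoss` is the other common choice. Passing `dim=1` explicitly also avoids the implicit-dimension warning that `F.log_softmax(x)` raises.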