I am trying to use Structured Streaming in Databricks with a socket as the source and the console as the output sink.

However, I am not able to see any output in Databricks.

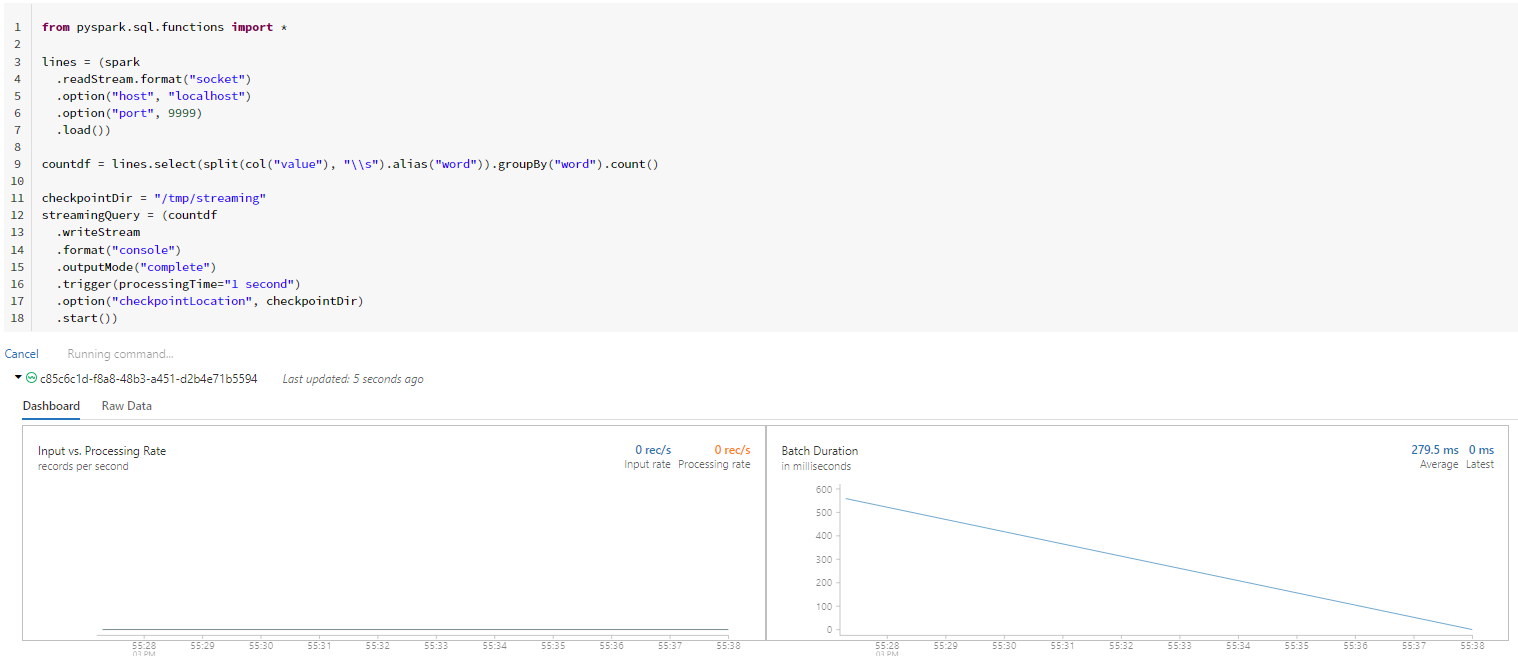

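    from pyspark.sql.functions import col, explode, split

    # Read lines of text from a socket on the driver host.
    lines = (spark
        .readStream.format("socket")
        .option("host", "localhost")
        .option("port", 9999)
        .load())

    # Split each line on whitespace, emit one row per word, then count per word.
    # Without explode(), the grouping key would be the whole array of words.
    countdf = (lines
        .select(explode(split(col("value"), "\\s")).alias("word"))
        .groupBy("word")
        .count())

    checkpointDir = "/tmp/streaming"

    # Write the running counts to the console sink once per second.
    streamingQuery = (countdf
        .writeStream
        .format("console")
        .outputMode("complete")
        .trigger(processingTime="1 second")
        .option("checkpointLocation", checkpointDir)
        .start())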

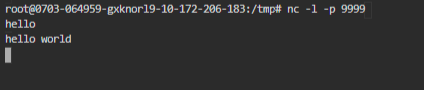

In another terminal, I send data over the socket:

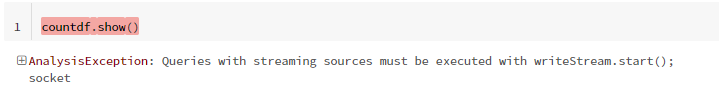
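For readers who cannot see the screenshot, the sending side can be sketched in Python as a stand-in for a netcat-style listener. This is only an illustration, not what the screenshot shows: `start_line_server` is a hypothetical helper name, and the sketch assumes it runs on the same machine as the Spark driver, because the socket source connects as a client to the configured host and port (`localhost:9999` in the question).

```python
import socket
import threading


def start_line_server(lines, host="localhost", port=9999):
    """Listen on host:port, accept one client, and send each line to it.

    Hypothetical helper for illustration. Returns the worker thread and the
    port actually bound (useful when port=0 asks the OS for a free port).
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen(1)

    def serve():
        # Closing the server socket when done frees the port again.
        with server:
            conn, _ = server.accept()
            with conn:
                for line in lines:
                    # The socket source reads newline-delimited UTF-8 text.
                    conn.sendall((line + "\n").encode("utf-8"))

    thread = threading.Thread(target=serve, daemon=True)
    thread.start()
    return thread, server.getsockname()[1]
```

Calling `start_line_server(["hello world", "hello spark"])` before starting the streaming query would make those two lines available to the socket source. The connection closes once all lines are sent, so this is suited to a quick test rather than a long-running feed.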

I do not see any updates in the dashboard, and no output is shown. When I try to call show() on countdf, I get: AnalysisException: Queries with streaming sources must be executed with writeStream.start();

CodePudding user response:

You can't call .show() on a streaming DataFrame. Also, with the console sink, the output is printed to the driver logs, not to the notebook. If you just want to see the results of your transformations, on Databricks you can use the display() function, which supports visualization of streaming datasets and accepts settings for the checkpoint location and trigger interval.
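A minimal sketch of that suggestion, assuming a Databricks notebook where `display` is available as a built-in and `countdf` is the streaming DataFrame from the question. The wrapper `show_stream` and the checkpoint path `/tmp/streaming_display` are hypothetical and exist only for illustration; a separate checkpoint directory is assumed here so that it does not collide with the one used by the console query.

```python
def show_stream(streaming_df, display_fn,
                trigger_interval="1 second",
                checkpoint_dir="/tmp/streaming_display"):
    """Render a streaming DataFrame inline in a Databricks notebook.

    display_fn is expected to be Databricks' built-in display(); it is passed
    in explicitly so this helper does not depend on notebook globals.
    """
    return display_fn(
        streaming_df,
        processingTime=trigger_interval,    # how often the view refreshes
        checkpointLocation=checkpoint_dir,  # hypothetical path, adjust as needed
    )
```

In a notebook cell this amounts to `show_stream(countdf, display)`, or equivalently calling `display(countdf, processingTime="1 second", checkpointLocation="/tmp/streaming_display")` directly.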