I am working on one task and have to compute average on N images of format CV_16UC1 using CUDA. when I try that the final result is always black. So, to test I removed the N images and basically sent only one image as input to cuda kernel and copied it back as it is and even that is black. Adding the code snippet here:

#include <cuda_runtime.h>

#include <device_launch_parameters.h>

#include <opencv2/opencv.hpp>

#include <opencv2/core/cuda/common.hpp>

using namespace std;

__global__ void vectorAdd10(unsigned short* input, unsigned short* output, int width, int height, int inStep, int outStep) {

// Index of current thread

const int x = blockIdx.x * blockDim.x threadIdx.x;

const int y = blockIdx.y * blockDim.y threadIdx.y;

// Number of channels

const int in_c = inStep / width;

const int out_c = outStep / width;

// Only valid threads perform memory I/O

if ((x < width) && (y < height)) {

// Location of pixel

const int in_tid = y * inStep (in_c * x);

const int out_tid = y * outStep (out_c * x);

// Invert

for (int i = 0; i < in_c; i) {

output[out_tid i] = static_cast<unsigned short>(input[in_tid i]);

}

}

}

int main() { //Umesh: replace this function with other name

cv::Mat& result = cv::Mat(1024, 1024, CV_16UC1);;

cv::Mat input = cv::imread("Frame1.png");

const int inBytes = input.step * input.rows;

const int outBytes = result.step * result.rows;

unsigned short* b_input, * b_output;

std::cout << "step 1" << std::endl;

b_input = (unsigned short*)malloc(inBytes);

b_output = (unsigned short*)malloc(outBytes);

cout << "step 21" << endl;

cudaMalloc<unsigned short>(&b_input, inBytes);

cudaMalloc<unsigned short>(&b_output, outBytes);

cout << "step 2" << endl;

cudaMemcpy(b_input, input.ptr(), inBytes, cudaMemcpyHostToDevice);

cout << "step 3" << endl;

// Threadblock size

const dim3 block(16, 16);

const dim3 grid(cv::cuda::device::divUp(input.cols, block.x), cv::cuda::device::divUp(input.rows, block.y));

// Grid size

vectorAdd10 << <grid, block >> > (b_input, b_output, input.cols, input.rows, input.step, result.step);

cout << "step 4" << endl;

cudaSafeCall(cudaDeviceSynchronize());

// Copy sum vector from device to host

cudaSafeCall(cudaMemcpy(result.ptr(), b_output, outBytes, cudaMemcpyDeviceToHost));

cout << "step 5" << endl;

cv::imshow("Result", result);

cv::waitKey(3000);

cout << "step 6" << endl;

printf("COMPLETED SUCCESFULLY\n");

}

The expectation is, if you check above I am just sending one image as input and reading it back using cuda kernel, I expect to see the same input image as result.

Please help.

Thank you in advance

CodePudding user response:

There are potentially a number of things to sort out, some of which may be dependent on what kind of image (e.g. dimensions, color channels) you are loading initially.

In host code:

cv::Mat input = cv::imread("Frame1.png");

This pretty much leaves open the interpretation of the image to OpenCV. When I do this, depending on the image, I typically get a 3 channel 8-bit per color image, even for one that I previously saved in OpenCV as 16 bit single channel. So my suggestion would be to force OpenCV at least to create a CV_16UC1 image on the load operation:

cv::Mat input = cv::imread("Frame1.png", CV_16UC1);

So at least we can be confident of the number of color channels (1) and bit depth per channel (16). This is necessary to align with your expectation of unsigned short in your kernel code. I would also suggest you be certain that the dimensions of the image you are loading are 1024 x 1024 to match your output. If not, your code is broken and I'm not going to make this answer longer by discussing how to deal with that.

b_input = (unsigned short*)malloc(inBytes);

b_output = (unsigned short*)malloc(outBytes);

In CUDA, when allocating space for device variables, we don't start out by first allocating host space using the same pointers. These lines of code are just wrong, unnecessary, and need to be removed. This type of mistake can lead to memory leaks.

In device code:

const int in_c = inStep / width;

const int out_c = outStep / width;

Don't compute the number of color channels this way. It's inherently broken, because it is not taking into account the number of bytes per color channel. On your output image, at least, that is 2. So this calculation would lead to an out_c value of 2, which is simply wrong for your CV_16UC1 output image. Furthermore, if you need color channels info, OpenCV provides a convenience function for you to retrieve that, it is for example result.channels(). So if you need it, use that in host code, and then pass that value to device code. In our case we are going to force 1 channel on the input, and you have already forced 1 channel on the output, so we will work with that.

const int in_tid = y * inStep (in_c * x);

const int out_tid = y * outStep (out_c * x);

These calculations are also messed up. First, the previously computed channel info is incorrect. But also, these calculations will be used for pointer arithmetic. For the out_tid calculation (at least) the pointer in question is an unsigned short pointer, but the outStep value is reported by OpenCV in bytes. So that will not work for pointer arithmetic. If we assume non-pitched allocations as you are assuming here (which is fine), then we can simply use width to get a proper pointer offset.

Finally, you haven't indicated what GPU you are running on. Make sure your CUDA install is correct and you are compiling for the correct GPU architecture. This is going to be dependent on your OS (looks like you may be on windows) but there are numerous questions covering this already here on the cuda SO tag.

Combining all those ideas, and supplying a picture of the correct type (basically, a png image of 1024x1024 pixels) like this:

We can modify the code like this:

$ cat t33.cu

#include <opencv2/opencv.hpp>

#include <opencv2/core/cuda/common.hpp>

#include <cassert>

__global__ void vectorAdd10(unsigned short* input, unsigned short* output, int width, int height, int inStep, int outStep) {

// Index of current thread

const int x = blockIdx.x * blockDim.x threadIdx.x;

const int y = blockIdx.y * blockDim.y threadIdx.y;

// Only valid threads perform memory I/O

if ((x < width) && (y < height)) {

// Location of pixel

const int in_tid = (height-1-y) * width (width-1-x);

const int out_tid = y * width x;

// flip image across diagonal

output[out_tid] = input[in_tid];

}

}

int main() {

cv::Mat result = cv::Mat(1024, 1024, CV_16UC1);;

cv::Mat input = cv::imread("Frame1.png", CV_16UC1);

assert(input.rows == result.rows);

assert(input.cols == result.cols);

const int inBytes = input.step * input.rows;

const int outBytes = result.step * result.rows;

std::cout << " input rows: " << input.rows << std::endl;

std::cout << " input cols: " << input.cols << std::endl;

std::cout << " input step: " << input.step << std::endl;

std::cout << "input chnls: " << input.channels() << std::endl;

cv::imshow("input", input);

cv::waitKey(3000);

unsigned short* b_input, * b_output;

std::cout << "step 1" << std::endl;

cudaMalloc<unsigned short>(&b_input, inBytes);

cudaMalloc<unsigned short>(&b_output, outBytes);

cout << "step 2" << endl;

cudaMemcpy(b_input, input.ptr(), inBytes, cudaMemcpyHostToDevice);

cout << "step 3" << endl;

// Threadblock size

const dim3 block(16, 16);

const dim3 grid(cv::cuda::device::divUp(input.cols, block.x), cv::cuda::device::divUp(input.rows, block.y));

// Grid size

vectorAdd10 << <grid, block >> > (b_input, b_output, result.cols, result.rows, input.step, result.step);

cout << "step 4" << endl;

cudaSafeCall(cudaDeviceSynchronize());

// Copy sum vector from device to host

cudaSafeCall(cudaMemcpy(result.ptr(), b_output, outBytes, cudaMemcpyDeviceToHost));

cout << "step 5" << endl;

cv::imshow("Result", result);

cv::waitKey(3000);

cout << "step 6" << endl;

cv::imwrite("Result.png", result);

}

$ nvcc -arch=sm_35 -o t33 t33.cu -lopencv_core -lopencv_highgui -lopencv_imgcodecs

nvcc warning : The 'compute_35', 'compute_37', 'compute_50', 'sm_35', 'sm_37' and 'sm_50' architectures are deprecated, and may be removed in a future release (Use -Wno-deprecated-gpu-targets to suppress warning).

$ cuda-memcheck ./t33

========= CUDA-MEMCHECK

input rows: 1024

input cols: 1024

input step: 2048

input chnls: 1

step 1

step 2

step 3

step 4

step 5

step 6

========= ERROR SUMMARY: 0 errors

$

and get sensible (non-black) output:

CodePudding user response:

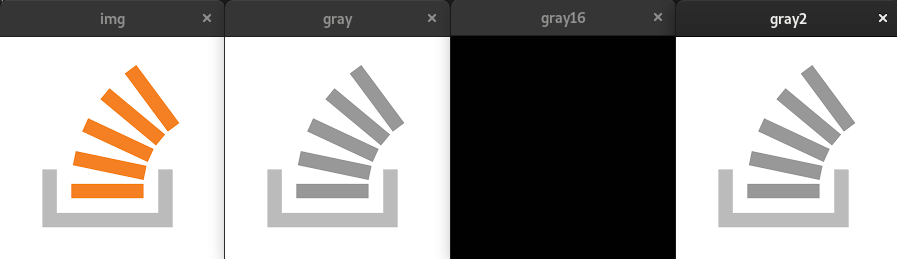

To display an image that is 16 bit grayscale as you are trying to do with cv::imshow first you need to convert the image to 8 bit. cv::imshow cannot display images which are not 8 bit per channel.

Here is a quick test code:

#include <opencv2/opencv.hpp>

int main()

{

cv::Mat img, gray, gray16, gray2;

img = cv::imread("img.png");

cv::cvtColor(img, gray, cv::COLOR_BGR2GRAY);

gray.convertTo(gray16, CV_16UC1);

cv::imshow("img", img);

cv::imshow("gray", gray);

cv::imshow("gray16", gray16);

gray16.convertTo(gray2, CV_8UC1);

cv::imshow("gray2", gray2);

cv::waitKey(0);

return 0;

}

This shows the following result: