I have a dataset like below and I want to estimate the 'Pooled estimate of variance' for this dataset.

This dataset is having two groups of columns (first three columns "A1", "A2", "A3" are one group and next three columns "B1", "B2", "B3" are second group)

structure(list(A1 = c(12305960196.5721, 24169710612.0476, 32972077491.8103,

8553811608.70032, 13176265141.6301, 92994780469.5607), A2 = c(11629654800,

25162283400, 31864546300, 8157173240, 12812379370, 90007498700

), A3 = c(12503938417.8663, 25733015601.0117, 34727094361.2997,

8857104380.18179, 12763602767.3278, 93988723611.341), B1 = c(14380818076.1189,

79582218995.1549, 77615759060.3497, 21749287984.8341, 33342436650.5148,

101254055758.836), B2 = c(21440113143.2507, 78743355495.2545,

81948536416.9992, 23764549283.2733, 34617564902.3219, 99485017820.8478

), B3 = c(20385779423.3852, 80272416055.8845, 77019098847.8474,

23045479130.9574, 32885483296.8046, 90789109337.1181)), row.names = c(NA,

-6L), class = c("tbl_df", "tbl", "data.frame"), na.action = structure(c(`57` = 57L,

`124` = 124L, `125` = 125L, `152` = 152L, `164` = 164L, `167` = 167L,

`168` = 168L, `169` = 169L, `172` = 172L, `174` = 174L, `176` = 176L,

`178` = 178L, `179` = 179L, `180` = 180L, `181` = 181L, `185` = 185L,

`188` = 188L, `190` = 190L, `191` = 191L, `196` = 196L, `197` = 197L,

`199` = 199L, `200` = 200L, `201` = 201L, `202` = 202L, `205` = 205L,

`207` = 207L, `208` = 208L, `210` = 210L, `214` = 214L, `215` = 215L,

`216` = 216L, `217` = 217L, `221` = 221L, `224` = 224L, `226` = 226L,

`227` = 227L, `228` = 228L, `229` = 229L, `231` = 231L, `232` = 232L,

`236` = 236L, `237` = 237L, `239` = 239L, `242` = 242L, `245` = 245L,

`249` = 249L, `253` = 253L, `257` = 257L, `258` = 258L, `273` = 273L,

`274` = 274L, `281` = 281L, `290` = 290L, `317` = 317L, `322` = 322L,

`325` = 325L, `330` = 330L, `336` = 336L, `345` = 345L, `349` = 349L,

`353` = 353L, `354` = 354L, `356` = 356L, `392` = 392L, `403` = 403L,

`404` = 404L, `409` = 409L, `426` = 426L, `434` = 434L, `543` = 543L,

`545` = 545L, `563` = 563L, `574` = 574L, `600` = 600L, `623` = 623L,

`624` = 624L, `632` = 632L, `633` = 633L, `638` = 638L, `642` = 642L,

`663` = 663L, `709` = 709L, `727` = 727L, `746` = 746L, `796` = 796L,

`849` = 849L, `1730` = 1730L), class = "omit"))

Please suggest some R code for calculating pooled estimate of variance.

CodePudding user response:

Maybe you want to use the utilities package with the sample.decomp function like this:

library(utilities)

library(moments)

N <- sapply(df, length)

MEAN <- sapply(df, mean)

VAR <- sapply(df, var)

SKEW <- sapply(df, skewness)

KURT <- sapply(df, kurtosis)

sample.decomp(n = N,

sample.mean = MEAN,

sample.var = VAR,

sample.skew = SKEW,

sample.kurt = KURT,

names = names(df))

#> n sample.mean sample.var sample.skew sample.kurt

#> A 6 30695434253 1.012864e 21 1.48900482 3.629503

#> B 6 29938922635 9.475501e 20 1.46579309 3.593486

#> C 6 31428913190 1.034617e 21 1.44777337 3.548699

#> D 6 54654096088 1.295811e 21 0.08531486 1.341991

#> E 6 56666522844 1.153917e 21 0.08593435 1.253710

#> F 6 54066227682 1.021490e 21 0.01378374 1.145876

#> --pooled-- 36 42908352782 1.078188e 21 0.62178383 1.692347

Created on 2022-07-20 by the reprex package (v2.0.1)

sample.decomp according to documentation:

sample.decomp returns the data-frame of sample statistics for sample groups and their pooled sample

If you want to calculate the Pooled estimate of variance for A and B for example, you can use the following code:

n1 <- length(df$A)

n2 <- length(df$B)

var1 <- var(df$A)

var2 <- var(df$B)

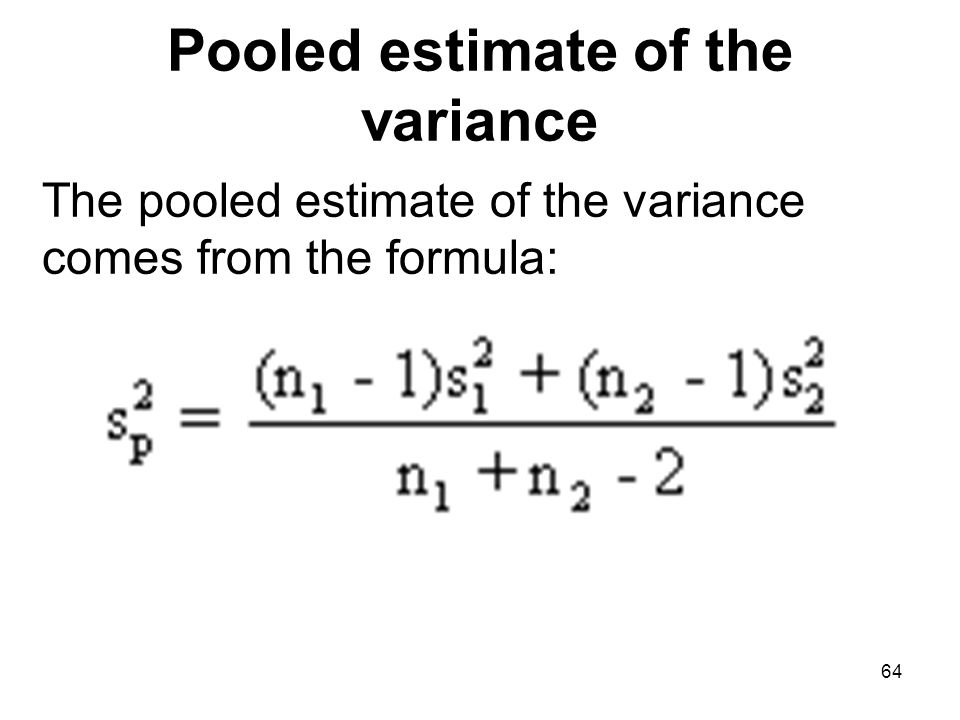

pooled <- ((n1-1)*var1 (n2-1)*var2) / (n1 n2-2)

> pooled

[1] 9.802073e 20

CodePudding user response:

I recommend that you tidy your data, so that each observation has its own row and each column represents a variable.

library(tidyverse)

df <- pivot_longer(df, 1:6)

df <- mutate(df,

group = substr(name,start = 1, stop = 1),

experiment = substr(df$name,start = 2, stop = 2),

.keep = "unused",

.before = 1)

after this, you can use group_by and summarise to achieve the result.

I recommend reading R for data science chapters tidy data and data transformation to understand.