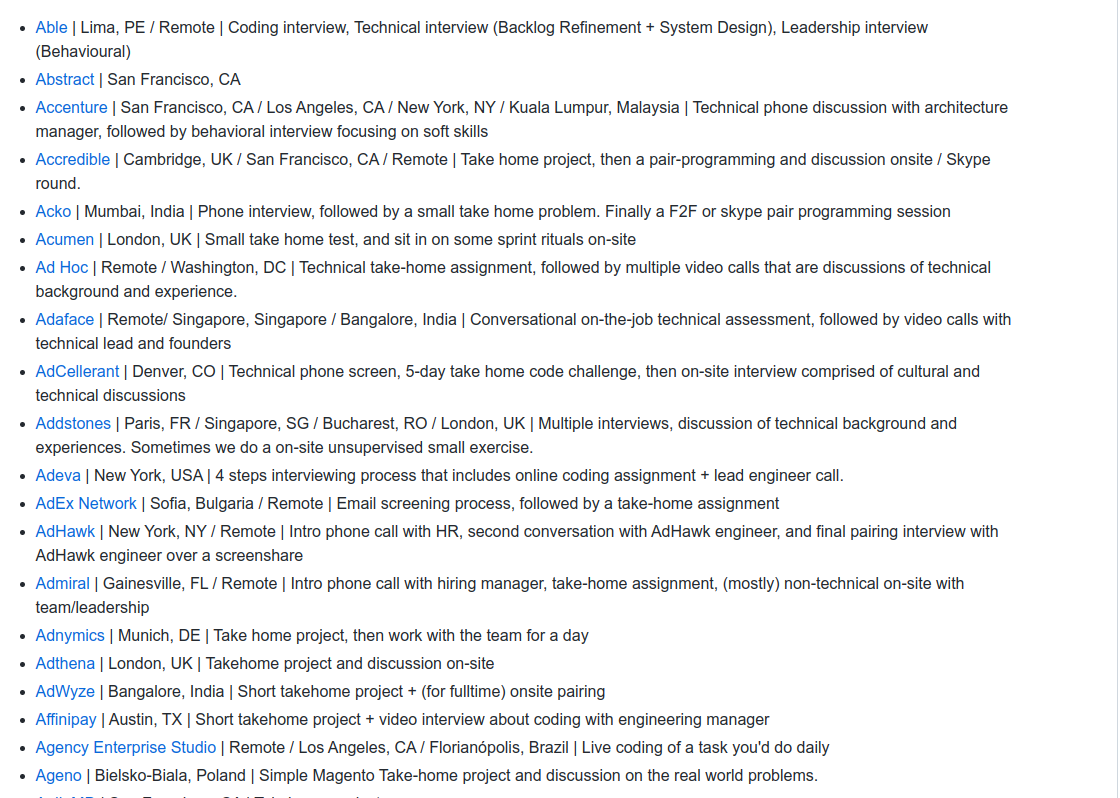

I'm trying to scrape a git .md file. I have made a python scraper but I'm kind of stuck on how to actually get the data I want. The page has a long list of job listings. they are all in separate Li elements. I want to get the A elements. After the A elements, there is just plain text separated by | I want to scrape those as well. I really want this to end up as a CSV file with the A tag as a column, the location text before the | as a column, and the remaining description text as a column.

Here's my code:

from bs4 import BeautifulSoup

import requests

import json

def getLinkData(link):

return requests.get(link).content

content = getLinkData('https://github.com/poteto/hiring-without-whiteboards/blob/master/README.md')

soup = BeautifulSoup(content, 'html.parser')

ul = soup.find_all('ul')

li = soup.find_all("li")

data = []

for uls in ul:

rows = uls.find_all('a')

data.append(rows)

print(data)

When I run this I get the A tags, but obviously not the rest yet. There seem to be a few other ul elements that are included. I just want the one with all the job LIs but the LIs nor the UL have any ids or classes. Any suggestions on how to accomplish what I want? Maybe add Pandas into this(not sure how)

CodePudding user response:

import requests

import pandas as pd

import numpy as np

url = 'https://raw.githubusercontent.com/poteto/hiring-without-whiteboards/master/README.md'

res = requests.get(url).text

jobs = res.split('## A - C\n\n')[1].split('\n\n## Also see')[0]

jobs = [j[3:] for j in jobs.split('\n') if j.startswith('- [')]

df = pd.DataFrame(columns=['Company', 'URL', 'Location', 'Info'])

for i, job in enumerate(jobs):

company, rest = job.split(']', 1)

url, rest = rest[1:].split(')', 1)

rest = rest.split(' | ')

if len(rest) == 3:

_, location, info = rest

else:

_, location = rest

info = np.NaN

df.loc[i, :] = (company, url, location, info)

df.to_csv('file.csv')

print(df.head())

prints

| index | Company | URL | Location | Info |

|---|---|---|---|---|

| 0 | Able | https://able.co/careers | Lima, PE / Remote | Coding interview, Technical interview (Backlog Refinement System Design), Leadership interview (Behavioural) |

| 1 | Abstract | https://angel.co/abstract/jobs | San Francisco, CA | NaN |

| 2 | Accenture | https://www.accenture.com/us-en/careers | San Francisco, CA / Los Angeles, CA / New York, NY / Kuala Lumpur, Malaysia | Technical phone discussion with architecture manager, followed by behavioral interview focusing on soft skills |

| 3 | Accredible | https://www.accredible.com/careers | Cambridge, UK / San Francisco, CA / Remote | Take home project, then a pair-programming and discussion onsite / Skype round. |

| 4 | Acko | https://acko.com | Mumbai, India | Phone interview, followed by a small take home problem. Finally a F2F or skype pair programming session |

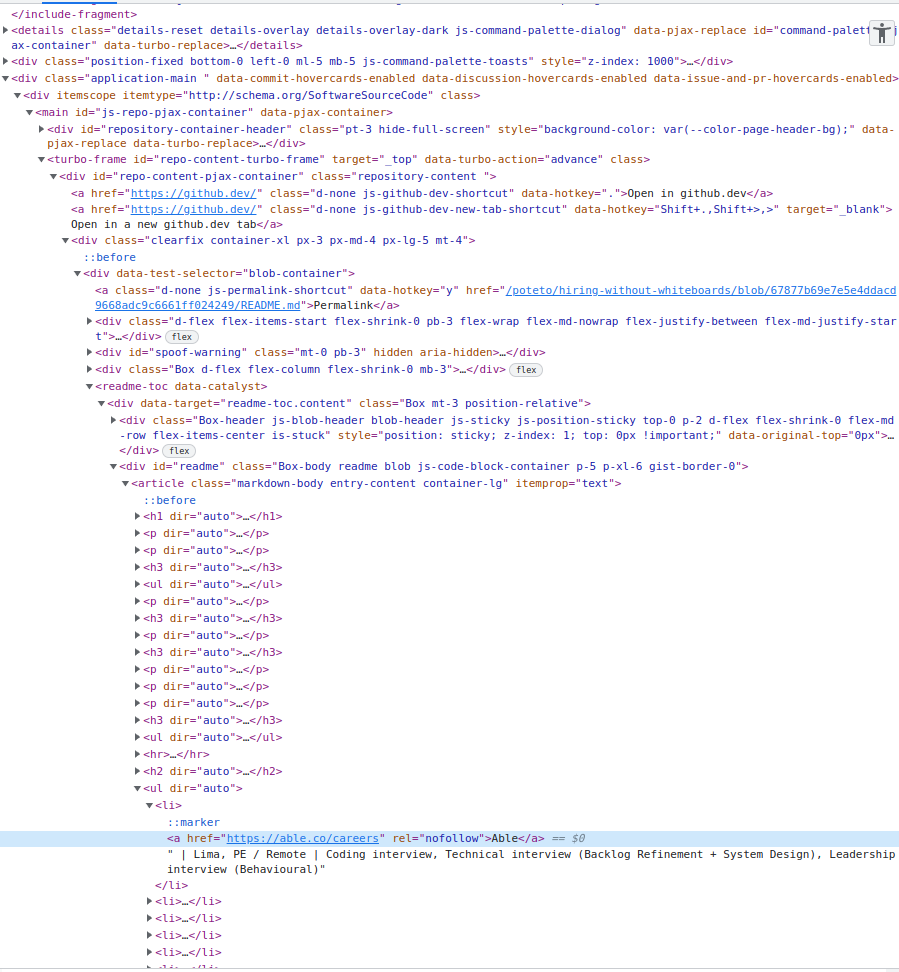

CodePudding user response:

import requests

from bs4 import BeautifulSoup

import pandas as pd

from itertools import chain

def main(url):

r = requests.get(url)

soup = BeautifulSoup(r.text, 'lxml')

goal = list(chain.from_iterable([[(

i['href'],

i.get_text(strip=True),

*(i.next_sibling[3:].split(' | ', 1) if i.next_sibling else ['']*2))

for i in x.select('a')] for x in soup.select(

'h2[dir=auto] ul', limit=9)]))

df = pd.DataFrame(goal)

df.to_csv('data.csv', index=False)

main('https://github.com/poteto/hiring-without-whiteboards/blob/master/README.md')