I have two matrices of column vectors, X = (X_1 ... X_n) and Y = (Y_1 ... Y_n), of the same shape.

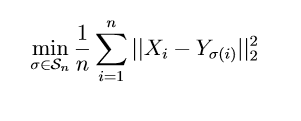

I would like to compute

$$\min_{\sigma \in S_n} \lVert X - Y_\sigma \rVert, \qquad Y_\sigma = (Y_{\sigma(1)} \dots Y_{\sigma(n)}),$$

i.e. the minimal L2 norm between X and Y up to column permutation. Is it possible to do this in less than O(n!) time? Is it already implemented in NumPy, for instance? Thank you in advance.
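For comparison, here is a sketch of the brute-force O(n!) search the question wants to avoid. It assumes X and Y are NumPy arrays of shape (d, n) with the vectors as columns, and that the norm is the Frobenius norm of X − Y_σ (what `np.linalg.norm` computes for a 2-D array by default). `min_norm_brute_force` is a hypothetical helper name. It is only practical for very small n, but it is handy for checking a faster method.

```python
import itertools

import numpy as np


def min_norm_brute_force(X, Y):
    """Hypothetical helper: try every column permutation of Y (O(n!) iterations).

    Assumes X and Y have shape (d, n) with the vectors as columns, and that
    the norm is the Frobenius norm (np.linalg.norm's default for 2-D arrays).
    Returns (best_norm, best_perm) with best_perm a tuple of column indices.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n = X.shape[1]
    best_norm, best_perm = np.inf, None
    for perm in itertools.permutations(range(n)):
        # Column i of Y[:, perm] is Y_{perm[i]}, i.e. Y_sigma with sigma = perm.
        norm = np.linalg.norm(X - Y[:, list(perm)])
        if norm < best_norm:
            best_norm, best_perm = norm, perm
    return best_norm, best_perm
```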

Answer:

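Yes. This is a linear assignment problem, which Hungarian or Jonker-Volgenant type algorithms solve in O(n³) time instead of O(n!). NumPy does not implement it, but SciPy does: apply `scipy.optimize.linear_sum_assignment` to the n × n cost matrix A where A_ij = ‖X_i − Y_j‖². For the Frobenius (entrywise L2) norm, ‖X − Y_σ‖² = Σ_i ‖X_i − Y_σ(i)‖² = Σ_i A_iσ(i), so minimizing the norm over σ means picking one entry in each row and each column of A with the smallest total. With the unsquared distances A_ij = ‖X_i − Y_j‖ the solver minimizes Σ_i ‖X_i − Y_σ(i)‖ instead, which is a different objective in general.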
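A minimal sketch of this approach, assuming X and Y are NumPy arrays of shape (d, n) whose columns are the vectors, and that the norm is the Frobenius norm. The helper name `min_norm_up_to_permutation` is hypothetical; `scipy.spatial.distance.cdist` with `metric="sqeuclidean"` is used here to build the squared-distance cost matrix.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from scipy.spatial.distance import cdist


def min_norm_up_to_permutation(X, Y):
    """Hypothetical helper returning (min_norm, perm).

    Assumes X and Y have shape (d, n) with the vectors as columns and that
    the norm is the Frobenius norm, so np.linalg.norm(X - Y[:, perm]) == min_norm.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    if X.shape != Y.shape:
        raise ValueError("X and Y must have the same shape")
    # cdist compares rows, so transpose to turn each column into a row.
    # A[i, j] = ||X_i - Y_j||^2
    A = cdist(X.T, Y.T, metric="sqeuclidean")
    # For a square A, row_ind == arange(n) and col_ind[i] is the column of Y
    # assigned to X_i in a minimum-total-cost assignment.
    row_ind, col_ind = linear_sum_assignment(A)
    min_norm = np.sqrt(A[row_ind, col_ind].sum())
    return min_norm, col_ind
```

Here `perm[i]` is the index of the column of Y matched to X_i, so `Y[:, perm]` is Y with its columns optimally reordered. Building A costs O(d·n²) and the assignment step O(n³), which is practical for n in the thousands, unlike the n! permutations.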