We are testing the running times of two Lambda functions. Both contain exactly the same code; however, one is deployed as a container image. We are doing this to find out whether there are any significant differences in running time.

The code, which retrieves 550,000 rows of data from an AWS SQL database:

```javascript
// import * as AWS from 'aws-sdk';
const Knex = require('knex');

// AWS.config.update({ region: 'us-east-1' });

// Connection values were hidden in the original post.
const host = '<hidden>';
const user = '<hidden>';
const password = '<hidden>';
const database = '<hidden>';

const connection = {
  ssl: { rejectUnauthorized: false },
  host,
  user,
  password,
  database,
};

// Create the Knex instance (and its connection pool) once at module scope,
// so warm invocations can reuse it.
const knex = Knex({
  client: 'mysql',
  connection,
});

exports.handler = async () => {
  try {
    const limit = 550000;

    const res = await knex('ALM_AGGR_LQ_201231_210111_0600_A048_V3_75_GAI')
      .select()
      .limit(limit);

    console.log('RETRIEVING: ' + limit);

    const response = {
      statusCode: 200,
      // body: JSON.stringify(res),
    };

    return response;
  } catch (err) {
    console.error(err);
    throw err; // rethrow so Lambda records the invocation as failed
  }
};
```
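To compare the two deployments from inside the function, and not only from the Lambda REPORT line, one option is to wrap the query in a small timing helper that also records the process's resident set size (RSS). This is a minimal sketch using only Node.js built-ins (`process.hrtime.bigint`, `process.memoryUsage`); the helper name `measure` is hypothetical, and the RSS it logs is not guaranteed to match the `Max Memory Used` figure Lambda reports.

```javascript
// Hypothetical helper: runs an async function and reports wall-clock time
// plus resident set size (RSS) before and after, using Node.js built-ins only.
async function measure(label, fn) {
  const rssBefore = process.memoryUsage().rss;
  const start = process.hrtime.bigint();
  const result = await fn();
  const elapsedMs = Number(process.hrtime.bigint() - start) / 1e6;
  const rssAfter = process.memoryUsage().rss;
  console.log(
    `${label}: ${elapsedMs.toFixed(0)} ms, ` +
      `RSS ${(rssBefore / 2 ** 20).toFixed(0)} MB -> ${(rssAfter / 2 ** 20).toFixed(0)} MB`
  );
  return { result, elapsedMs, rssBefore, rssAfter };
}

// Usage inside the handler (assumes the `knex` instance and `limit` from the code above):
// const { result: res } = await measure('query', () =>
//   knex('ALM_AGGR_LQ_201231_210111_0600_A048_V3_75_GAI').select().limit(limit));
```

Logging the same label in both the script and the container version gives directly comparable lines in CloudWatch.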

We have configured both versions (script and container) to use 10240 MB; however, for some reason we are noticing that the container does not use all of its memory.

Logs

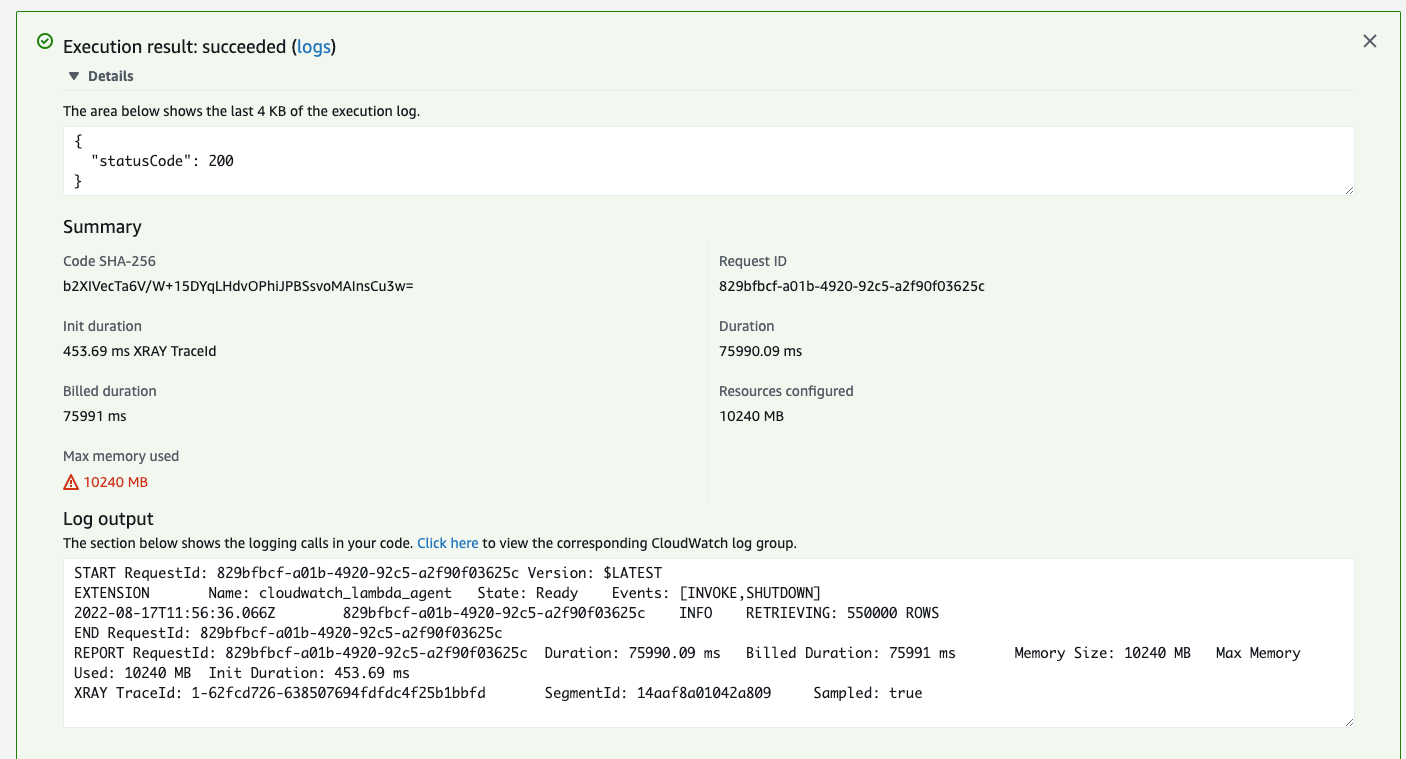

Lambda (not container)

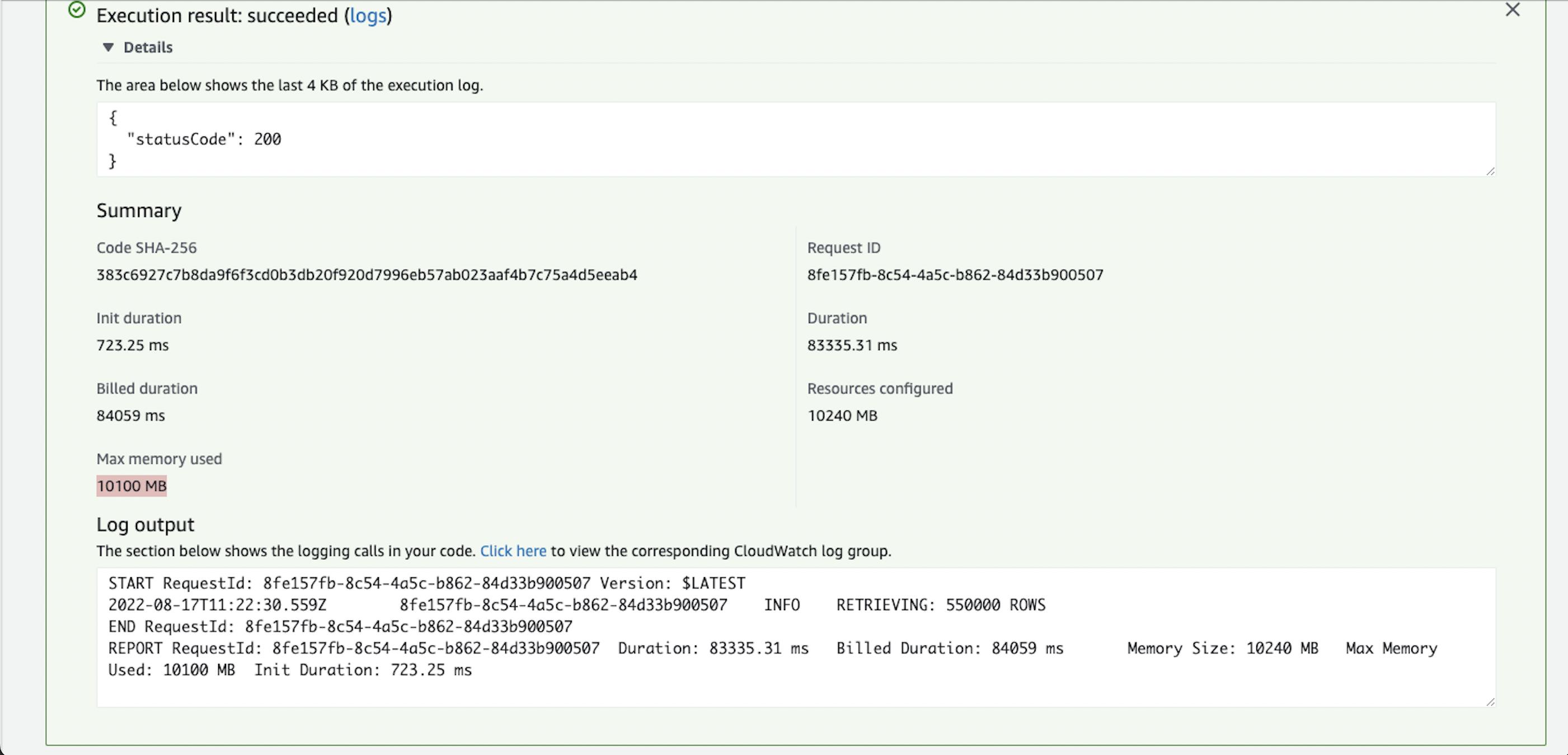

Container

Can anyone explain why the container uses less memory (10097 MB) than the script version, which uses the maximum (10240 MB)? We assume this is the reason the container version takes longer to execute (83 seconds) than the script version (76 seconds).

Answer:

Even when you use the default Lambda setup (running as a "script", as you put it), AWS Lambda still loads a container in the backend; it is just a container optimized for Lambda and for that particular language version.

As for your memory differences, this is why you see them: the default AWS container uses all the available memory because it is set up to take full advantage of the Lambda environment and work with it directly. Remember, too, that the memory setting also directly determines the CPU power your function gets. Lambda allocates CPU in proportion to the configured memory, so more memory means more cores (up to 6 vCPUs at the 10,240 MB maximum).
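One way to check what each deployment actually sees is to log the configured memory size next to what the operating system exposes. This sketch assumes it runs inside Lambda, where the reserved `AWS_LAMBDA_FUNCTION_MEMORY_SIZE` environment variable holds the configured memory in MB; outside Lambda it falls back to `null`. The function name `describeRuntime` is hypothetical, and `os.totalmem()` / `os.cpus()` report what the OS shows the process, which may not map one-to-one onto Lambda's allocation.

```javascript
const os = require('os');

// Hypothetical helper: summarises the resources visible to this process.
// AWS_LAMBDA_FUNCTION_MEMORY_SIZE is set by Lambda to the configured memory (MB);
// os.cpus() lists the logical CPUs the process can see.
function describeRuntime(env = process.env) {
  const configured = env.AWS_LAMBDA_FUNCTION_MEMORY_SIZE;
  return {
    configuredMemoryMb: configured ? Number(configured) : null,
    totalMemoryMb: Math.round(os.totalmem() / 2 ** 20),
    cpuCount: os.cpus().length,
  };
}

console.log(JSON.stringify(describeRuntime()));
```

Running this at the top of the handler in both versions shows whether the container and the script version are given the same CPU count at 10240 MB.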

A few notes:

If you are not using a specific compiler or interpreter for your code, you are far better off sticking with the default AWS images. Unless you want to do something like switching from the standard Python interpreter to PyPy, the AWS default is generally going to be better optimized for Lambda than anything you can build on your own (or at least better than what you could achieve in the time it would take to make those optimizations yourself).

If you use Provisioned Concurrency properly, there will be practically no difference between your container and the script version: once the function is warm and running, invocation times for subsequent calls will probably be about the same. Cold start times, however, will be far worse for your custom container. Every time Lambda has to start a new instance of your function, you will hit a significant cold start delay, which adds up to a real performance cost across a large number of requests.

Note, too, that a given Lambda instance can only handle one invocation at a time. Since your duration is about 75 to 80 seconds, any further calls that arrive during that time require another instance to be spun up. With a custom container, that means a longer cold start, and therefore a longer wait before that instance is warm and can take further invocations with no cold start.
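The effect of the long duration can be estimated with the rule of thumb from the AWS Lambda scaling documentation: concurrency ≈ average requests per second × average duration in seconds. The function below is a hypothetical illustration of that arithmetic; the request rate is made up, and only the 76 s and 83 s durations come from the question.

```javascript
// Rough estimate of concurrent instances needed, since each instance
// serves one invocation at a time:
//   concurrency ≈ requests per second × average duration in seconds
function estimateConcurrency(requestsPerSecond, avgDurationSeconds) {
  if (requestsPerSecond < 0 || avgDurationSeconds < 0) {
    throw new RangeError('inputs must be non-negative');
  }
  return Math.ceil(requestsPerSecond * avgDurationSeconds);
}

// Hypothetical load: one request every 2 seconds.
console.log(estimateConcurrency(0.5, 76)); // 38 (script version)
console.log(estimateConcurrency(0.5, 83)); // 42 (container version)
```

The longer container duration alone raises the number of instances in flight, and each extra instance is another potential cold start.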

As I mentioned, Provisioned Concurrency can help with that, but for long-running functions like this one it becomes expensive to keep that much capacity provisioned. And whenever your invocations spill over beyond your provisioned concurrency, those cold starts will reappear and once again take a major toll on your overall performance.
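Continuing the same back-of-the-envelope arithmetic, invocations beyond the provisioned level are served by on-demand instances, which are the ones that may cold start, while provisioned instances that sit idle are still billed. The helper below is hypothetical and deliberately ignores burst limits and account concurrency quotas; it only splits an estimated concurrency into a provisioned part, a spillover part, and unused provisioned capacity.

```javascript
// Splits an estimated concurrency between provisioned and on-demand instances.
// Anything above the provisioned level spills over to on-demand instances
// (possible cold starts); anything below it leaves provisioned capacity idle.
function splitConcurrency(estimatedConcurrency, provisioned) {
  return {
    provisioned: Math.min(estimatedConcurrency, provisioned),
    spillover: Math.max(0, estimatedConcurrency - provisioned),
    idleProvisioned: Math.max(0, provisioned - estimatedConcurrency),
  };
}

// Hypothetical example: 42 concurrent invocations against 30 provisioned instances.
console.log(splitConcurrency(42, 30)); // { provisioned: 30, spillover: 12, idleProvisioned: 0 }
```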