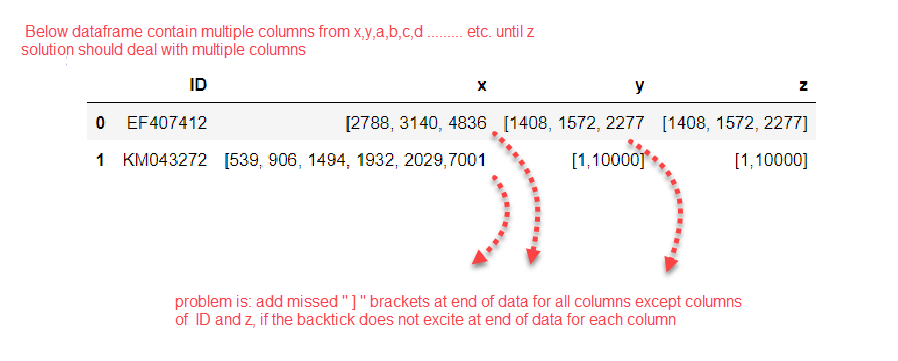

problem is: add missed " ] " brackets at end of data for all columns except columns of ID and z, if the backtick does not excite at end of data for each column as shown below

P.S> dataframe contains multiple columns from x,y,a,b,c,d ......... etc. until z and the solution should deal with multiple columns

dftest = pd.DataFrame({'ID':['EF407412','KM043272']

, 'x': ['[2788, 3140, 4836','[539, 906, 1494, 1932, 2029,7001']

, 'y': ['[1408, 1572, 2277','[1,10000]']

, 'z': ['[1408, 1572, 2277]','[1,10000]']

# df dataframe containes N colemans x,y,z,a,b,c ......etc more than 100 colemans

})

CodePudding user response:

Assuming it is only ID and z columns that you want to skip, try this:

dfcut = dftest[[col for col in dftest if col != 'ID' and col != 'z']]

dfcut = dfcut.applymap(lambda cell: cell ']' if cell[-1] != ']' else cell)

You can then insert newly edited columns to dftest as follows:

dftest[[col for col in dfcut.columns]] = dfcut

CodePudding user response:

With apply and str.replace which IMO is much better performing:

You basically look for the pattern that begins with '[' and has digits and spaces and commas but doesn't end with ']' with a negative look ahead and replace it with the captured value ']'.

dftest.apply(lambda x: x.str.replace(

pat=r'^([[\d\s,] (?!\])$)', repl=lambda m: m.group(1) ']', regex=True))

With applymap (not so performant):

dftest.astype(str).applymap(

lambda x: x ']' if ~(x.endswith(']')) & (x.startswith('[')) else x)

output:

ID x y z

0 EF407412 [2788, 3140, 4836] [1408, 1572, 2277] [1408, 1572, 2277]

1 KM043272 [539, 906, 1494, 1932, 2029,7001] [1,10000] [1,10000]