I have this data:

df = pd.DataFrame({'date': {0: '2012-03-02', 1: '2012-04-01', 2: '2012-04-12', 3: '2012-04-14', 4: '2012-04-21', 5: '2012-05-12', 6: '2012-06-23', 7: '2012-06-25', 8: '2012-06-26', 9: '2012-06-27'},

'type': {0: 'Holiday', 1: 'Other', 2: 'Event', 3: 'Holiday', 4: 'Event', 5: 'Holiday', 6: 'Other', 7: 'Holiday', 8: 'Holiday', 9: 'Event'},

'locale': {0: 'Local', 1: 'Regional', 2: 'National', 3: 'Local', 4: 'National', 5: 'National', 6: 'National', 7: 'Regional', 8: 'Regional', 9: 'Local'}})

And this function:

def preprocess(data_input):

data = data_input.copy()

conditions = [

(data['type'] == 'Holiday') & (data.locale == 'National'),

(data['type'] == 'Holiday') & (data.locale == 'Regional'),

(data['type'] == 'Holiday') & (data.locale == 'Local')

]

values = [3,2,1]

data.loc[:,'Holiday'] = np.select(conditions, values, default=0)

conditions = [

(data['type'] == 'Event') & (data.locale == 'National'),

(data['type'] == 'Event') & (data.locale == 'Regional'),

(data['type'] == 'Event') & (data.locale == 'Local')

]

values = [3,2,1]

data.loc[:,'Events'] = np.select(conditions, values, default=0)

return(data)

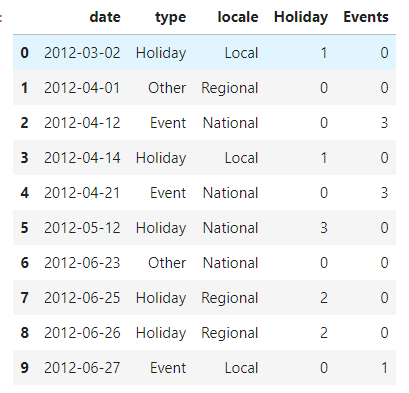

When I run this function over df I will get:

preprocess(df)

Which is the expected result. However, I'm wondering about efficiency and best practices. I feel that both conditions use too many lines of code and that they could be written more elegant and efficiently, but I can't figure out how. I tried with np.where() but I struggled when I found out I would have to filter the data frames in each argument.

Any suggestions?

CodePudding user response:

Overall I think your code looks fine, it's readable and easily understandable. In terms of efficiency, you're using boolean masks for your conditions and not committing any sort of pandas performance anti-pattern that I can see.

One major-ish pandas issue is that you have a redundant call to copy(). This isn't cheap, it results in Python creating a deep copy of your whole dataframe. It's also not necessary, you can just return the modified df rather than making a new copy the whole time and returning it. This is pretty standard style in pandas, it's why pandas operations usually look like df = df.do_a_thing(). The new value of df is returned via do_a_thing() rather than modified in place. (The in_place flag exists for a lot of methods, but it isn't the default for a reason).

One nitpick I'd say is to be explicit when using categorical variables. Your values variable is a categorical variable that's derived conditionally from the dataframe, but you are treating it as a series of integers.

In terms of general software engineering, there is one clear area of improvement that I can see. Your preprocess method is duplicating code for a singular purpose. I have refactored it such that the preprocess method takes the type_column (e.g. 'Holiday' and 'Event') as a parameter, and applies the transformation after that. Now if you have an issue with your preprocess logic, or want to make a change, you'll only need to do it in one area rather than two.

df = pd.DataFrame({'date': {0: '2012-03-02', 1: '2012-04-01', 2: '2012-04-12', 3: '2012-04-14', 4: '2012-04-21', 5: '2012-05-12', 6: '2012-06-23', 7: '2012-06-25', 8: '2012-06-26', 9: '2012-06-27'},

'type': {0: 'Holiday', 1: 'Other', 2: 'Event', 3: 'Holiday', 4: 'Event', 5: 'Holiday', 6: 'Other', 7: 'Holiday', 8: 'Holiday', 9: 'Event'},

'locale': {0: 'Local', 1: 'Regional', 2: 'National', 3: 'Local', 4: 'National', 5: 'National', 6: 'National', 7: 'Regional', 8: 'Regional', 9: 'Local'}})

def preprocess(data, type_column):

conditions = [

(data['type'] == type_column) & (data.locale == 'National'),

(data['type'] == type_column) & (data.locale == 'Regional'),

(data['type'] == type_column) & (data.locale == 'Local')

]

# These values are categorical variables, (i.e. it's dependent on the combination of two columns).

# Better to be explicit about what type they are rather than leaving them as ints.

values = pd.Categorical([3, 2, 1])

data.loc[:, type_column] = np.select(conditions, values, default=0)

return data

df = preprocess(df, 'Holiday')

df = preprocess(df, 'Event')

print(df)

CodePudding user response:

You can try Series.map with Series.where

locale_map = {'National': '3',

'Regional': '2',

'Local': '1'}

df['Holiday'] = df['locale'].map(locale_map).where(df['type'].eq('Holiday'), 0)

df['Events'] = df['locale'].map(locale_map).where(df['type'].eq('Event'), 0)

print(df)

date type locale Holiday Events

0 2012-03-02 Holiday Local 1 0

1 2012-04-01 Other Regional 0 0

2 2012-04-12 Event National 0 3

3 2012-04-14 Holiday Local 1 0

4 2012-04-21 Event National 0 3

5 2012-05-12 Holiday National 3 0

6 2012-06-23 Other National 0 0

7 2012-06-25 Holiday Regional 2 0

8 2012-06-26 Holiday Regional 2 0

9 2012-06-27 Event Local 0 1