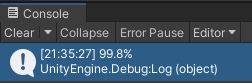

I have the following scenario: a game in Unity where the player is given a varying number of targets (we'll say 125 as an example). Accuracy is multi-class: each target is scored as Perfect (bullseye), Great, Good, or Miss (the target is missed entirely and no points are awarded). I'm trying to find the right way to calculate an accuracy percentage in this scenario. If the player hits all 125 targets as Perfect, the accuracy would be 100%. If they hit 124 Perfect and 1 Great, the accuracy percentage should still drop (99.8%), even though every target was hit. What would be the correct way to calculate this? Balanced accuracy? Weighted accuracy? Precision?

I'd like to understand the underlying calculation, not just how to implement this in code.

I appreciate any help I can get with this.

CodePudding user response:

This can be calculated by assigning each accuracy tier a score between 0 and 100 (a percentage) and then calculating the average score or
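
To make the averaging idea concrete, here is a minimal sketch of the arithmetic, not a definitive implementation. It is written in Python only for brevity; the same few lines should port directly to a Unity script. The tier scores are assumptions: Great = 75 is chosen because it reproduces the 99.8% figure from the question, and Good = 50 is a placeholder. The names `TIER_SCORES` and `accuracy_percent` are hypothetical.

```python
# Hypothetical per-tier scores in percent (assumptions, not from the question,
# except that Great = 75 reproduces the 99.8% example).
TIER_SCORES = {"Perfect": 100.0, "Great": 75.0, "Good": 50.0, "Miss": 0.0}


def accuracy_percent(counts):
    """Return the average per-target score in percent.

    `counts` maps a tier name to the number of targets that landed in it.
    Misses count as targets with a score of 0, so they lower the average.
    """
    total_targets = sum(counts.values())
    if total_targets == 0:
        return 0.0  # no targets yet; avoid dividing by zero
    earned = sum(TIER_SCORES[tier] * n for tier, n in counts.items())
    return earned / total_targets


# 125 Perfect hits: (125 * 100) / 125
all_perfect = accuracy_percent({"Perfect": 125})

# 124 Perfect and 1 Great: (124 * 100 + 1 * 75) / 125
one_great = accuracy_percent({"Perfect": 124, "Great": 1})

print(all_perfect, one_great)
```

In other words, the result is a weighted average: the points earned divided by the maximum points available (number of targets times 100). Changing the tier scores changes how harshly a Great or Good is penalised, so those values are a design decision rather than something the formula dictates.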