I have an ECS cluster in which some services have Task Placement constraints. However, some seem to work while others don't.

I want a specific service to launch only on ECS instances that have specific attributes: in this case, task==relay and ecs.instance-type==t2.micro.

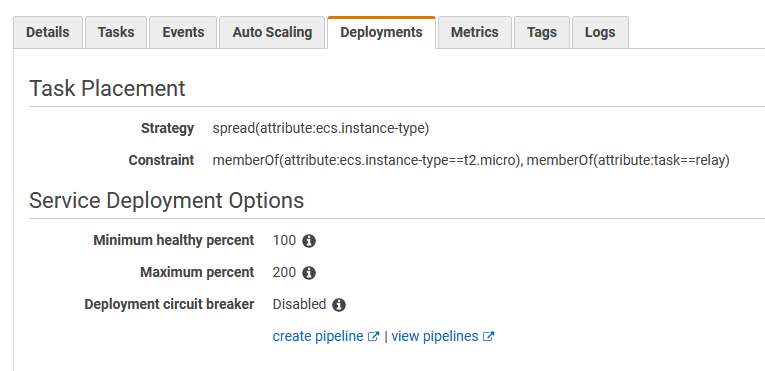

My task placement looks like this:

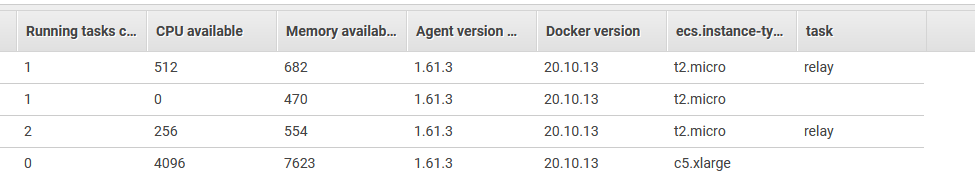

And my ECS registered instances look like this:

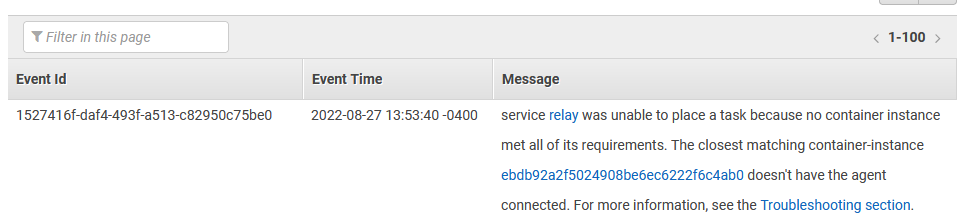

However, when I try to run two tasks of that service, one gets placed on its appropriate instance, while ECS tries to place the other on an instance that satisfies neither of those two constraints, giving the following error (that instance is in a warm pool, doesn't have the agent activated, and is not a t2.micro).

I want both tasks to run on the same t2.micro instance, which has 512 CPU and 682 memory available. The task size is 512 CPU units and 300 MB of memory, so two should fit on the same t2.micro, unless I'm overlooking something. Even if they didn't fit, I would expect the error to say that the micro instance (which satisfies the constraints) doesn't have enough resources, not that ECS tried to run the task on a totally different instance, correct?
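As a quick sanity check of that arithmetic, here is a minimal sketch. It assumes the 512 CPU / 682 memory figures are the capacity currently free on the instance, and the helper name `tasks_that_fit` is purely illustrative. The second call assumes the full 1024 CPU units of a 1 vCPU instance are free.

```python
def tasks_that_fit(free_cpu, free_mem, task_cpu, task_mem):
    """Number of identical tasks that fit, limited by the scarcer resource."""
    return min(free_cpu // task_cpu, free_mem // task_mem)


# Figures from the question, read as currently free capacity (assumption).
fit_now = tasks_that_fit(512, 682, 512, 300)
print(fit_now)  # 1

# Same check if all 1024 CPU units of a 1 vCPU instance were free (assumption).
fit_empty = tasks_that_fit(1024, 682, 512, 300)
print(fit_empty)  # 2
```

Under the first reading CPU is the limiting resource: two 512-unit tasks need 1024 CPU units in total, so only one more task fits if just 512 are free.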

Thank you

CodePudding user response:

This is caused by your service's task placement strategy: the strategy and the constraint disagree with each other. The strategy currently defined tells ECS to spread your tasks evenly across the available instance types, while the constraint requires the attribute task to equal relay and the instance type to be t2.micro. ECS places one task according to the constraint, then tries to spread the next task with respect to instance type. Since the constraint restricts that, it is unable to place the second task for you.

The fix is to use a binpack placement strategy on CPU, which leaves the least amount of unused CPU while also minimising the number of container instances in use. Refer to the ECS task placement documentation for more detail.
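For illustration, here is a rough sketch of what the placement settings could look like when built as plain Python data. The `placementConstraints` and `placementStrategy` shapes follow the ECS API, but the helper `build_placement` is hypothetical, and the attribute name and values are taken from the question rather than from a real cluster. Nothing here calls AWS.

```python
import json


def build_placement(task_attr, instance_type):
    """Build ECS placement settings: one memberOf constraint plus binpack on CPU."""
    # Cluster query language expression combining both attributes from the question.
    expression = (
        f"attribute:task == {task_attr} "
        f"and attribute:ecs.instance-type == {instance_type}"
    )
    return {
        "placementConstraints": [{"type": "memberOf", "expression": expression}],
        # binpack on cpu fills one instance before moving to another.
        "placementStrategy": [{"type": "binpack", "field": "cpu"}],
    }


placement = build_placement("relay", "t2.micro")
print(json.dumps(placement, indent=2))
```

If you manage the service with boto3, these two keys should be usable as keyword arguments to `create_service`, for example `ecs.create_service(..., **placement)`; treat that as an untested assumption for your setup.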