How to export each article into a separate Word file using BeautifulSoup?

I am trying to scrape all the articles and export each one into its own Word file.

For example, if there are 1000 articles, I want 1000 Word files.

Here is my code:

    import time

    import requests
    from bs4 import BeautifulSoup

    URL = "https://trumpwhitehouse.archives.gov/remarks/page/"

    # Loop over all 199 listing pages (the end of range() is exclusive)
    for i in range(1, 200):
        r = requests.get(URL + str(i))
        soup = BeautifulSoup(r.content, "lxml")

        # Collect the article links from the headlines on this listing page
        links = []
        for news in soup.find_all("h2", class_="briefing-statement__title"):
            links.append(news.a["href"])

        # Follow each link and extract the article text
        for link in links:
            page = requests.get(link)
            sp = BeautifulSoup(page.text, "lxml")
            article = sp.find("div", class_="page-content").find_all("p")
            for d in article:
                print(d.get_text())

            # Sleep for 3 seconds between requests to avoid being blocked
            time.sleep(3)

CodePudding user response:

Wrap the code that writes each article to its text file in a try-except block. If an exception occurs, you can print a custom message or simply print the link that failed.

    import os

    import requests
    from bs4 import BeautifulSoup

    # Create the output folder (no error if it already exists) and work inside it
    os.makedirs("US_DATA", exist_ok=True)
    os.chdir("US_DATA")
    path = os.getcwd()

    URL = "https://trumpwhitehouse.archives.gov/remarks/page/"

    # Loop over all 199 listing pages (the end of range() is exclusive)
    for i in range(1, 200):
        r = requests.get(URL + str(i))
        soup = BeautifulSoup(r.content, "lxml")

        # Collect the article links from the headlines on this listing page
        links = []
        for news in soup.find_all("h2", class_="briefing-statement__title"):
            links.append(news.find("a")["href"])

        # Follow each link and write the article text to its own file
        for link in links:
            page = requests.get(link)
            sp = BeautifulSoup(page.text, "lxml")
            article = sp.find("div", class_="page-content").find_all("p")
            try:
                # Name the file after the last URL segment (assumes a trailing slash)
                filename = os.path.join(path, link.split("/")[-2] + ".txt")
                with open(filename, "a", encoding="utf-8") as data:
                    for d in article:
                        data.write(d.get_text() + "\n")
            except Exception:
                # Print the link that failed so it can be retried later
                print(link)

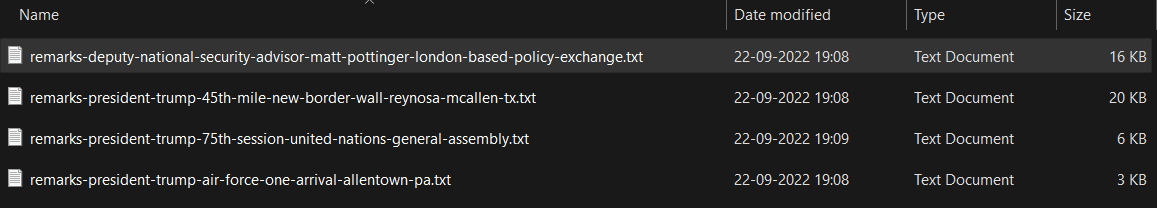

Output: (Local Folder):
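Since the question asks for Word files and the code above writes `.txt` files, here is a minimal sketch of one way to save each article as a `.docx` instead. It assumes the third-party `python-docx` package is installed (`pip install python-docx`). The helper names `docx_name` and `save_article` are hypothetical and not part of the original answer, and the URL in the comment is a placeholder.

```python
import os
from urllib.parse import urlparse


def docx_name(link):
    """Build a .docx file name from the last non-empty path segment of a URL."""
    segments = [s for s in urlparse(link).path.split("/") if s]
    slug = segments[-1] if segments else "article"
    return slug + ".docx"


def save_article(paragraphs, link, folder="."):
    """Write a list of paragraph strings to one Word file and return its path."""
    # Imported here so docx_name() can be used without python-docx installed.
    from docx import Document

    doc = Document()
    for text in paragraphs:
        doc.add_paragraph(text)
    out_path = os.path.join(folder, docx_name(link))
    doc.save(out_path)
    return out_path


# Placeholder URL, for illustration only
print(docx_name("https://example.com/remarks/some-title/"))  # some-title.docx
```

Inside the `for link in links:` loop, the body of the `try` block could then become `save_article([d.get_text() for d in article], link, path)`, which produces one Word file per article. Unlike appending to a text file, this writes each file in one step, so re-running the script overwrites a file rather than duplicating its contents.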