Code for reproducing the problem:

```
go version go1.19 darwin/amd64
```

```go
package main

import (
	"bytes"
	"log"
	"net/http"
	_ "net/http/pprof"
	"runtime"
	"strconv"
	"sync"
)

type TestStruct struct {
	M []byte
}

var lkMap sync.Mutex
var tMap map[string]*TestStruct

const objectCount = 1024 * 100

func main() {
	tMap = make(map[string]*TestStruct)
	wg := &sync.WaitGroup{}

	wg.Add(objectCount)
	func1(wg)

	wg.Add(objectCount)
	func2(wg)

	wg.Wait()
	runtime.GC()
	log.Println(http.ListenAndServe("localhost:6060", nil))
}

//go:noinline
func func1(wg *sync.WaitGroup) {
	ts := []*TestStruct{}
	for i := 0; i < objectCount; i++ {
		t := &TestStruct{}
		ts = append(ts, t)
		lkMap.Lock()
		tMap[strconv.Itoa(i)] = t
		lkMap.Unlock()
	}
	for i, t := range ts {
		go func(t *TestStruct, idx int) {
			t.M = bytes.Repeat([]byte{byte(32)}, 1024)
			lkMap.Lock()
			delete(tMap, strconv.Itoa(idx))
			lkMap.Unlock()
			wg.Done()
		}(t, i) // pass the pointer and the index as arguments
	}
}

//go:noinline
func func2(wg *sync.WaitGroup) {
	ts := []*TestStruct{}
	for i := 0; i < objectCount; i++ {
		t := &TestStruct{}
		ts = append(ts, t)
		lkMap.Lock()
		tMap[strconv.Itoa(i+objectCount)] = t
		lkMap.Unlock()
	}
	for i, t := range ts {
		tmp := t // capture here
		idx := i
		go func() {
			tmp.M = bytes.Repeat([]byte{byte(32)}, 1024)
			lkMap.Lock()
			delete(tMap, strconv.Itoa(idx+objectCount))
			lkMap.Unlock()
			wg.Done()
		}()
	}
}
```

Run this program and using pprof to fetch the heap using the command line:

go tool pprof http://localhost:6060/debug/pprof/heap

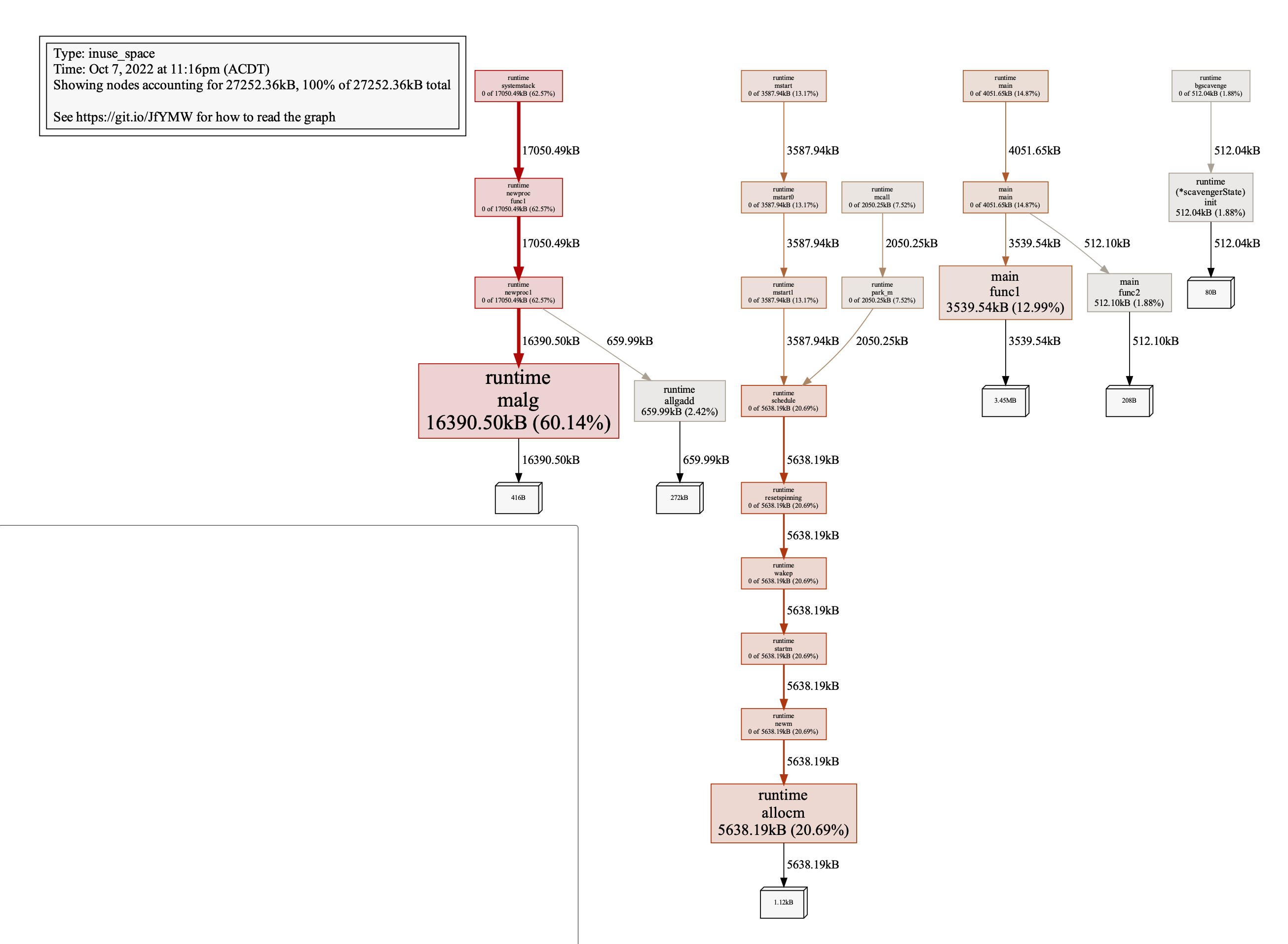

will produce the diagram like this:

As you can see, func1 is clearly leaking. The only difference between func1 and func2 is that func2 copies the loop variables into local variables before the closure captures them, while func1 passes them to the goroutine as arguments.

However, if I remove the global map, both functions are fine: neither one leaks.

So does this have something to do with manipulating the global map?

Thank you for any input.

Edit:

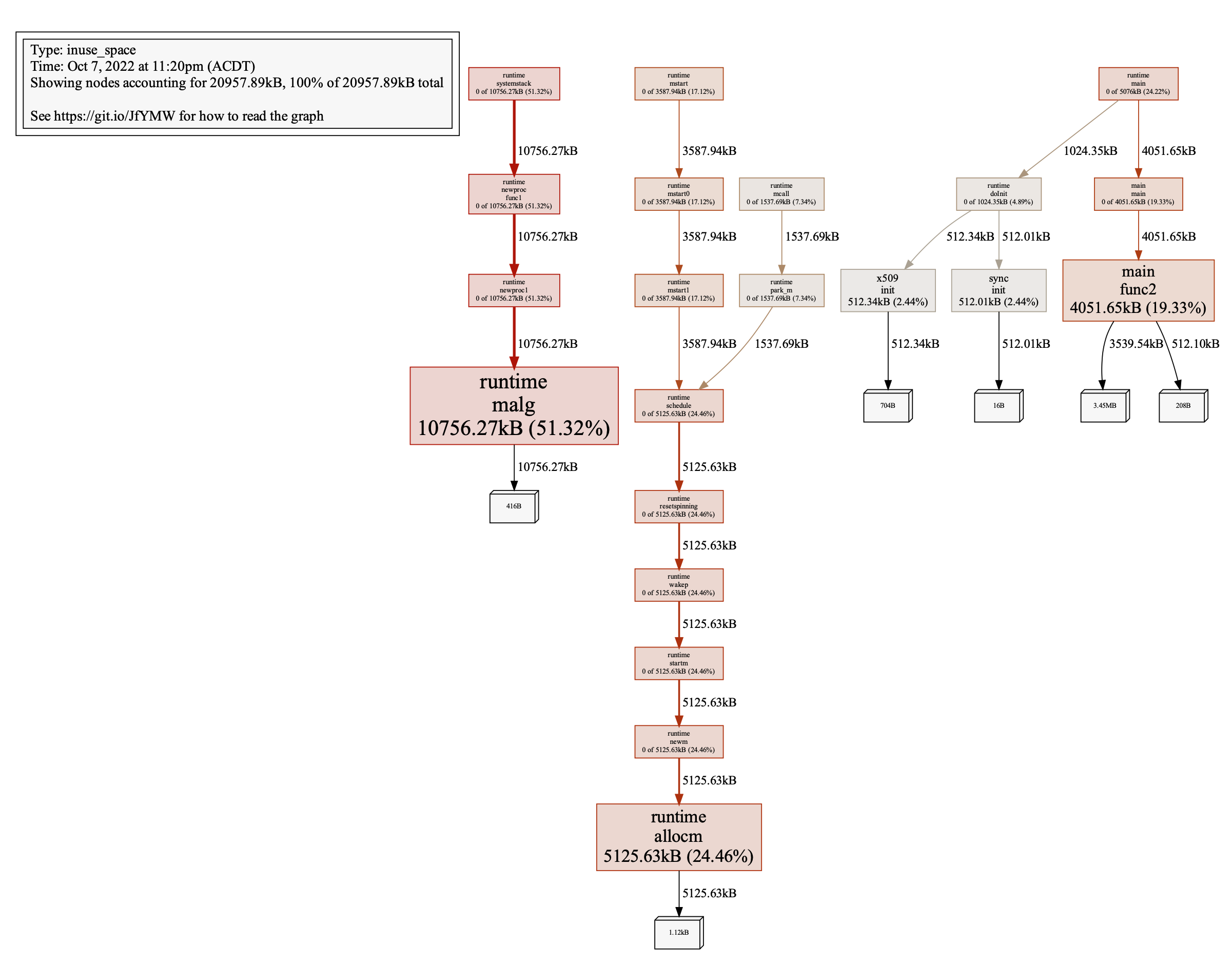

Thanks @nipuna for pointing out that changing the order of func1 and func2 in main function will make func2 show in the report. However func1 is still hoarding the most memory which is 51.32% as func2 is retaining 19.33%
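The two capture styles in func1 and func2 behave the same way: in both, each goroutine ends up with its own copy of the index (and pointer), so neither style should keep anything alive after its goroutine finishes. A minimal sketch that checks this with plain integers; the helper names `viaParams`, `viaLocalCopy` and `recorder` are made up for illustration. (For modules that declare Go 1.22 or later, loop variables are per-iteration anyway, so the explicit copy is no longer needed there.)

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// recorder collects values reported by goroutines.
type recorder struct {
	mu   sync.Mutex
	vals []int
}

func (r *recorder) add(v int) {
	r.mu.Lock()
	r.vals = append(r.vals, v)
	r.mu.Unlock()
}

// sorted must only be called after all goroutines have finished.
func (r *recorder) sorted() []int {
	sort.Ints(r.vals)
	return r.vals
}

// viaParams mirrors func1: the loop value is passed as a goroutine argument.
func viaParams(n int) []int {
	var wg sync.WaitGroup
	r := &recorder{}
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(idx int) {
			defer wg.Done()
			r.add(idx)
		}(i)
	}
	wg.Wait()
	return r.sorted()
}

// viaLocalCopy mirrors func2: the loop value is copied into a variable
// declared inside the loop body, and the closure captures that variable.
func viaLocalCopy(n int) []int {
	var wg sync.WaitGroup
	r := &recorder{}
	for i := 0; i < n; i++ {
		idx := i // a new variable on every iteration
		wg.Add(1)
		go func() {
			defer wg.Done()
			r.add(idx)
		}()
	}
	wg.Wait()
	return r.sorted()
}

func main() {
	fmt.Println(viaParams(5))    // [0 1 2 3 4]
	fmt.Println(viaLocalCopy(5)) // [0 1 2 3 4]
}
```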

Answer:

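I don't think profiler diagrams are strong witnesses in a memory-leak trial.

The Go runtime can tell you much more about its memory allocation than the profiler can: the heap profile is built from sampled allocations, whereas `runtime.ReadMemStats` reports the runtime's own heap statistics. Try `runtime.ReadMemStats` to print heap readings.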

Here is my experiment: https://go.dev/play/p/279du2yB3BZ

```go
// Reuses TestStruct, tMap, lkMap, objectCount, func1 and func2 from the question.
// Imports: bytes, fmt, runtime, strconv, sync.

func printAllocInfo(message string) {
	var memStats runtime.MemStats
	runtime.ReadMemStats(&memStats)
	fmt.Println(message,
		"Heap Alloc:", memStats.HeapAlloc,
		"Heap objects:", memStats.HeapObjects)
}

func main() {
	tMap = make(map[string]*TestStruct)
	wg := &sync.WaitGroup{}

	for i := 0; i < 10; i++ {
		wg.Add(objectCount)
		printAllocInfo("Before func1:")
		func1(wg)
		wg.Wait()
		runtime.GC()
		printAllocInfo("After func1: ")
	}
	fmt.Println("----------------------------")

	for i := 0; i < 10; i++ {
		wg.Add(objectCount)
		printAllocInfo("Before func2:")
		func2(wg)
		wg.Wait()
		runtime.GC()
		printAllocInfo("After func2: ")
	}
	fmt.Println("----------------------------")

	runtime.GC()
	printAllocInfo("after GC: ")
	fmt.Println("----------------------------")
}
```

The output is:

```
Before func1: Heap Alloc: 260880 Heap objects: 1224
After func1: Heap Alloc: 1317904 Heap objects: 2749
Before func1: Heap Alloc: 1319288 Heap objects: 2757
After func1: Heap Alloc: 1576656 Heap objects: 3425
Before func1: Heap Alloc: 1578040 Heap objects: 3433
After func1: Heap Alloc: 1858408 Heap objects: 4197
Before func1: Heap Alloc: 1859792 Heap objects: 4205
After func1: Heap Alloc: 2210760 Heap objects: 4909
Before func1: Heap Alloc: 2212144 Heap objects: 4917
After func1: Heap Alloc: 2213200 Heap objects: 4799
Before func1: Heap Alloc: 2214584 Heap objects: 4807
After func1: Heap Alloc: 2247480 Heap objects: 5045
Before func1: Heap Alloc: 2248864 Heap objects: 5053
After func1: Heap Alloc: 2298840 Heap objects: 5158
Before func1: Heap Alloc: 2300224 Heap objects: 5166
After func1: Heap Alloc: 2297144 Heap objects: 5047
Before func1: Heap Alloc: 2298528 Heap objects: 5055
After func1: Heap Alloc: 2316712 Heap objects: 5154
Before func1: Heap Alloc: 2318096 Heap objects: 5162
After func1: Heap Alloc: 3083256 Heap objects: 7208
----------------------------
Before func2: Heap Alloc: 3084640 Heap objects: 7216
After func2: Heap Alloc: 3075816 Heap objects: 7057
Before func2: Heap Alloc: 3077200 Heap objects: 7065
After func2: Heap Alloc: 3102896 Heap objects: 7270
Before func2: Heap Alloc: 3104280 Heap objects: 7278
After func2: Heap Alloc: 3127728 Heap objects: 7461
Before func2: Heap Alloc: 3129112 Heap objects: 7469
After func2: Heap Alloc: 3128352 Heap objects: 7401
Before func2: Heap Alloc: 3129736 Heap objects: 7409
After func2: Heap Alloc: 3121152 Heap objects: 7263
Before func2: Heap Alloc: 3122536 Heap objects: 7271
After func2: Heap Alloc: 3167872 Heap objects: 7703
Before func2: Heap Alloc: 3169256 Heap objects: 7711
After func2: Heap Alloc: 3163120 Heap objects: 7595
Before func2: Heap Alloc: 3164504 Heap objects: 7603
After func2: Heap Alloc: 3157376 Heap objects: 7470
Before func2: Heap Alloc: 3158760 Heap objects: 7478
After func2: Heap Alloc: 3160496 Heap objects: 7450
Before func2: Heap Alloc: 3161880 Heap objects: 7458
After func2: Heap Alloc: 3221024 Heap objects: 7754
----------------------------
after GC: Heap Alloc: 3222200 Heap objects: 7750
----------------------------
```

It looks like you are right: with every iteration, the number of objects in the heap keeps growing.

But what if we swap func1 and func2? Let's call func2 before func1: https://go.dev/play/p/ABFq1O11bIl

```
Before func2: Heap Alloc: 261456 Heap objects: 1227
After func2: Heap Alloc: 1330584 Heap objects: 2529
Before func2: Heap Alloc: 1331968 Heap objects: 2537
After func2: Heap Alloc: 1739280 Heap objects: 3755
Before func2: Heap Alloc: 1740664 Heap objects: 3763
After func2: Heap Alloc: 2251824 Heap objects: 5017
Before func2: Heap Alloc: 2253208 Heap objects: 5025
After func2: Heap Alloc: 2244400 Heap objects: 4802
Before func2: Heap Alloc: 2245784 Heap objects: 4810
After func2: Heap Alloc: 2287816 Heap objects: 5135
Before func2: Heap Alloc: 2289200 Heap objects: 5143
After func2: Heap Alloc: 2726136 Heap objects: 6375
Before func2: Heap Alloc: 2727520 Heap objects: 6383
After func2: Heap Alloc: 2834520 Heap objects: 6276
Before func2: Heap Alloc: 2835904 Heap objects: 6284
After func2: Heap Alloc: 2855496 Heap objects: 6393
Before func2: Heap Alloc: 2856880 Heap objects: 6401
After func2: Heap Alloc: 2873064 Heap objects: 6478
Before func2: Heap Alloc: 2874448 Heap objects: 6486
After func2: Heap Alloc: 2923560 Heap objects: 6913
----------------------------
Before func1: Heap Alloc: 2924944 Heap objects: 6921
After func1: Heap Alloc: 2933416 Heap objects: 6934
Before func1: Heap Alloc: 2934800 Heap objects: 6942
After func1: Heap Alloc: 2916520 Heap objects: 6676
Before func1: Heap Alloc: 2917904 Heap objects: 6684
After func1: Heap Alloc: 2941816 Heap objects: 6864
Before func1: Heap Alloc: 2943200 Heap objects: 6872
After func1: Heap Alloc: 2968184 Heap objects: 7078
Before func1: Heap Alloc: 2969568 Heap objects: 7086
After func1: Heap Alloc: 2955056 Heap objects: 6885
Before func1: Heap Alloc: 2956440 Heap objects: 6893
After func1: Heap Alloc: 2961056 Heap objects: 6893
Before func1: Heap Alloc: 2962440 Heap objects: 6901
After func1: Heap Alloc: 2967680 Heap objects: 6903
Before func1: Heap Alloc: 2969064 Heap objects: 6911
After func1: Heap Alloc: 3005856 Heap objects: 7266
Before func1: Heap Alloc: 3007240 Heap objects: 7274
After func1: Heap Alloc: 3033696 Heap objects: 7514
Before func1: Heap Alloc: 3035080 Heap objects: 7522
After func1: Heap Alloc: 3028432 Heap objects: 7423
----------------------------
after GC: Heap Alloc: 3029608 Heap objects: 7419
----------------------------
```

When func2 runs first, its heap grows almost as fast as func1's did, and func1, now running second, behaves just like func2 did in the first run: it adds only about 500 objects to the heap.

From these two experiments, I can't agree that func1 leaks while func2 does not. I'd say they leak to the same degree.

Answer:

Thanks to the answer from the golang-nuts group, quoted here:

> I don't think it's related with for-range loops, both should "leak" the same, I bet that's tMap, currently in Go maps do not shrink, even if you delete keys. One way to reduce memory usage of a long-living map is to at some threshold copy over all its elements into a new map, and set the old map to this copy, then the old map, if not referenced elsewhere, will eventually be gc'ed and cleaned up.

So the root cause is the long-lived map.
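A minimal, self-contained experiment (separate from the question's program) that isolates this effect: fill a map with 200,000 entries, delete all of them, and compare the live heap while the empty map is still referenced with the live heap after the map becomes unreachable. On the Go versions I am aware of, the first number should be noticeably larger because deleting keys does not free the map's internal storage; exact numbers vary by Go version and platform, so no expected output is given. This may also explain why the heap profile points at one function: a heap profile attributes live memory to the call stack that allocated it, and the map's storage is allocated by the map assignments in whichever function grows the map.

```go
package main

import (
	"fmt"
	"runtime"
	"strconv"
)

// TestStruct mirrors the type from the question.
type TestStruct struct {
	M []byte
}

// liveHeap forces a GC and returns the bytes held by reachable heap objects.
func liveHeap() uint64 {
	runtime.GC()
	var ms runtime.MemStats
	runtime.ReadMemStats(&ms)
	return ms.HeapAlloc
}

// measure fills a map with n entries, deletes all of them, and reports the
// live heap twice: while the (now empty) map is still referenced, and after
// the map itself has become unreachable.
func measure(n int) (emptied, dropped uint64) {
	m := make(map[string]*TestStruct)
	for i := 0; i < n; i++ {
		m[strconv.Itoa(i)] = &TestStruct{}
	}
	for i := 0; i < n; i++ {
		delete(m, strconv.Itoa(i))
	}
	emptied = liveHeap()
	runtime.KeepAlive(m) // keep the empty map reachable during the first measurement
	m = nil              // drop the only reference; the map's storage can now be freed
	dropped = liveHeap()
	return emptied, dropped
}

func main() {
	emptied, dropped := measure(200_000)
	fmt.Println("live heap, emptied map still referenced:", emptied)
	fmt.Println("live heap, map unreachable:             ", dropped)
	fmt.Println("retained by the empty map (approx.):    ", int64(emptied)-int64(dropped))
}
```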

I also found the related Go issue: https://github.com/golang/go/issues/20135