Before I start: I know there are a lot of questions with the same error, but none of them solved the issue for me.

I have a PPO implementation that plays the CarRacing-v2 environment from gym (gym==0.26.0, tensorflow==2.10.0). To make it faster, I moved part of the training code into a separate function and wrapped it with `tf.function`. However, moving that code into a different function caused an error. Apart from the move itself, the code is unchanged.

This is the working code I had before.

```python
import numpy as np
import tensorflow as tf
import tensorflow_probability as tfp
from tensorflow import keras

def learn(self):
    for epoch in range(self.n_epochs):
        # print(f"{epoch = }")
        state_arr, action_arr, old_prob_arr, vals_arr,\
            reward_arr, dones_arr, batches = \
            self.memory.generate_batches()

        values = vals_arr
        advantage = np.zeros(len(reward_arr), dtype=np.float32)
        # print("a_t")
        # advantage[t] = sum over k >= t of (gamma*lambda)^(k-t) * delta_k
        for t in range(len(reward_arr)-1):
            discount = 1
            a_t = 0
            for k in range(t, len(reward_arr)-1):
                a_t += discount*(reward_arr[k] + self.gamma*values[k+1] * (
                    1-int(dones_arr[k])) - values[k])
                discount *= self.gamma*self.gae_lambda
            advantage[t] = a_t

        for batch in batches:
            # do this into a tf function
            # print("batch")
            with tf.GradientTape(persistent=True) as tape:
                states = tf.convert_to_tensor(state_arr[batch])
                old_probs = tf.convert_to_tensor(old_prob_arr[batch])
                actions = tf.convert_to_tensor(action_arr[batch])

                probs = self.actor(states)
                # pass probs by keyword: the first positional argument of Categorical is logits
                dist = tfp.distributions.Categorical(probs=probs)
                new_probs = dist.log_prob(actions)

                critic_value = self.critic(states)
                critic_value = tf.squeeze(critic_value, 1)

                prob_ratio = tf.math.exp(new_probs - old_probs)
                weighted_probs = advantage[batch] * prob_ratio
                clipped_probs = tf.clip_by_value(prob_ratio,
                                                 1-self.policy_clip,
                                                 1+self.policy_clip)
                weighted_clipped_probs = clipped_probs * advantage[batch]
                actor_loss = -tf.math.minimum(weighted_probs,
                                              weighted_clipped_probs)
                actor_loss = tf.math.reduce_mean(actor_loss)

                returns = advantage[batch] + values[batch]
                # critic_loss = tf.math.reduce_mean(tf.math.pow(
                #     returns-critic_value, 2))
                critic_loss = keras.losses.MSE(critic_value, returns)

            actor_params = self.actor.trainable_variables
            actor_grads = tape.gradient(actor_loss, actor_params)
            critic_params = self.critic.trainable_variables
            critic_grads = tape.gradient(critic_loss, critic_params)
            self.actor.optimizer.apply_gradients(
                zip(actor_grads, actor_params))
            self.critic.optimizer.apply_gradients(
                zip(critic_grads, critic_params))

    self.memory.clear_memory()
```
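Since the goal is speed, note that the nested advantage loop in `learn` is quadratic in the number of stored steps. A minimal NumPy sketch, assuming 1-D arrays of rewards, values and dones, computes the same numbers in one backward pass using the recursion A_t = delta_t + gamma*lambda*A_{t+1}. The names `gae_quadratic` and `gae_linear` are hypothetical; the first is just the loop from `learn` lifted out of the class so the two can be compared. Like the original loop, the linear version only masks the bootstrapped value with `dones` and leaves the last entry at zero.

```python
import numpy as np


def gae_quadratic(rewards, values, dones, gamma, lam):
    """The nested-loop advantage computation from learn(), O(n^2)."""
    n = len(rewards)
    advantage = np.zeros(n, dtype=np.float32)
    for t in range(n - 1):
        discount, a_t = 1.0, 0.0
        for k in range(t, n - 1):
            a_t += discount * (rewards[k] + gamma * values[k + 1]
                               * (1 - int(dones[k])) - values[k])
            discount *= gamma * lam
        advantage[t] = a_t
    return advantage


def gae_linear(rewards, values, dones, gamma, lam):
    """Same values via A_t = delta_t + gamma*lam*A_{t+1}, O(n)."""
    n = len(rewards)
    advantage = np.zeros(n, dtype=np.float32)
    running = 0.0
    for t in range(n - 2, -1, -1):  # last entry stays 0, as in the loop
        delta = rewards[t] + gamma * values[t + 1] * (1 - int(dones[t])) - values[t]
        running = delta + gamma * lam * running
        advantage[t] = running
    return advantage


# Compare both versions on made-up data.
rng = np.random.default_rng(0)
rewards = rng.normal(size=50)
values = rng.normal(size=50)
dones = rng.random(50) < 0.1
slow = gae_quadratic(rewards, values, dones, gamma=0.99, lam=0.95)
fast = gae_linear(rewards, values, dones, gamma=0.99, lam=0.95)
print(np.allclose(slow, fast, atol=1e-5))  # True
```

This part runs in plain NumPy before any `tf.function` is involved, so it can stay outside `do_batch`.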

And this is the code split into two functions, which is where the error occurs:

```python
def learn(self):
    for epoch in range(self.n_epochs):
        # print(f"{epoch = }")
        state_arr, action_arr, old_prob_arr, vals_arr,\
            reward_arr, dones_arr, batches = \
            self.memory.generate_batches()

        values = vals_arr
        advantage = np.zeros(len(reward_arr), dtype=np.float32)
        # print("a_t")
        for t in range(len(reward_arr)-1):
            discount = 1
            a_t = 0
            for k in range(t, len(reward_arr)-1):
                a_t += discount*(reward_arr[k] + self.gamma*values[k+1] * (
                    1-int(dones_arr[k])) - values[k])
                discount *= self.gamma*self.gae_lambda
            advantage[t] = a_t

        for batch in batches:
            self.do_batch(state_arr, old_prob_arr, action_arr, batch, advantage, values)

    self.memory.clear_memory()

@tf.function
def do_batch(self, state_arr, old_prob_arr, action_arr, batch, advantage, values):
    with tf.GradientTape(persistent=True) as tape:
        states = tf.convert_to_tensor(state_arr[batch])
        old_probs = tf.convert_to_tensor(old_prob_arr[batch])
        actions = tf.convert_to_tensor(action_arr[batch])

        probs = self.actor(states)
        # pass probs by keyword: the first positional argument of Categorical is logits
        dist = tfp.distributions.Categorical(probs=probs)
        new_probs = dist.log_prob(actions)

        critic_value = self.critic(states)
        critic_value = tf.squeeze(critic_value, 1)

        prob_ratio = tf.math.exp(new_probs - old_probs)
        weighted_probs = advantage[batch] * prob_ratio
        clipped_probs = tf.clip_by_value(prob_ratio,
                                         1-self.policy_clip,
                                         1+self.policy_clip)
        weighted_clipped_probs = clipped_probs * advantage[batch]
        actor_loss = -tf.math.minimum(weighted_probs,
                                      weighted_clipped_probs)
        actor_loss = tf.math.reduce_mean(actor_loss)

        returns = advantage[batch] + values[batch]
        # critic_loss = tf.math.reduce_mean(tf.math.pow(
        #     returns-critic_value, 2))
        critic_loss = keras.losses.MSE(critic_value, returns)

    actor_params = self.actor.trainable_variables
    actor_grads = tape.gradient(actor_loss, actor_params)
    critic_params = self.critic.trainable_variables
    critic_grads = tape.gradient(critic_loss, critic_params)
    self.actor.optimizer.apply_gradients(
        zip(actor_grads, actor_params))
    self.critic.optimizer.apply_gradients(
        zip(critic_grads, critic_params))
```

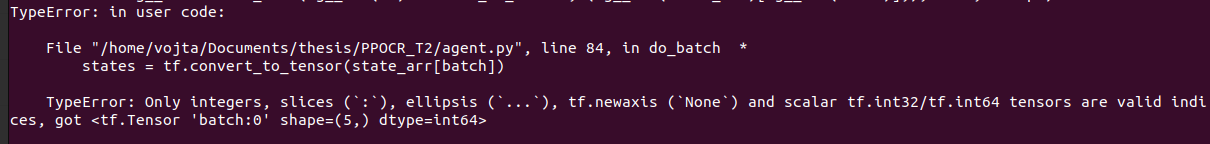

The issue seems to be that `batch` is a tensor inside `do_batch`, even though my code passes it a plain NumPy index array (one element of the `batches` list built here):

```python
def generate_batches(self):
    n_states = len(self.states)
    batch_start = np.arange(0, n_states, self.batch_size)
    indices = np.arange(n_states, dtype=np.int64)
    np.random.shuffle(indices)
    batches = [indices[i:i+self.batch_size] for i in batch_start]

    return np.array(self.states),\
        np.array(self.actions),\
        np.array(self.probs),\
        np.array(self.vals),\
        np.array(self.rewards),\
        np.array(self.dones),\
        batches
```
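To see exactly what `do_batch` receives, here is a minimal sketch of the index logic of `generate_batches` as a standalone function. `make_batches` is a hypothetical name, and it uses a seeded `np.random.Generator` instead of the global `np.random.shuffle` only so the output is reproducible. Each batch is a NumPy `int64` array rather than a Python list, and the last batch is shorter when the number of states is not a multiple of the batch size; a batch with a different shape makes `tf.function` trace the function again.

```python
import numpy as np


def make_batches(n_states, batch_size, rng=None):
    """Hypothetical standalone version of generate_batches' index logic."""
    rng = np.random.default_rng() if rng is None else rng
    batch_start = np.arange(0, n_states, batch_size)
    indices = np.arange(n_states, dtype=np.int64)
    rng.shuffle(indices)  # in-place shuffle of the index array
    return [indices[i:i + batch_size] for i in batch_start]


batches = make_batches(10, 4, np.random.default_rng(0))
print([type(b).__name__ for b in batches])  # ['ndarray', 'ndarray', 'ndarray']
print([len(b) for b in batches])            # [4, 4, 2]
```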

Answer:

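Inside a function decorated with `tf.function`, NumPy array arguments (here `state_arr`, `batch`, `advantage` and the rest) are converted into tensors. A tensor cannot be indexed with another tensor using the familiar NumPy syntax `a[b]`. To index a tensor `a` along one particular axis using another tensor `b`, use `tf.gather(a, b)` instead.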
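A minimal reproduction of this behaviour, using made-up arrays `arr` and `idx` instead of the PPO data (the function names are hypothetical): the bracket version fails while tracing because a rank-1 tensor is not a valid index for a tensor, while `tf.gather` returns the selected rows.

```python
import numpy as np
import tensorflow as tf


@tf.function
def index_with_brackets(arr, idx):
    return arr[idx]  # NumPy-style fancy indexing on a tensor


@tf.function
def index_with_gather(arr, idx):
    return tf.gather(arr, idx, axis=0)  # select rows idx along axis 0


arr = np.arange(12, dtype=np.float32).reshape(6, 2)
idx = np.array([4, 0, 2], dtype=np.int64)

try:
    index_with_brackets(arr, idx)
    bracket_failed = False
except TypeError:
    # arr and idx arrive as tensors inside tf.function, and
    # Tensor.__getitem__ rejects a rank-1 tensor as an index
    bracket_failed = True

rows = index_with_gather(arr, idx).numpy()  # rows 4, 0 and 2 of arr
print(bracket_failed)  # True
```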

Concretely, try the following modifications in your `do_batch` function:

- `state_arr[batch]` -> `tf.gather(state_arr, batch, axis=0)`
- `old_prob_arr[batch]` -> `tf.gather(old_prob_arr, batch, axis=0)`
- `action_arr[batch]` -> `tf.gather(action_arr, batch, axis=0)`
- `advantage[batch]` -> `tf.gather(advantage, batch, axis=0)`
- `values[batch]` -> `tf.gather(values, batch, axis=0)`
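Putting those substitutions together, a self-contained sketch of `do_batch` could look like the following. It is written as a free function, with hypothetical tiny stand-ins (`actor`, `critic`, `actor_opt`, `critic_opt`, `OBS_DIM`, `N_ACTIONS`, `POLICY_CLIP`) for your models, optimizers and hyperparameters. To avoid a `tensorflow_probability` dependency, it takes the log of each row's probability for the taken action, which should match `tfp.distributions.Categorical(probs=probs).log_prob(actions)`, and it uses a plain mean squared error, which equals `keras.losses.MSE` for 1-D inputs. These swaps are assumptions of the sketch, not part of the answer above.

```python
import numpy as np
import tensorflow as tf

# Hypothetical stand-ins for self.actor, self.critic, their optimizers and
# self.policy_clip; replace them with your own objects.
OBS_DIM, N_ACTIONS, POLICY_CLIP = 4, 3, 0.2
actor = tf.keras.Sequential([
    tf.keras.Input(shape=(OBS_DIM,)),
    tf.keras.layers.Dense(N_ACTIONS, activation="softmax"),
])
critic = tf.keras.Sequential([
    tf.keras.Input(shape=(OBS_DIM,)),
    tf.keras.layers.Dense(1),
])
actor_opt = tf.keras.optimizers.Adam(1e-3)
critic_opt = tf.keras.optimizers.Adam(1e-3)


@tf.function
def do_batch(state_arr, old_prob_arr, action_arr, batch, advantage, values):
    # Every argument is a tensor here, so select rows with tf.gather, not [].
    states = tf.gather(state_arr, batch, axis=0)
    old_probs = tf.gather(old_prob_arr, batch, axis=0)
    actions = tf.gather(action_arr, batch, axis=0)
    adv = tf.gather(advantage, batch, axis=0)
    returns = adv + tf.gather(values, batch, axis=0)

    with tf.GradientTape(persistent=True) as tape:
        probs = actor(states)
        # log pi(a|s): pick each row's probability of its taken action
        new_probs = tf.math.log(tf.gather(probs, actions, axis=1, batch_dims=1))
        critic_value = tf.squeeze(critic(states), 1)

        prob_ratio = tf.math.exp(new_probs - old_probs)
        clipped = tf.clip_by_value(prob_ratio, 1 - POLICY_CLIP, 1 + POLICY_CLIP)
        actor_loss = -tf.reduce_mean(tf.minimum(adv * prob_ratio, adv * clipped))
        critic_loss = tf.reduce_mean(tf.square(returns - critic_value))

    actor_grads = tape.gradient(actor_loss, actor.trainable_variables)
    critic_grads = tape.gradient(critic_loss, critic.trainable_variables)
    actor_opt.apply_gradients(zip(actor_grads, actor.trainable_variables))
    critic_opt.apply_gradients(zip(critic_grads, critic.trainable_variables))
    return actor_loss, critic_loss


# Usage with made-up data shaped like the arrays generate_batches returns.
rng = np.random.default_rng(0)
n = 10
state_arr = rng.normal(size=(n, OBS_DIM)).astype(np.float32)
action_arr = rng.integers(0, N_ACTIONS, size=n)  # int64 action indices
old_prob_arr = np.full(n, np.log(1.0 / N_ACTIONS), dtype=np.float32)
advantage = rng.normal(size=n).astype(np.float32)
values = rng.normal(size=n).astype(np.float32)
batch = np.array([0, 3, 5, 7], dtype=np.int64)

actor_loss, critic_loss = do_batch(state_arr, old_prob_arr, action_arr,
                                   batch, advantage, values)
```

The gathers are done before the tape is opened because they only select data; gradients flow through the model calls, which stay inside the tape.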