I am trying to use broadcast feature of numpy on my large data. I have list columns that can have hundreds of elements in many rows. I need to filter rows based on presence of columns value in the list column. If number in col_a is present in col_b, I need to filter IN that row.

Sample data:

import pandas as pd

import numpy as np

dt = pd.DataFrame({'id' : ['a', 'a', 'a', 'b', 'b'],

'col_a': [[1],[2],[5],[1],[2]],

'col_b': [[2],[2,4],[2,5,7],[4],[3,2]],

})

dt

id col_a col_b

0 a [1] [2]

1 a [2] [2, 4]

2 a [5] [2, 5, 7]

3 b [1] [4]

4 b [2] [3, 2]

I tried below code to add dimension to col_b and check if the value is present in col_a:

(dt['col_a'] == dt['col_b'][:,None]).any(axis = 1)

but I get below error:

ValueError: ('Shapes must match', (5,), (5, 1))

Could someone please let me know what's the correct approach.

CodePudding user response:

import pandas as pd

import numpy as np

from itertools import product

Parse out columns based on the commas:

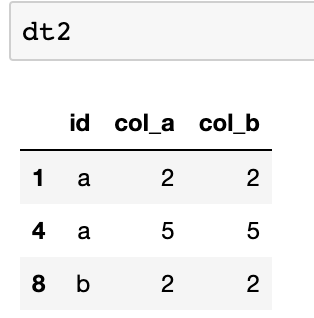

dt2 = pd.DataFrame([j for i in dt.values for j in product(*i)], columns=dt.columns)

Filter to where col_a equals col_b:

dt2 = dt2[dt2['col_a'] == dt2['col_b']]

Results in:

CodePudding user response:

I think you've been told that `numpy` "vectorization" is the key to speeding up your code, but you don't have a good grasp of what this. It isn't something magical that you can apply to any `pandas` task. It's just "shorthand" for making full use of `numpy` array methods, which means, actually learning `numpy`.

But let's explore your task:

In [205]: dt = pd.DataFrame({'id' : ['a', 'a', 'a', 'b', 'b'],

...: 'col_a': [[1],[2],[5],[1],[2]],

...: 'col_b': [[2],[2,4],[2,5,7],[4],[3,2]],

...: })

In [206]: dt

Out[206]:

id col_a col_b

0 a [1] [2]

1 a [2] [2, 4]

2 a [5] [2, 5, 7]

3 b [1] [4]

4 b [2] [3, 2]

In [207]: dt.dtypes

Out[207]:

id object

col_a object

col_b object

dtype: object

Because the columns contain lists, their `dtype` is object; they have references to lists.

Doing things like `==` on columns, pandas `Series` is not the same as doing things with the arrays of their values.

But to focus on the numpy aspect, lets get numpy arrays:

In [208]: a = dt['col_a'].to_numpy()

In [209]: b = dt['col_b'].to_numpy()

In [210]: a

Out[210]:

array([list([1]), list([2]), list([5]), list([1]), list([2])],

dtype=object)

In [211]: b

Out[211]:

array([list([2]), list([2, 4]), list([2, 5, 7]), list([4]), list([3, 2])],

dtype=object)

The fast `numpy` operations use compiled code, and, for the most part, only work with numeric dtypes. Arrays like this, containing references to lists, are basically the same as lists. Math, and other operations like equalty, operate at list comprehension speeds. That may be faster than pandas speeds, but no where like the highly vaunted "vectorized" numpy speeds.

So lets to a list comprehension on the elements of these lists. This is a lot like pandas `apply`, though I think it's faster (pandas apply is notoriously slow).

In [212]: [i in j for i,j in zip(a,b)]

Out[212]: [False, False, False, False, False]

Oops, not matches - must be because `i` from `a` is a list. Let's extract that number:

In [213]: [i[0] in j for i,j in zip(a,b)]

Out[213]: [False, True, True, False, True]

Making col_a contain lists instead of numbers does not help you.

Since `a` and `b` are arrays, we can use `==`, but that essentially the same operation as [212] (timeit is slightly better):

In [214]: a==b

Out[214]: array([False, False, False, False, False])

We could make `b` into a (5,1) array, but why?

In [215]: b[:,None]

Out[215]:

array([[list([2])],

[list([2, 4])],

[list([2, 5, 7])],

[list([4])],

[list([3, 2])]], dtype=object)

What I think you were trying to imitate an array comparison like this, broadcasting a (5,) against a (3,1) to produce a (3,5) truth table:

In [216]: x = np.arange(5); y = np.array([3,5,1])

In [217]: x==y[:,None]

Out[217]:

array([[False, False, False, True, False],

[False, False, False, False, False],

[False, True, False, False, False]])

In [218]: (x==y[:,None]).any(axis=1)

Out[218]: array([ True, False, True])

`isin` can do the same sort of comparision:

In [219]: np.isin(x,y)

Out[219]: array([False, True, False, True, False])

In [220]: np.isin(y,x)

Out[220]: array([ True, False, True])

While this works for numbers, it does not work for the arrays of lists, especially not your case where you want to test the lists in `a` against the corresponding list in `b`. You aren't testing all of `a` against all of `b`.

Since the lists in `a` are all the same size, we can join them into one number array:

In [225]: np.hstack(a)

Out[225]: array([1, 2, 5, 1, 2])

We cannot do the same for b because the lists very in size. As a general rule, when you have lists (or arrays) that vary in size, you cannot do the fast numeric numpy math and comparisons.

We could test (5,) a against (5,1) b, producing a (5,5) truth table:

In [227]: a==b[:,None]

Out[227]:

array([[False, True, False, False, True],

[False, False, False, False, False],

[False, False, False, False, False],

[False, False, False, False, False],

[False, False, False, False, False]])

But that is True for a couple of cells in the first row; that's where the list([2]) from b matches the same list in a.