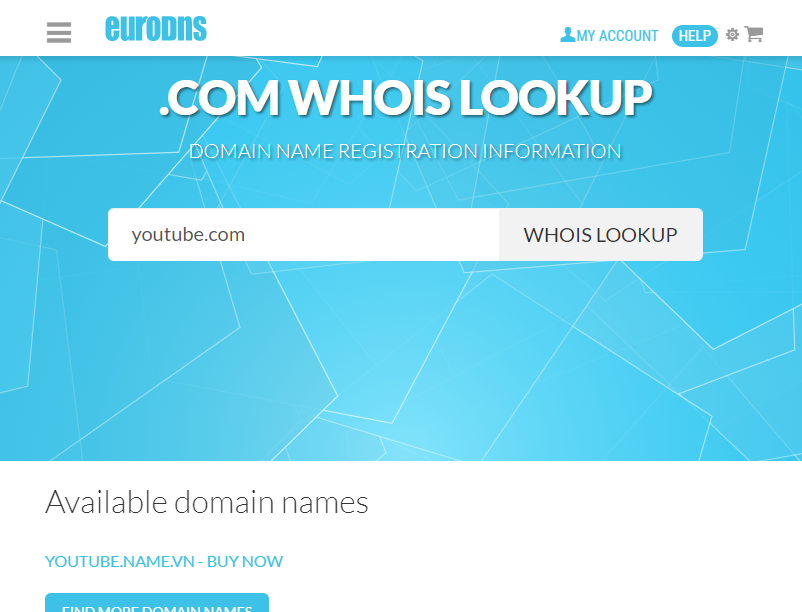

So there is this code that i want to try. if a website exists it outputs available domain names. i used this website

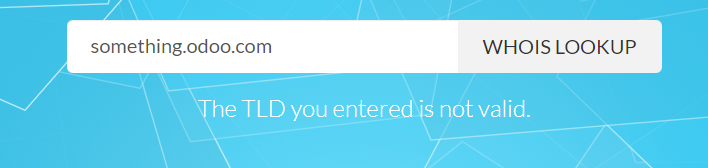

If the website does not exist, currently parked, or registered it says this.

The code that i'm thinking involves selenium and chrome driver input the text and search it up.

from selenium import webdriver

from webdriver_manager.chrome import ChromeDriverManager

cli = ['https://youtube.com', 'https://google.com', 'https://minecraft.net', 'https://something.odoo.com']

Exists = []

for i in cli:

driver.get("https://www.eurodns.com/whois-search/app-domain-name")

Name = driver.find_element(By.CSS_SELECTOR, "input[name='whoisDomainName']")

Name.send_keys(cli)

driver.find_element(By.XPATH,/html/body/div/div[3]/div/div[2]/form/div/div/div/button).click()

Is there a way where for example if website available, exist.append(cli), elif web not valid, print('Not valid') so that it can filter out a website that exists and the website that does not. i was thinking of using beautifulsoup to get outputs, but i'm not sure how to use it properly.

Thank you!

CodePudding user response:

There is no need to use other libraries.

Rather than using XPATHs like that, because it may change the structure of the page. Always try to search for elements by ID, if it exists associated with that particular element (which by their nature should be unique on the page) or by class name (if it appears to be unique) or by name attribute.

Some notes on the algorithm:

We can visit the homepage once and then submit the url from time to time. Thus we save execution time.

Whenever we submit a url, we simply need to verify that that url does not exist (or that it does)

Name the variables in a more conversational/descriptive way.

Pay attention to sending too many requests quickly to the site. It may block you. Perhaps this is not the right approach for your task? Are there no APIs that can be used for such services?

Your code becomes:

from selenium import webdriver

from webdriver_manager.chrome import ChromeDriverManager

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.common.exceptions import TimeoutException

import time

opts = Options()

# make web scraping 'invisible' if GUI is not required

opts.add_argument("--headless")

opts.add_argument('--no-sandbox')

user_agent = "user-agent=[Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.63 Safari/537.36]"

opts.add_argument(user_agent)

driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()), options=opts)

urls = ['https://youtube.com', 'https://google.com', 'https://minecraft.net', 'https://something.odoo.com']

exists = []

driver.get("https://www.eurodns.com/whois-search/app-domain-name")

for url in urls:

# send url to textarea

textarea = driver.find_element(By.NAME, "whoisDomainName")

textarea.clear() # make sure to clear textarea

textarea.send_keys(url)

# click 'WHOIS LOOKUP' button

driver.find_element(By.ID, "submitBasic").click()

# try to find error message (wait 3 sec)

try:

WebDriverWait(driver, 3).until(EC.presence_of_element_located((By.CLASS_NAME, 'whoisSearchError')))

print(f'URL {url} is not valid')

except TimeoutException:

print(f'URL {url} is valid')

exists.append(url)

time.sleep(30) # wait 30 seconds to avoid '429 too many requests'

print(f"\nURLs that exist:\n", exists)

Output will be:

URL https://youtube.com is valid

URL https://google.com is valid

URL https://minecraft.net is valid

URL https://something.odoo.com is not valid

URLs that exist:

['https://youtube.com', 'https://google.com', 'https://minecraft.net']