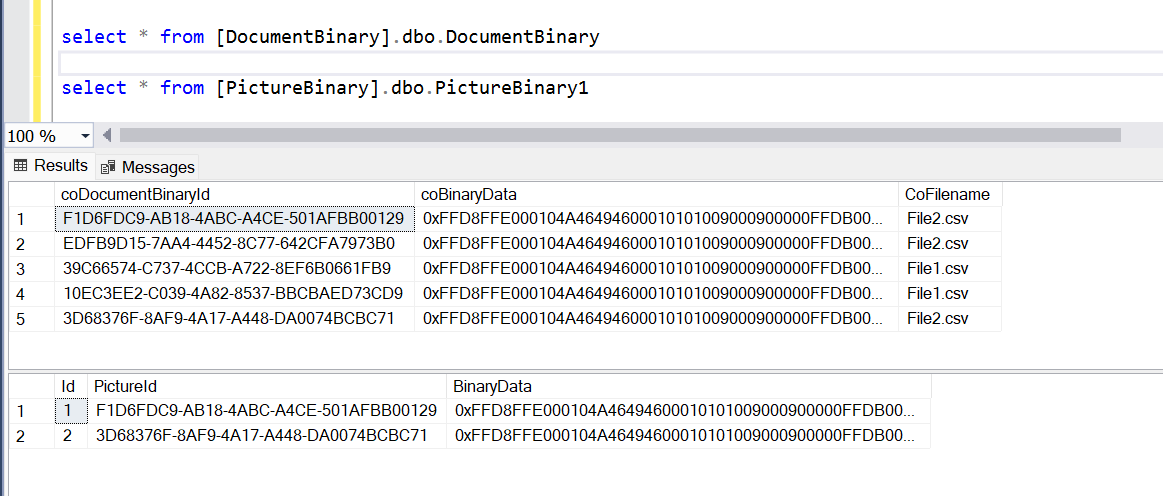

I'm trying to insert into an on-premises SQL database table called PictureBinary:

The source of the binary data is a table in another on-premises SQL database called DocumentBinary:

I have a file with all of the Id's of the DocumentBinary rows that need copying. I feed those into a ForEach activity from a Lookup activity. Each of these files has about 180 rows (there are 50 files fed into a new instance of the pipeline in parallel).

So far everything is working. But then, inside the ForEach I have another Lookup activity that tries to get the binary info to pass into a script that will insert it into the other database.

And then the Script activity would insert the binary data into the table PictureBinary (in the other database).

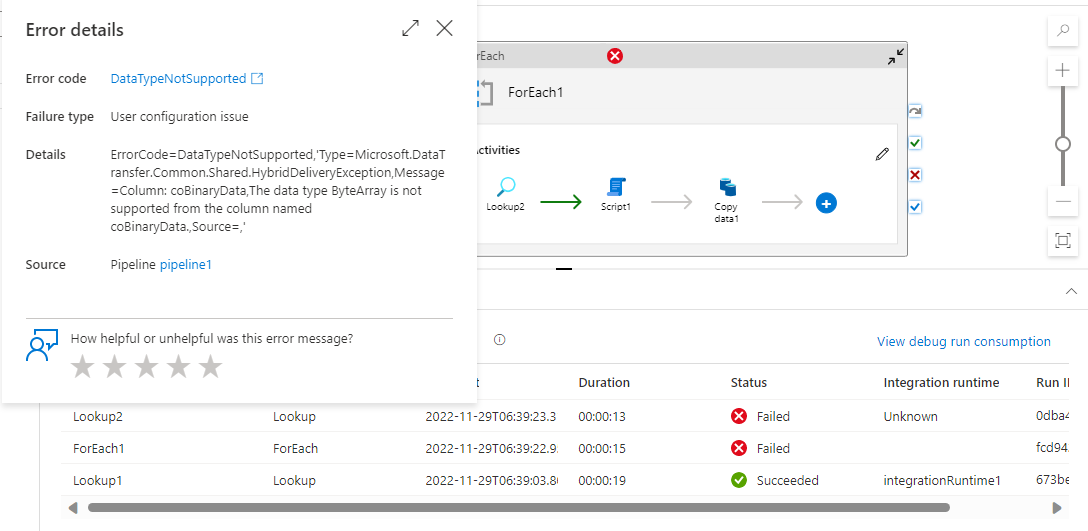

But when I debug the pipeline, I get this error when the binary column Lookup is reached:

ErrorCode=DataTypeNotSupported,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Column: coBinaryData,The data type ByteArray is not supported from the column named coBinaryData.,Source=,'

I know that the accepted way of storing the files would be to store them on the filesystem and just store the file path to the files in the database. But we are using a NOP database that stores the files in varbinary columns.

Also, if there is a better way of doing this, please let me know.

CodePudding user response:

I tried to reproduce your scenario in my environment and got similar error

As per Microsoft document Columns with datatype Byte Array Are not supported in lookup activity is might be the main cause of error.

To workaround this as Follow below steps:

As you explained your case you have a file in which all the Id's of the DocumentBinary rows that need copy in destination are stored. To achieve this, you can simply use Copy activity with the Query where you copy records where the DocumentBinary in column is equal to the Id stored in file

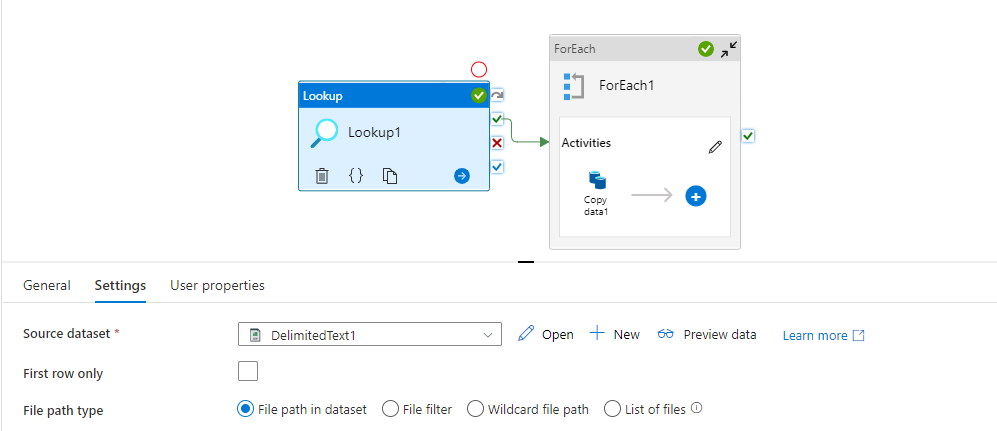

First, I took lookup activity from where I can get Id's of the DocumentBinary rows stored in file

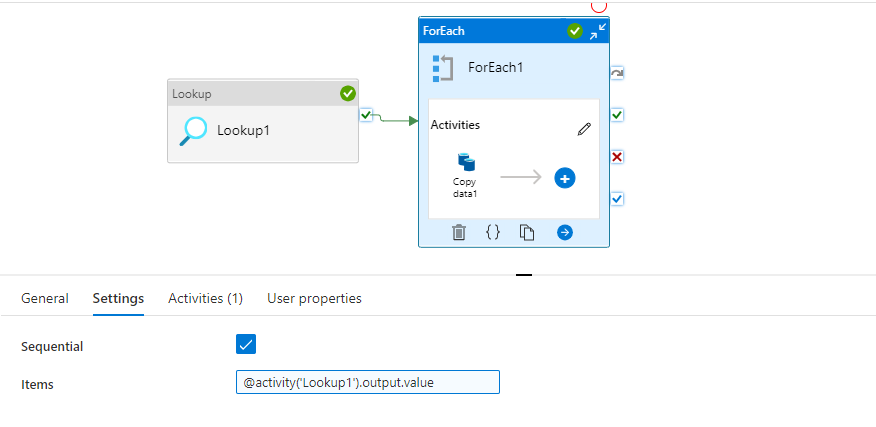

Then I took ForEach I passed the output of lookup activity to ForEach activity.

After this I took Copy activity in forEach activity

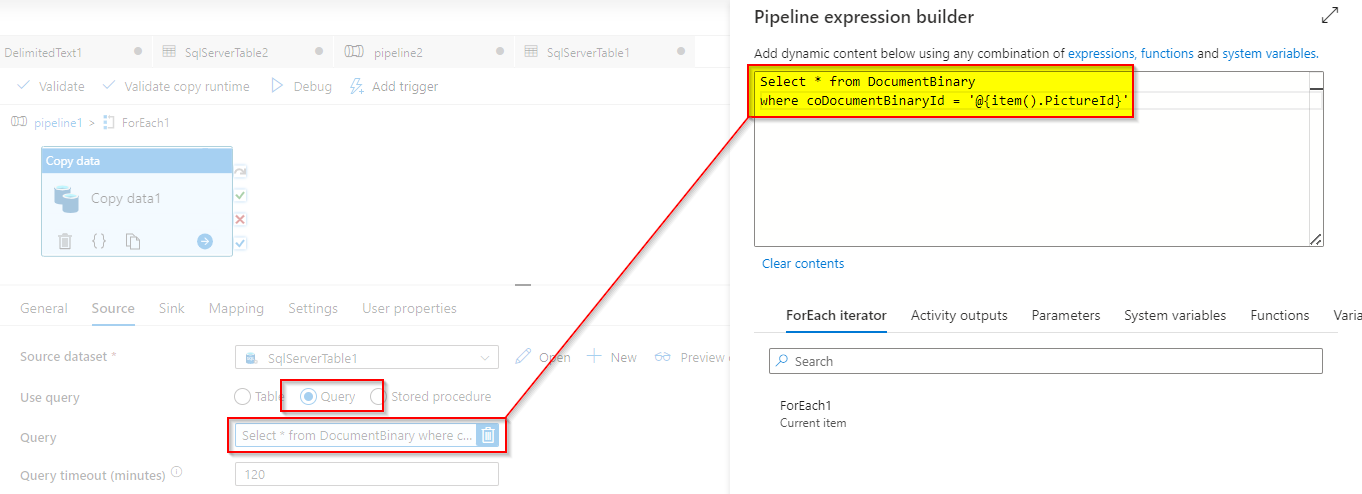

Select * from DocumentBinary

where coDocumentBinaryId = '@{item().PictureId}'

In source of copy activity select Use query as Query and pass above query with your names

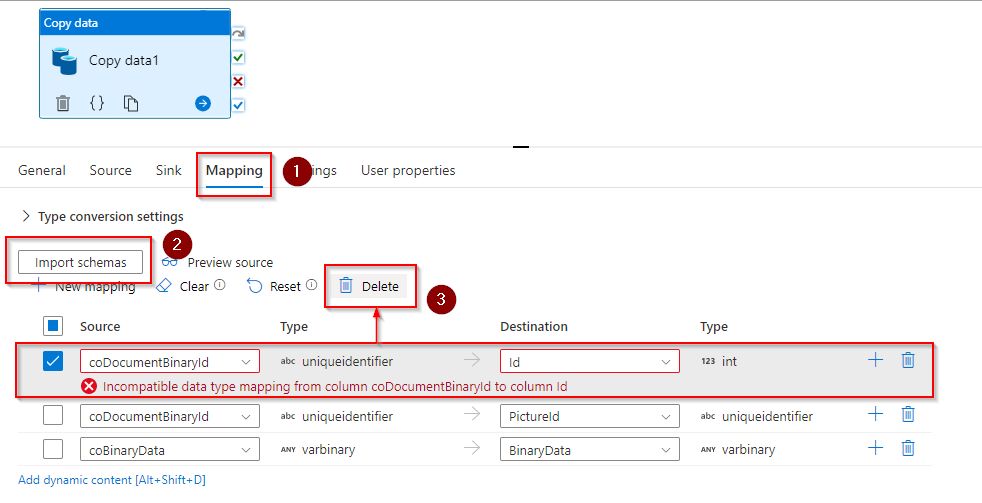

Now go to Mapping Click on Import Schema then delete unwanted columns and map the columns accordingly.

Note: For this, columns in both tables are of similar datatypes either both

uniqueidenntifieror both should beint

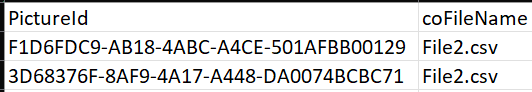

Sample Input in file:

Output (Copied only picture id contend in file from source to destination):