We have one k8s cluster in our project.

We are trying to set up several environments: DEV, TEST, PROD.

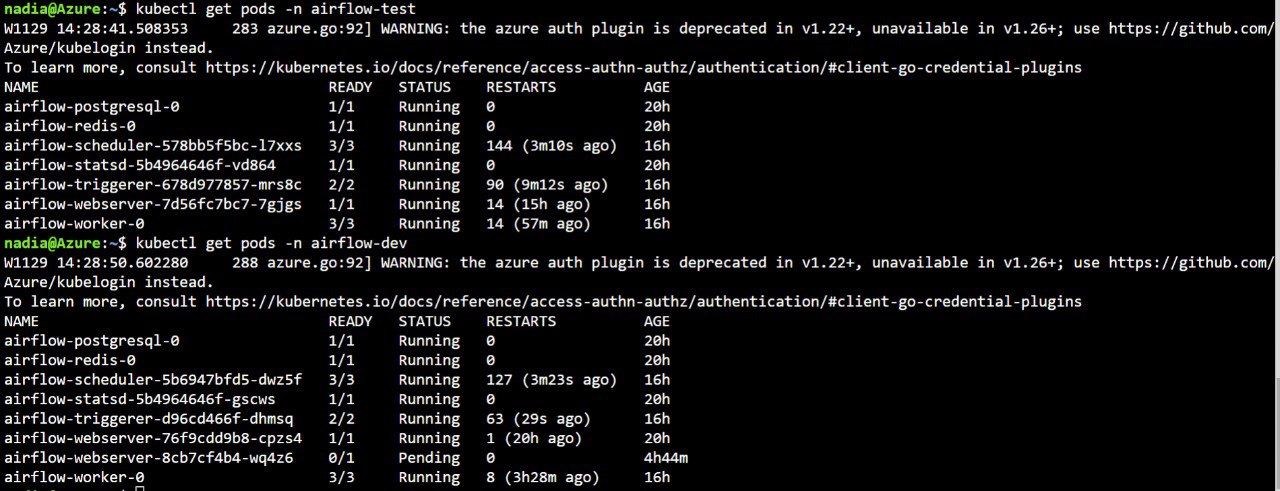

The idea was to install several instances of Airflow in different k8s namespaces. I did a fresh installation of Airflow from the official Helm chart, and then observed very weird behavior of the Airflow scheduler and worker pods. It looks like they conflict with each other: the pods crash and restart very often.

If I delete namespaces so that only one Airflow instance is left in k8s, it works fine.

Has anyone faced a similar issue?

User response:

Check the exit codes of your pods. Running three instances of Airflow can be resource-hungry, so you might be running out of memory (an OOM-killed container typically shows exit code 137).
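If the pods do turn out to be OOM-killed (`kubectl describe pod` would then show `Reason: OOMKilled` under the last state), one option is to give each release explicit requests and limits in its own values.yaml, so the three instances do not compete for node memory unchecked. A minimal sketch, assuming the official chart's `scheduler.resources` and `workers.resources` keys; the file name and all CPU/memory figures are hypothetical placeholders to be sized from your actual usage:

```yaml
# values-dev.yaml (hypothetical name): one values file per environment/release
scheduler:
  resources:
    requests:
      cpu: 500m       # placeholder
      memory: 1Gi     # placeholder
    limits:
      memory: 2Gi     # the container is OOM-killed if it exceeds this
workers:
  resources:
    requests:
      cpu: 500m       # placeholder
      memory: 1Gi     # placeholder
    limits:
      memory: 2Gi     # placeholder
```

Each environment would then be installed with its own file, e.g. `helm upgrade --install airflow-dev apache-airflow/airflow -n dev -f values-dev.yaml` (release name, repo alias and namespace are assumptions).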

User response:

You haven't shared many details about the settings in your Helm chart's values.yaml, e.g. PVs, Postgres, executor and so on. I'm assuming you're using the KubernetesExecutor for your DAGs.
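For reference, these are roughly the parts of values.yaml that would help narrow it down. A sketch of the relevant excerpt, assuming the official chart's `executor`, `postgresql`, `data.metadataConnection` and `logs.persistence` keys and an external Postgres; every host, user and database name below is a hypothetical placeholder. It is worth checking that each environment points at its own metadata database, since releases sharing one database would also share scheduler state:

```yaml
# Excerpt of one environment's values.yaml (placeholder values)
executor: "KubernetesExecutor"

postgresql:
  enabled: false                 # bundled Postgres disabled; external DB used instead

data:
  metadataConnection:
    user: airflow_dev            # hypothetical
    pass: change-me              # hypothetical; prefer a Secret in practice
    protocol: postgresql
    host: postgres.example.internal   # hypothetical host
    port: 5432
    db: airflow_dev              # a separate database per environment

logs:
  persistence:
    enabled: false               # set to true to keep task logs on a PV
```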

Can you disable the deletion of worker pods? That way, the pods created for your DAG runs will stay in the Completed or Failed state, and you can check their logs. Set this environment variable to "False" (with the default, "True", the pods are deleted):

```yaml
- name: "AIRFLOW__KUBERNETES__DELETE_WORKER_PODS"
  value: "False"
```
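The exact variable name depends on the Airflow version: from Airflow 2.5 the `[kubernetes]` config section was renamed to `[kubernetes_executor]`, so on newer versions the equivalent variable is `AIRFLOW__KUBERNETES_EXECUTOR__DELETE_WORKER_PODS`. A sketch of setting it through the official chart's top-level `env` list (an assumption about how you pass environment variables); to keep the pods, the value must be `"False"`:

```yaml
env:
  # Airflow >= 2.5 reads [kubernetes_executor]; older versions read [kubernetes]
  - name: "AIRFLOW__KUBERNETES_EXECUTOR__DELETE_WORKER_PODS"
    value: "False"   # keep completed/failed worker pods so their logs can be inspected
```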

Aside from that, how have you set up the ports for the worker, scheduler and triggerer?
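For comparison, the official chart exposes these as top-level `ports` values. A sketch of the relevant excerpt, with what I believe are the chart defaults (verify against your chart version). Since each release creates its own Services in its own namespace, identical port numbers across namespaces are normally fine unless something like host networking couples the pods to the same node ports:

```yaml
ports:
  airflowUI: 8080       # webserver
  workerLogs: 8793      # log server port on worker pods
  triggererLogs: 8794   # log server port on triggerer pods
```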