I have a field in a table of our db that works like an event-like payload, where all changes to different entities are gathered. See example below for a single field of the object:

'---\nfield_one: 1\nfield_two: 20\nfield_three: 4\nid: 1234\nanother_id: 5678\nsome_text: Hey you\na_date: 2022-11-29\nutc: this_utc\nanother_date: 2022-11-30\nutc: another_utc'

Since accessing this field with pure SQL is a pain, I was thinking of parsing it as a JSON so that it would look like this:

{

"field_one":"1",

"field_two": "20",

"field_three": "4",

"id": "1234",

"another_id": "5678",

"some_text": "Hey you",

"a_date": "2022-11-29",

"utc": "2022-11-29 15:29:28.159296000 Z",

"another_date": "2022-11-30",

"utc": "2022-11-30 13:34:59.000000000 Z"

}

And then just use a Snowflake-native approach to access the values I need.

As you can see, though, there are two fields that are called utc, since one is referring to the first date (a_date), and the second one is referring to the second date (another_date). I believe these are nested in the object, but it's difficult to assess with the format of the field.

This is a problem since I can't differentiate between one utc and another when giving the string the format I need and running a parse_json() function (due to both keys using the same name).

My SQL so far looks like the following:

select

object,

replace(object, '---\n', '{"') || '"}' as first,

replace(first, '\n', '","') as second_,

replace(second_, ': ', '":"') as third,

replace(third, ' ', '') as fourth,

replace(fourth, ' ', '') as last

from my_table

(Steps third and fourth are needed because I have some fields that have extra spaces in them)

And this actually gives me the format I need, but due to what I mentioned around the utc keys, I cannot parse the string as a JSON.

Also note that the structure of the string might change from row to row, meaning that some rows might gather two utc keys, while others might have one, and others even five.

Any ideas on how to overcome that?

CodePudding user response:

Replace only one occurrence with regexp_replace():

with data as (

select '---\nfield_one: 1\nfield_two: 20\nfield_three: 4\nid: 1234\nanother_id: 5678\nsome_text: Hey you\na_date: 2022-11-29\nutc: this_utc\nanother_date: 2022-11-30\nutc: another_utc' o

)

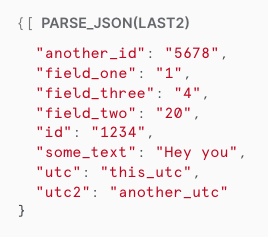

select parse_json(last2)

from (

select o,

replace(o, '---\n', '{"') || '"}' as first,

replace(first, '\n', '","') as second_,

replace(second_, ': ', '":"') as third,

replace(third, ' ', '') as fourth,

replace(fourth, ' ', '') as last,

regexp_replace(last, '"utc"', '"utc2"', 1, 2) last2

from data

)

;

CodePudding user response:

If this string is positional, you can use the split function to turn it into an array and reference the positions by number. For example:

create or replace table T1 (s string);

insert into T1 select '---\nfield_one: 1\nfield_two: 20\nfield_three: 4\nid: 1234\nanother_id: 5678\nsome_text: Hey you\na_date: 2022-11-29\nutc: this_utc\nanother_date: 2022-11-30\nutc: another_utc';

with X as

(

select split(s, '\n') ARR from T1

)

select ARR[1]::string as FIRST

,trim(split_part(ARR[1], ':', 1)) FIRST_NAME

,trim(split_part(ARR[1], ':', 2)) FIRST_VALUE

,ARR[2]::string as SECOND

,trim(split_part(ARR[2], ':', 1)) SECOND_NAME

,trim(split_part(ARR[2], ':', 2)) SECOND_VALUE

,ARR[3]::string as THIRD

,trim(split_part(ARR[3], ':', 1)) THIRD_NAME

,trim(split_part(ARR[3], ':', 2)) THIRD_VALUE

from X

;

| FIRST | FIRST_NAME | FIRST_VALUE | SECOND | SECOND_NAME | SECOND_VALUE | THIRD | THIRD_NAME | THIRD_VALUE |

|---|---|---|---|---|---|---|---|---|

| field_one: 1 | field_one | 1 | field_two: 20 | field_two | 20 | field_three: 4 | field_three | 4 |