I have a dataset of over 1000 txt files, each of which contains information about a book:

The Project Gutenberg EBook of Apocolocyntosis, by Lucius Seneca

This eBook is for the use of anyone anywhere at no cost and with

almost no restrictions whatsoever. You may copy it, give it away or

re-use it under the terms of the Project Gutenberg License included

with this eBook or online at www.gutenberg.org

Title: Apocolocyntosis

Author: Lucius Seneca

Release Date: November 10, 2003 [EBook #10001]

[Date last updated: April 9, 2005]

Language: English

Character set encoding: ASCII

*** START OF THIS PROJECT GUTENBERG EBOOK APOCOLOCYNTOSIS ***

I'm trying to use pandas to read these files and build a data frame with Title, Author, Release Date, and Language as columns, but so far I keep getting errors.

Reading from a single file

df = pd.read_csv('dataset/10001.txt')

Error

ParserError Traceback (most recent call last)

Input In [30], in <cell line: 1>()

----> 1 df = pd.read_csv('dataset/10001.txt')

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\util\_decorators.py:311, in deprecate_nonkeyword_arguments.<locals>.decorate.<locals>.wrapper(*args, **kwargs)

305 if len(args) > num_allow_args:

306 warnings.warn(

307 msg.format(arguments=arguments),

308 FutureWarning,

309 stacklevel=stacklevel,

310 )

--> 311 return func(*args, **kwargs)

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\io\parsers\readers.py:680, in read_csv(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, encoding_errors, dialect, error_bad_lines, warn_bad_lines, on_bad_lines, delim_whitespace, low_memory, memory_map, float_precision, storage_options)

665 kwds_defaults = _refine_defaults_read(

666 dialect,

667 delimiter,

(...)

676 defaults={"delimiter": ","},

677 )

678 kwds.update(kwds_defaults)

--> 680 return _read(filepath_or_buffer, kwds)

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\io\parsers\readers.py:581, in _read(filepath_or_buffer, kwds)

578 return parser

580 with parser:

--> 581 return parser.read(nrows)

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\io\parsers\readers.py:1254, in TextFileReader.read(self, nrows)

1252 nrows = validate_integer("nrows", nrows)

1253 try:

-> 1254 index, columns, col_dict = self._engine.read(nrows)

1255 except Exception:

1256 self.close()

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\io\parsers\c_parser_wrapper.py:225, in CParserWrapper.read(self, nrows)

223 try:

224 if self.low_memory:

--> 225 chunks = self._reader.read_low_memory(nrows)

226 # destructive to chunks

227 data = _concatenate_chunks(chunks)

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\_libs\parsers.pyx:805, in pandas._libs.parsers.TextReader.read_low_memory()

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\_libs\parsers.pyx:861, in pandas._libs.parsers.TextReader._read_rows()

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\_libs\parsers.pyx:847, in pandas._libs.parsers.TextReader._tokenize_rows()

File ~\AppData\Local\Packages\PythonSoftwareFoundation.Python.3.9_qbz5n2kfra8p0\LocalCache\local-packages\Python39\site-packages\pandas\_libs\parsers.pyx:1960, in pandas._libs.parsers.raise_parser_error()

ParserError: Error tokenizing data. C error: Expected 2 fields in line 60, saw 3

Answer:
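
On the error itself: read_csv splits on commas by default (the traceback shows defaults={"delimiter": ","}), so it appears to take the column count from the first line, which has one comma and therefore 2 fields, and then fails on a later line that has more. Here is a minimal sketch of that effect, using the standard csv module as a stand-in for the pandas parser. The third sample line is made up for illustration and is not the real line 60 of the file.

```python
import csv
import io

# Hypothetical stand-in for the start of a book file (not the real line 60).
sample = (
    "The Project Gutenberg EBook of Apocolocyntosis, by Lucius Seneca\n"
    "This eBook is for the use of anyone anywhere at no cost and with\n"
    "A made-up sentence, with two commas, in it\n"
)

# Count the comma-separated fields on each line, as a CSV reader sees them.
field_counts = [len(row) for row in csv.reader(io.StringIO(sample))]
print(field_counts)  # [2, 1, 3]
```

The first line sets the expectation of 2 fields and the last line has 3, which is the same mismatch the ParserError reports. Free text like this is not tabular, so a regex-based extraction is a better fit than read_csv.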

The following code shows how you can tackle the data extraction for one file.

Provided all the files share this format, it should be fairly efficient.

- re.compile builds the regex used to find each item of interest.

- release_date needed some extra manipulation because of the extra text on that line.

- You could add a for-loop to work through all 1000+ books.

Code:

import re

import pandas as pd

with open('dataset/10001.txt', 'r') as text_file:

    text = text_file.read()

# These can be reused for each book

title = re.compile(r'Title: (.*)\n')

author = re.compile(r'Author: (.*)\n')

release_date = re.compile(r'Release Date: (.*)\s')

book_title = title.search(text).group(1)

book_author = author.search(text).group(1)

book_release = release_date.search(text).group(1).split(' [')[0]

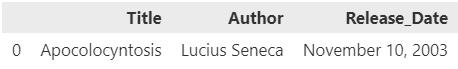

df = pd.DataFrame({"Title": [book_title], "Author": [book_author], "Release_Date": [book_release]})

print(df)
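
To extend this to the whole dataset and also pick up Language, which the question asked for, something like the following could work. It is a sketch under assumptions, not a tested solution for every Gutenberg file: the names FIELD_PATTERNS, extract_metadata and collect_metadata are hypothetical, the files are assumed to sit in one folder with a .txt extension, and decoding as UTF-8 with errors="replace" is a guess, since the sample declares ASCII but other books may differ.

```python
import re
from pathlib import Path

# One pattern per column; MULTILINE lets ^ and $ match at every line.
# [ \t]* (not \s*) keeps an empty field from spilling onto the next line.
FIELD_PATTERNS = {
    "Title": re.compile(r"^Title:[ \t]*(.*)$", re.MULTILINE),
    "Author": re.compile(r"^Author:[ \t]*(.*)$", re.MULTILINE),
    "Release_Date": re.compile(r"^Release Date:[ \t]*(.*)$", re.MULTILINE),
    "Language": re.compile(r"^Language:[ \t]*(.*)$", re.MULTILINE),
}


def extract_metadata(text):
    """Return one dict per book; a field that is not found maps to None."""
    record = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(text)
        value = match.group(1).strip() if match else None
        if value and name == "Release_Date":
            # Drop the trailing "[EBook #...]" tag on the release line.
            value = value.split(" [")[0]
        record[name] = value
    return record


def collect_metadata(folder):
    """Read every .txt file in folder and return a list of records."""
    records = []
    for path in sorted(Path(folder).glob("*.txt")):
        # Assumption: undecodable bytes are replaced rather than raising.
        text = path.read_text(encoding="utf-8", errors="replace")
        records.append(extract_metadata(text))
    return records


# Usage (assumes pandas is installed and the files live in 'dataset/'):
#   import pandas as pd
#   df = pd.DataFrame(collect_metadata("dataset"))
```

Returning None for a missing field, instead of calling .group(1) on a failed search, means one book with an unusual header does not stop the whole run; those rows simply show up with empty cells in the data frame.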

Sample input (data.txt), the same header shown in the question:

The Project Gutenberg EBook of Apocolocyntosis, by Lucius Seneca

This eBook is for the use of anyone anywhere at no cost and with

almost no restrictions whatsoever. You may copy it, give it away or

re-use it under the terms of the Project Gutenberg License included

with this eBook or online at www.gutenberg.org

Title: Apocolocyntosis

Author: Lucius Seneca

Release Date: November 10, 2003 [EBook #10001]

[Date last updated: April 9, 2005]

Language: English

Character set encoding: ASCII

*** START OF THIS PROJECT GUTENBERG EBOOK APOCOLOCYNTOSIS ***