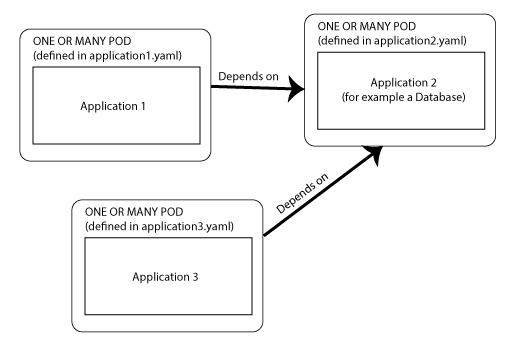

I'm deploying an API application with k3s, and I would like to know whether it is possible to define, in the Kubernetes manifest of one application, a dependency on another application (potentially already running with its own manifest).

If the dependency isn't running when the dependent application is launched, the dependency should be started through its own manifest. I've attached a diagram of the setup.

Thank you in advance for your answers.

Answer:

You can manage the dependencies by using initContainers in Application 1 and Application 3, and a readinessProbe and a livenessProbe in Application 2.

The init containers in Applications 1 and 3 check whether Application 2 is up, and the readiness and liveness probes in Application 2 make sure it is fully up and ready to serve before it receives traffic.

Init Container: Init containers run to completion, one after another, before the app containers in a Pod start. They solve challenges associated with first-run initialization of applications. It's common for services to depend on the successful completion of a setup script before they can fully start up.

Liveness probe: This probe determines whether the container is still healthy; if it keeps failing, the kubelet restarts the container. For example, it can detect deadlocks, slow responses, and similar situations.

Readiness probe: This probe determines whether the container is ready to serve requests; while it fails, the Pod is removed from the endpoints of any Service that selects it. Readiness probes cannot be used in init containers. If the Pod restarts, all of its init containers run again.

Reference: https://www.alibabacloud.com/blog/kubernetes-demystified-solving-service-dependencies_594110

Answer:

This is a somewhat opinionated question, but I think it's best if your application is able to start even if the database is not available.

You can indicate with a readiness probe that your apps are not ready, so they won't get any traffic (through a service with default config) unless they can reach the database.

This will avoid problems with specific startup order of things, which can become difficult to manage as your stack grows.

Consider this pod as a minimal example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: myapp
      image: myapp
      readinessProbe:
        httpGet:
          path: /readyz
          port: 8080
```

Then, in your application, implement a check for whether the DB is available; if it isn't, respond with something like 503 Service Unavailable to indicate that this Pod is not ready. For example:

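```go
package main

import (
	"database/sql"
	"log"
	"net/http"
	"os"

	_ "github.com/lib/pq" // registers the "postgres" driver
)

func main() {
	// sql.Open does not necessarily connect yet, so the app can start
	// even while the database is down.
	db, err := sql.Open("postgres", os.Getenv("PG_DSN"))
	if err != nil {
		panic(err)
	}

	// Readiness endpoint: 503 while the DB is unreachable, 200 otherwise.
	http.HandleFunc("/readyz", func(w http.ResponseWriter, r *http.Request) {
		if err := db.PingContext(r.Context()); err != nil {
			log.Println("db ping failed:", err)
			w.WriteHeader(http.StatusServiceUnavailable)
			return
		}
		w.WriteHeader(http.StatusOK)
	})

	log.Fatal(http.ListenAndServe(":8080", nil))
}
```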

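Additionally or alternatively, you can move this check to a startup probe, which runs when the container starts and keeps probing until it succeeds once. While it runs, the liveness and readiness probes are disabled; afterwards they take over (if configured). If the startup probe keeps failing, the kubelet kills the container and restarts it according to the Pod's restart policy. That helps you notice situations where you provided a wrong configuration and would never be able to connect, even though the database is running: the container ends up restarting instead of silently staying not ready.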

For example, you could have the container restarted if the probe hasn't succeeded after about 6 minutes (failureThreshold × periodSeconds = 36 × 10 s = 360 s):

```yaml
spec:
  containers:
    - name: myapp
      startupProbe:
        failureThreshold: 36
        periodSeconds: 10
        successThreshold: 1
        httpGet:
          path: /readyz
          port: 8080
```

Of course, you can also combine different types of probes. See: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/