I have some buckets in Amazon S3 into which new objects of different types (JSON, JPG, MP4, SQL files, PDF, log, and so on) are ingested every second.

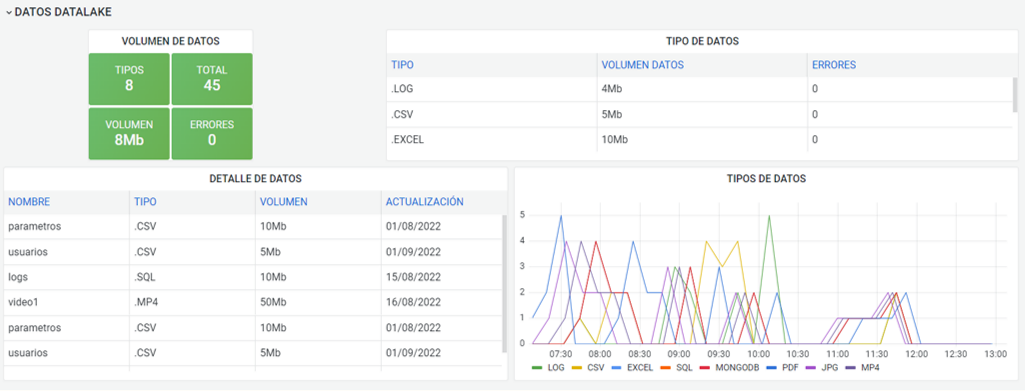

I want a dashboard like the attached picture in, say, QuickSight or Grafana, showing the latest status of my S3 buckets, including the total number and volume of objects of each type and a historical trend.

I can obtain the number and volume of objects with Glue crawlers, but I couldn't find an effective solution that can extract every object type.

Glue crawlers don't recognize all types (for example, JPG), and other solutions, such as using the AWS CLI or a Python script to iterate over the whole of S3 each time to generate a report of all objects, are not cost- or time-effective.

I tried to use S3 object tags, but I didn't know how to extract the tags, expose them as columns, and query against them in, for example, Athena.

So the question is: what is an effective way to obtain information about all objects, especially the extension/type, to display in QuickSight?

CodePudding user response:

Use an event-driven approach. Subscribe an SQS queue to the bucket's S3 event notifications for object creation and deletion (the `s3:ObjectCreated:*` and `s3:ObjectRemoved:*` event types). Then create a process that consumes the SQS messages and updates a running tally per file extension (say, in a DynamoDB table or a relational DB hooked up to QuickSight). This queue consumer could be a Lambda function triggered by the queue, or just a simple application on any compute platform such as EC2 or ECS scheduled tasks.
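As a rough illustration of the consumer, here is a minimal sketch in Python. It assumes the queue receives S3 notifications directly (not via SNS) and that the function is handed an SQS batch in the shape Lambda delivers it. The names `extension_of` and `tally_deltas` are hypothetical, and writing the deltas to DynamoDB or a relational DB is left out.

```python
import json
import os
from collections import Counter
from urllib.parse import unquote_plus


def extension_of(key):
    """Return the lowercased extension of an S3 key, or '(none)' if it has none."""
    filename = key.rsplit("/", 1)[-1]
    ext = os.path.splitext(filename)[1]
    return ext[1:].lower() if ext else "(none)"


def tally_deltas(sqs_event):
    """Turn one SQS batch of S3 notifications into per-extension deltas.

    Returns (count_delta, added_bytes), both keyed by extension.
    """
    count_delta, added_bytes = Counter(), Counter()
    for message in sqs_event.get("Records", []):
        body = json.loads(message["body"])
        # The initial s3:TestEvent message has no "Records" key, so skip it.
        for rec in body.get("Records", []):
            # Object keys arrive URL-encoded in the notification.
            key = unquote_plus(rec["s3"]["object"]["key"])
            ext = extension_of(key)
            name = rec["eventName"]
            if name.startswith("ObjectCreated:"):
                count_delta[ext] += 1
                added_bytes[ext] += rec["s3"]["object"].get("size", 0)
            elif name.startswith("ObjectRemoved:"):
                # Assumption: delete notifications carry no size, so volume
                # removed must be looked up elsewhere (e.g. a per-key table).
                count_delta[ext] -= 1
    return count_delta, added_bytes
```

Note that overwriting an existing key also produces a creation event, so a per-key record would be needed if exact counts matter.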

This way, you don't have to repeatedly scan all objects to get the counts. You only have to periodically consume the SQS queue messages to update your counts for all the new object additions/deletions.

You only have to process the queue as frequently as your report freshness requires, up to the SQS maximum message retention period of 14 days (the default retention is 4 days, so raise it if you plan to wait longer). Each run should be short, since it only handles the delta of events since the last run. So you could process in near real time if you wanted, or as rarely as every 14 days. The event messages contain a timestamp, so data resolution can still be maintained even if you only process the queue periodically.
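To show how the timestamp preserves resolution for the historical trend, here is a small sketch that buckets already-unwrapped S3 event records by day using their `eventTime` field (an ISO-8601 UTC string). The function name `daily_deltas` and the daily granularity are assumptions for illustration only.

```python
import os
from collections import defaultdict
from urllib.parse import unquote_plus


def daily_deltas(s3_records):
    """Group object-count deltas by (day, extension) using each record's eventTime."""
    deltas = defaultdict(int)
    for rec in s3_records:
        # eventTime looks like "2024-03-01T23:59:59.000Z"; the first 10
        # characters are the UTC date.
        day = rec["eventTime"][:10]
        key = unquote_plus(rec["s3"]["object"]["key"])
        ext = os.path.splitext(key.rsplit("/", 1)[-1])[1]
        ext = ext[1:].lower() if ext else "(none)"
        if rec["eventName"].startswith("ObjectCreated:"):
            deltas[(day, ext)] += 1
        elif rec["eventName"].startswith("ObjectRemoved:"):
            deltas[(day, ext)] -= 1
    return dict(deltas)
```

Even if the queue is drained only once a week, each record lands in the day it actually occurred, so the stored series keeps daily granularity.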