I am trying to run a DAG that downloads a .parquet file and copies it into a GCP storage bucket.

The upload task fails due to the following error:

google.auth.exceptions.DefaultCredentialsError: File /.google/credentials/google_credentials.json was not found.

For some context:

- I am running Airflow on Docker (

and to the best of my knowledge the volume mounting and env settings are correct.

Yet Airflow could not find the file.

I tried a few solutions, none of which worked:

- Changed the volume mount to explicitly specify the path and the JSON file name

- ran

export GOOGLE_APPLICATION_CREDENTIALS=<path/filename.json>andgcloud auth activate-service-account --key-file $GOOGLE_APPLICATION_CREDENTIALS(looks like that this command dosen't override the docker-compose settings as I create the task that check the GOOGLE_APPLICATION_CREDENTIALS variable and it still /.google/credentials/google_credentials.json) - Tried to find

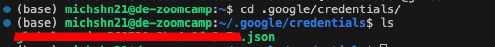

/.google/credentialsdirectory on Airflow worker but couldn't - Tried to bypass that by setting a Airflow GCP connection (using this guide) on the UI but keep getting bad request error
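A check like the one described in the list above can be sketched as follows. This is an illustrative sketch, not the original task: the function name `describe_credentials` and the shape of the returned dictionary are assumptions. It reports where `GOOGLE_APPLICATION_CREDENTIALS` points and whether the file and its parent directory exist in the environment where the code runs.

```python
import os
from pathlib import Path


def describe_credentials(env=None):
    """Report where GOOGLE_APPLICATION_CREDENTIALS points and whether it exists.

    `env` defaults to the current process environment; passing a plain dict
    makes the check easy to exercise outside a container.
    """
    env = os.environ if env is None else env
    path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        # Variable is unset or empty: nothing to look up on disk.
        return {"path": None, "exists": False, "parent_exists": False}
    target = Path(path)
    return {
        "path": path,
        # True only if the path resolves to a regular file.
        "exists": target.is_file(),
        # Distinguishes a missing mount from a wrong file name.
        "parent_exists": target.parent.is_dir(),
    }


if __name__ == "__main__":
    print(describe_credentials())
```

Run as a task on the worker, a result with `parent_exists` set to `False` would suggest the volume is not mounted in that container at all, while `parent_exists` set to `True` and `exists` set to `False` would point to a file name mismatch.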

What am I missing here?

CodePudding user response:

As a first step, try to rename the file in the container by replacing the mount path with

`~/.google/credentials/<filename.json>:/.google/credentials/google_credentials.json:ro`

Then create an Airflow connection by adding the following env var (replace `<your google project name>` with the project name):

`export AIRFLOW_CONN_GOOGLE_CLOUD_DEFAULT='google-cloud-platform://?key_path=/.google/credentials/google_credentials.json&scope=https://www.googleapis.com/auth/cloud-platform&project=<your google project name>&num_retries=5'`
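If the hand-written URI gets rejected, one thing worth ruling out is URL encoding of the query values, since the key path and the scope both contain `/` and `:`. The sketch below builds the same connection URI with the standard library so every value is percent-encoded. The helper name `build_gcp_conn_uri` and the project name `my-project` are placeholders, and the parameter names are simply the ones used in the env var above; whether your Airflow version expects exactly these names is an assumption to verify.

```python
from urllib.parse import urlencode

# Scope value taken from the env var shown above.
DEFAULT_SCOPE = "https://www.googleapis.com/auth/cloud-platform"


def build_gcp_conn_uri(key_path, project, scope=DEFAULT_SCOPE, num_retries=5):
    """Build a google-cloud-platform connection URI with encoded query values."""
    # urlencode percent-encodes reserved characters such as "/" and ":".
    query = urlencode(
        {
            "key_path": key_path,
            "scope": scope,
            "project": project,
            "num_retries": num_retries,
        }
    )
    return f"google-cloud-platform://?{query}"


if __name__ == "__main__":
    # "my-project" is a placeholder for the real project name.
    print(build_gcp_conn_uri("/.google/credentials/google_credentials.json", "my-project"))
```

The printed string can then be used as the value of `AIRFLOW_CONN_GOOGLE_CLOUD_DEFAULT` in the docker-compose environment section instead of the unencoded form.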