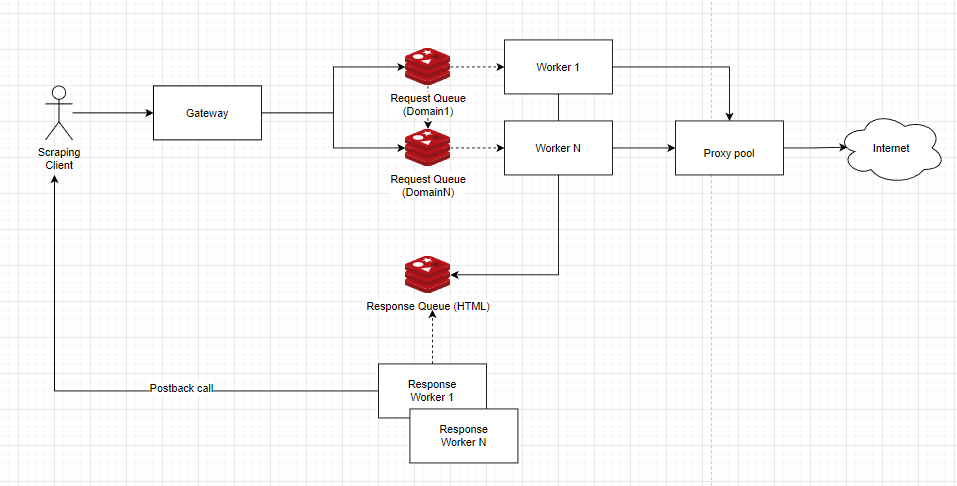

Suppose I want to implement this architecture, deployed on a Kubernetes cluster:

Gateway - Simple RESTful HTTP microservice accepting scraping tasks (URLs to scrape along with postback URLs).

Request Queues - Redis (or other message broker) queues created dynamically per unique domain. When a new domain is encountered, the gateway should programmatically create a new queue; if a queue for that domain already exists, it just places the message in it.

Response Queue - Redis (or other message broker) queue used to post Worker results as scraped HTML pages along with postback URLs.

Workers - worker processes which should spin-up at runtime when new queue is created and scale-down to zero when queue is emptied.

Response Workers - worker processes consuming the response queue and sending postback results to the scraping client (should also be able to scale down to zero).
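As a rough sketch of the per-domain routing the Gateway would do, assuming plain Redis lists are used as queues: Redis creates a list on the first push and deletes it once it is empty, so "create the queue if it doesn't exist" needs no separate step. The `QueueClient` interface, the `queue:<domain>` key format, and the in-memory stand-in below are all made up for illustration; a real client such as node-redis or ioredis exposes an equivalent list-push command under its own method name.

```typescript
// Hypothetical minimal client interface (not a real library's API).
interface QueueClient {
  rpush(key: string, value: string): Promise<number>;
}

interface ScrapeTask {
  url: string;
  postbackUrl: string;
}

// Derive the per-domain queue key from the URL to scrape.
// The "queue:" prefix is an assumed naming convention.
function queueKeyFor(taskUrl: string): string {
  const host = new URL(taskUrl).hostname.toLowerCase();
  return `queue:${host}`;
}

// Push the task onto its domain queue. With Redis lists the first push
// implicitly creates the list, so new and existing domains look the same.
async function enqueue(client: QueueClient, task: ScrapeTask): Promise<string> {
  const key = queueKeyFor(task.url);
  await client.rpush(key, JSON.stringify(task));
  return key;
}

// In-memory stand-in so the sketch runs without a Redis server.
class InMemoryClient implements QueueClient {
  readonly lists = new Map<string, string[]>();

  async rpush(key: string, value: string): Promise<number> {
    const list = this.lists.get(key) ?? [];
    list.push(value);
    this.lists.set(key, list);
    return list.length;
  }
}
```

Two tasks for the same host end up in the same list, and a task for a new host simply starts a new one. Anything that has to react to a "new queue" would still need to be told about it separately, for example by the Gateway itself.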

I would like to deploy the whole solution as Docker containers on a Kubernetes cluster.

So my main concerns/questions would be:

Creating Redis or other message broker queues dynamically at runtime via code. Is it viable? Which broker is best for that purpose? I would prefer Redis if possible, since I've heard it's the easiest to set up and it supports massive throughput. Ideally my scraping tasks should be short-lived, so I think Redis would be okay.

Creating Worker consumers at runtime via code - I need some kind of Kubernetes-compatible technology that can react to a newly created queue and spin up a Worker consumer container to listen to that queue, and later scale up/down based on the load of that queue. Any suggestions for such technology? I've read a bit about Knative and its Eventing mechanism. Would it be suited for this use case? I don't know if I should continue investing my time in reading its documentation.

Best tools for Redis queue management/Worker management: I would prefer C# and Node.js tooling. Would something like Bull for Node.js be sufficient? Ideally I would want to produce queues and messages in the Gateway using C# and consume them in Node.js (Workers).

CodePudding user response:

If you mean vertical scaling, it definitely won't be a viable solution, since it requires pod restarts. Horizontal scaling is more viable, but keep in mind that spinning up nodes or pods takes some time. It is always advisable to have enough resources in place to serve your incoming traffic; otherwise this delay will affect some features of your application and there might be a business impact. Autoscalers alone aren't enough; you should also have proper metrics in place for monitoring your application.

This documentation details how to scale your Redis and worker pods respectively using the KEDA mechanism. KEDA stands for Kubernetes Event-driven Autoscaling. It is a plugin that sits on top of existing Kubernetes primitives (such as the Horizontal Pod Autoscaler) to scale any number of Kubernetes containers based on the number of events that need to be processed.
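To give an idea of what that could look like for one queue per domain, here is a minimal sketch that builds a KEDA `ScaledObject` manifest using KEDA's Redis list scaler. The `scraper-worker-` naming, the Redis address, the `queue:<domain>` list name, and the thresholds are assumptions for illustration only. KEDA only scales an existing workload, so a Deployment with the same name is assumed to already exist for each domain.

```typescript
// Builds a KEDA ScaledObject manifest (as a plain object) for one domain queue.
// Assumed, not from the question: the "scraper-worker-" prefix, the default
// Redis address, the "queue:<domain>" list name, and the replica limits.
function scaledObjectFor(
  domain: string,
  redisAddress: string = "redis.default.svc.cluster.local:6379",
) {
  // Replace anything outside [a-z0-9-] so the resource name is a simple label.
  const safeName = domain.toLowerCase().replace(/[^a-z0-9-]/g, "-");
  const workloadName = `scraper-worker-${safeName}`;

  return {
    apiVersion: "keda.sh/v1alpha1",
    kind: "ScaledObject",
    metadata: { name: workloadName },
    spec: {
      // The Deployment KEDA should scale; it must be created separately.
      scaleTargetRef: { name: workloadName },
      minReplicaCount: 0, // scale to zero when the list is empty
      maxReplicaCount: 10,
      triggers: [
        {
          type: "redis",
          metadata: {
            address: redisAddress,
            listName: `queue:${domain}`,
            listLength: "5", // target number of pending items per replica
          },
        },
      ],
    },
  };
}

// Example: print the manifest as JSON, which kubectl also accepts.
console.log(JSON.stringify(scaledObjectFor("example.com"), null, 2));
```

Something still has to create the Deployment and apply this manifest when a new domain shows up (the gateway or a small controller, for instance), since KEDA itself does not react to new queues appearing.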