We are attempting to import data from Netsuite into Azure Synapse Analytics via SuiteAnalytics Connect (ODBC) - this works on all but one table (transactionLine) where the connection always times out at 2 hours

From speaking to Oracle it appears the the two hour limit is fixed and cannot be increased.

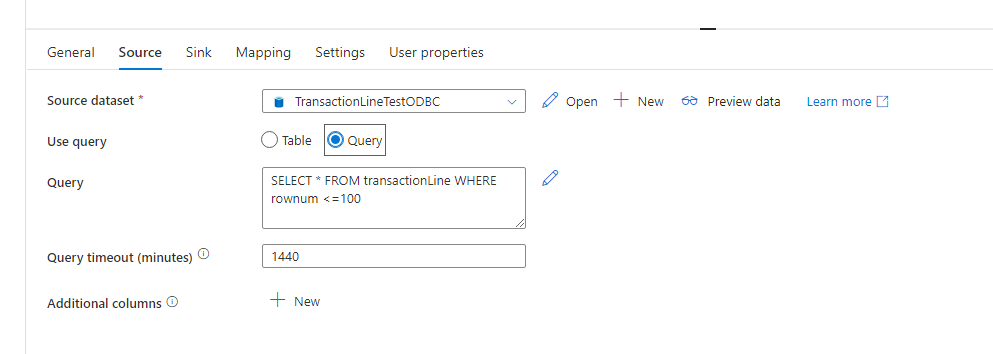

I have a Copy Data activity set up in a pipeline, when I set "Use Query" to "Table" it times out but when I set it to "Query" and limit it to a small number of rows (e.g. 100) it works fine (see screenshot)

Is there a built in way to import this data in batches, say 1000 rows at a time, without creating dozens of separate Copy Data activities?

CodePudding user response:

For your pipeline to work when the large no of records or to create a Batches to import data you can use the following approach.

- If there are large no of records and you want to batch them in 1000 rows first use the lookup activity and the get the count of all records and store it in the set variable with below dynamic expression or if you know the number you and directly add it in set variable activity.

#to get ftom lookup

@string(activity('Lookup1').output.count)

#to add manually

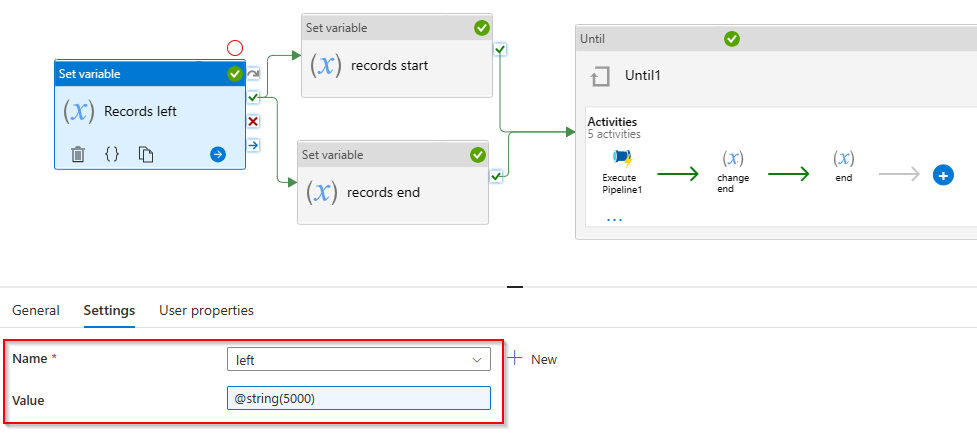

@string(5000)

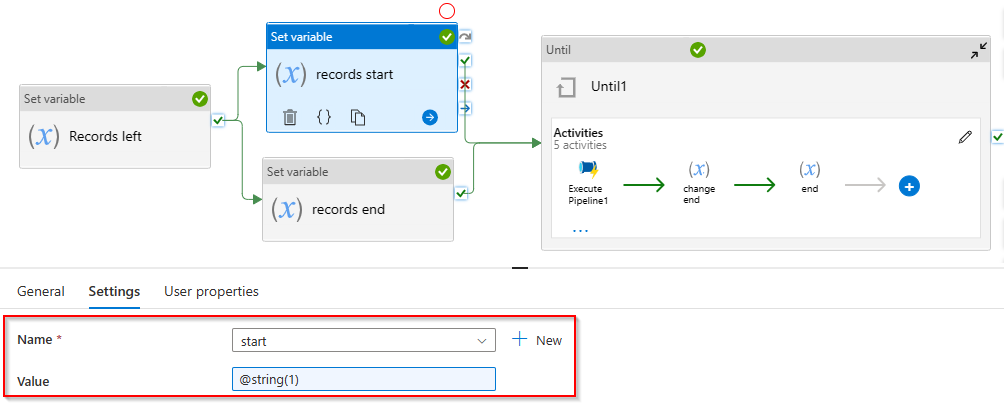

- I took another variable to set the starting of records processed as

start.

@string(1)

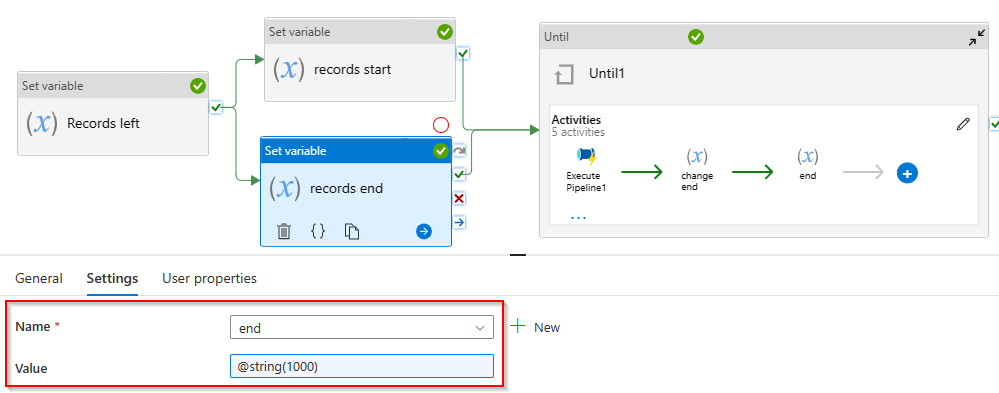

- I took another variable to set the ending of records processed as

end.

@string(1000)

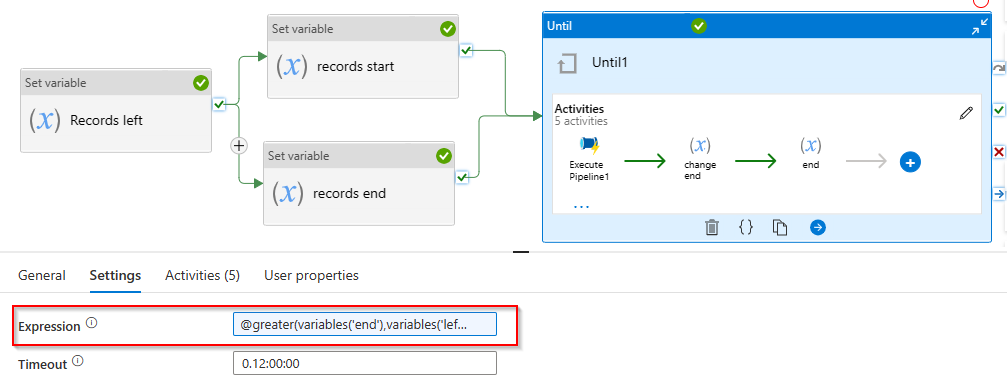

- Now use

untilactivity to process until theendvalue is greater thanleft.

@greater(variables('end'),variables('left'))

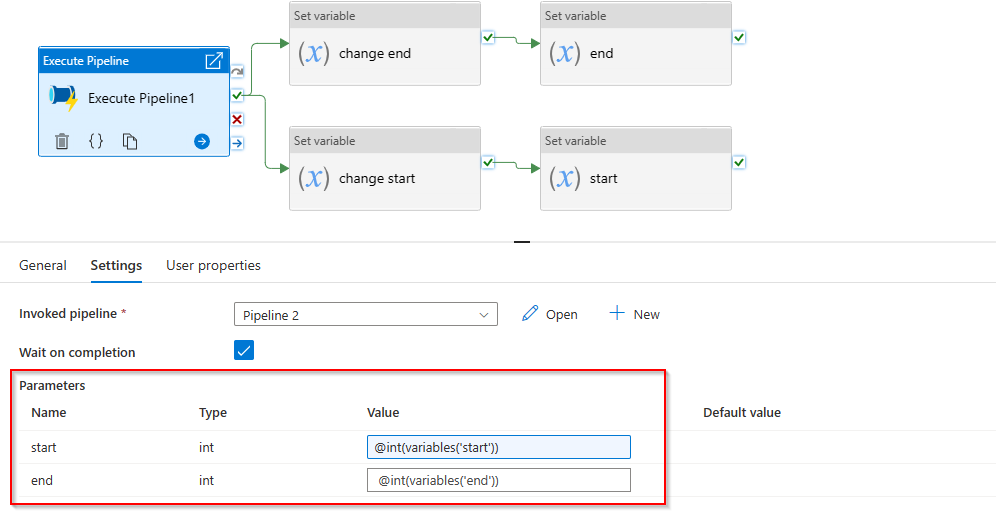

- Now, use the

execute pipelineactivity and pass the variable values to that pipeline parameters and with help of this parameters you can query the data and import it in batches.

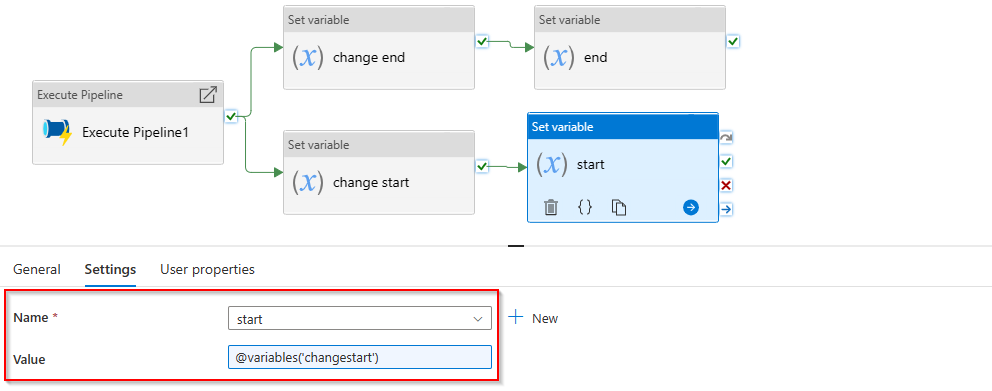

- Now we have to update the values of

startandendwhere we increment the value ofstartand and also increment the value ofend. - Since we can't self-reference a variable, we have to take a temporary reference variable and then update it.

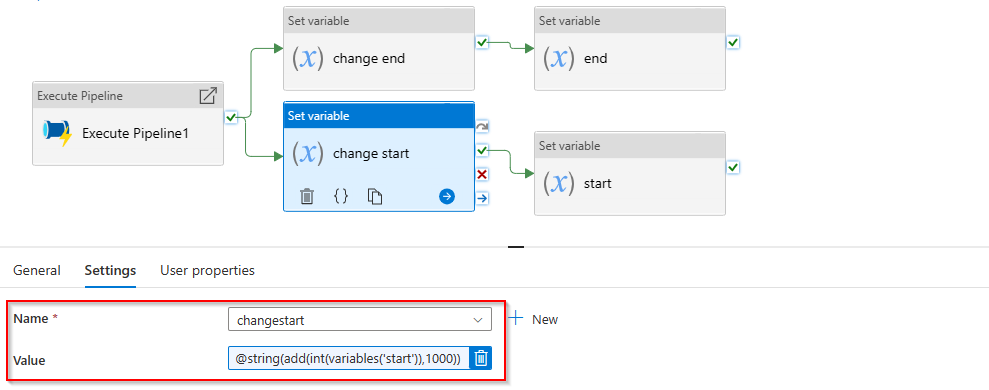

- To update the value of

start, I have taken aschangestartvariable with following value.

@string(add(int(variables('start')),1000))

- Assign the above

changestartvalue tostartvariable.

- Follow similarly for

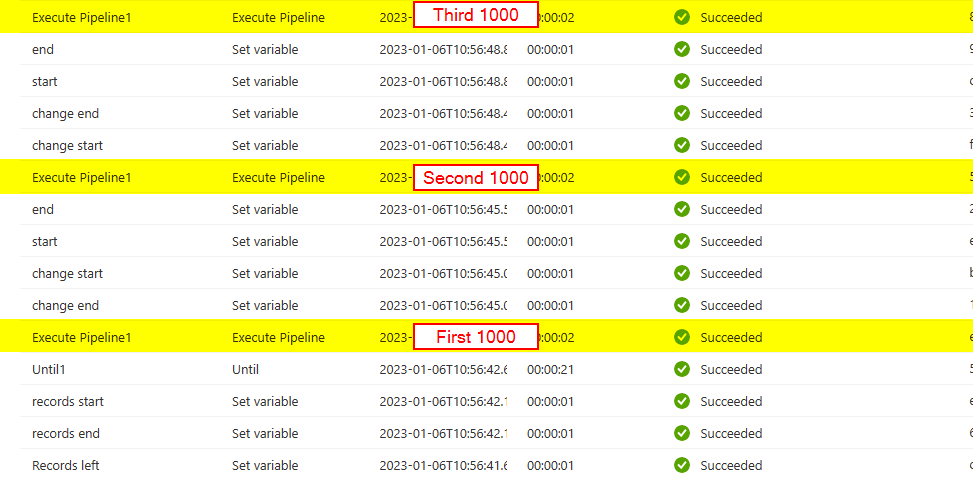

endas well. The following is a debug output when there are 5000 records.