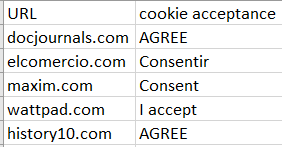

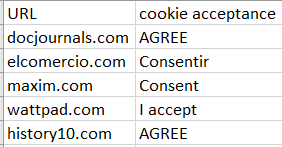

I have a list of domains that I would like to loop over and screenshot using Selenium. However, the cookie consent banner covers part of each page, so the full page is not visible. Most of the sites have different consent buttons. What is the best way to accept them? Or is there another method that could achieve the same result?

URLs for reference: docjournals.com, elcomercio.com, maxim.com, wattpad.com, history10.com

Answer:

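You'll need to click the accept button individually on every website. You can do that using:

```python
from selenium.webdriver.common.by import By

# `driver` is an existing WebDriver; replace the placeholder with the site's accept-button XPath
driver.find_element(By.XPATH, "your_XPATH_locator").click()
```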

Answer:

To get around the XPath selectors varying from page to page, you can read `driver.current_url` and use the URL to decide which selector to use.
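A minimal sketch of the `driver.current_url` approach, assuming a hand-maintained mapping from hostname to selector. `CONSENT_SELECTORS` and `selector_for` are hypothetical names, and the selectors are placeholders you would replace with each site's real consent-button XPath:

```python
from urllib.parse import urlparse

# Hypothetical placeholder selectors; replace with the real consent-button XPaths
CONSENT_SELECTORS = {
    "docjournals.com": "example_selector_1",
    "elcomercio.com": "example_selector_2",
}


def selector_for(url):
    """Return the consent selector for the site behind `url`, or None if unknown."""
    host = urlparse(url).hostname or ""
    # Treat "www.example.com" and "example.com" as the same site
    if host.startswith("www."):
        host = host[len("www."):]
    return CONSENT_SELECTORS.get(host)
```

After `driver.get(...)`, you would call `selector_for(driver.current_url)` and click the returned XPath only if it is not `None`. Reading `current_url` rather than the domain you started with means a redirect to the `www.` host is still matched.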

Alternatively, since you iterate over the pages anyway, you can store each URL together with its selector:

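```python
from selenium import webdriver
from selenium.webdriver.common.by import By

driver = webdriver.Chrome()

# Each page pairs a URL (driver.get() needs the scheme) with its consent-button selector
page_1 = {
    'url': 'https://docjournals.com',
    'selector': 'example_selector_1',
}

page_2 = {
    'url': 'https://elcomercio.com',
    'selector': 'example_selector_2',
}

pages = [page_1, page_2]

for page in pages:
    driver.get(page['url'])
    # Dicts are indexed with ['key'], not attribute access (page.url)
    driver.find_element(By.XPATH, page['selector']).click()
```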

Answer:

As you can see from the screenshots, different URLs use different consent buttons. They may vary with respect to:

- innerText

- tag

- attributes

- implementation (iframe / shadowRoot)
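Because the innerText and tag vary, one partial workaround is a single XPath that matches several possible labels on several tag names. This is only a sketch: `consent_xpath` is a hypothetical helper, any labels you pass in are guesses to be replaced with the real button texts, XPath `contains()` is case-sensitive, and it does nothing for buttons inside an iframe or shadowRoot.

```python
def consent_xpath(labels, tags=("button", "a")):
    """Build one XPath matching any of `tags` whose text contains any of `labels`.

    A label containing a single quote is wrapped in double quotes instead; a label
    containing both quote characters is rejected to keep the sketch simple.
    """
    if not labels:
        raise ValueError("at least one label is required")
    conditions = []
    for label in labels:
        if "'" not in label:
            literal = f"'{label}'"
        elif '"' not in label:
            literal = f'"{label}"'
        else:
            raise ValueError(f"label contains both quote types: {label!r}")
        # normalize-space(.) collapses whitespace in the element's text content
        conditions.append(f"contains(normalize-space(.), {literal})")
    tag_test = " or ".join(f"self::{tag}" for tag in tags)
    text_test = " or ".join(conditions)
    return f"//*[{tag_test}][{text_test}]"
```

You would then try `driver.find_element(By.XPATH, consent_xpath(["Accept", "Aceptar"]))`, where both labels are illustrative guesses.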

Conclusion

There can't be a generic solution for accepting or denying the cookie consent because, at times:

- You may need to use WebDriverWait with element_to_be_clickable() before clicking the consent button.

- You may need to switch to an iframe. See: Unable to locate cookie acceptance window within iframe using Python Selenium

- You may need to traverse within a shadowRoot. See: How to get past a cookie agreement page using Python and Selenium?