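This script does English word-frequency analysis in Python (the same thing can be written in other languages). It expands common contractions, lemmatizes each word with NLTK, counts the words, and writes each word with its count and part-of-speech tag to `results.txt`. Running it produces output like the screenshot.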

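```python
import collections
import re
import sys

# Requires the NLTK data packages for the tokenizer, the POS tagger and
# WordNet (install them once with nltk.download).
import nltk
from nltk.stem.wordnet import WordNetLemmatizer
from nltk.tokenize import word_tokenize
```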

```python
# patterns used to find and/or replace particular characters or words

# to find chars that are not a letter, a blank or an apostrophe
pat_letter = re.compile(r"[^a-zA-Z ']+")
# to find the 's following pronouns; re.I means ignore case
pat_is = re.compile(r"\b(it|he|she|that|this|there|here)('s)", re.I)
# to find the 's following letters
pat_s = re.compile(r"(?<=[a-zA-Z])'s")
# to find the ' following words ending in s
pat_s2 = re.compile(r"(?<=s)'s?")
# to find the abbreviation of not
pat_not = re.compile(r"(?<=[a-zA-Z])n't")
# to find the abbreviation of would
pat_would = re.compile(r"(?<=[a-zA-Z])'d")
# to find the abbreviation of will
pat_will = re.compile(r"(?<=[a-zA-Z])'ll")
# to find the abbreviation of am
pat_am = re.compile(r"(?<=[Ii])'m")
# to find the abbreviation of are
pat_are = re.compile(r"(?<=[a-zA-Z])'re")
# to find the abbreviation of have
pat_ve = re.compile(r"(?<=[a-zA-Z])'ve")
```
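The contraction patterns rely on lookbehind assertions such as `(?<=[a-zA-Z])`. A lookbehind checks the preceding character without consuming it, so only the apostrophe suffix is replaced and the stem survives. The standalone check below copies three of the patterns defined above and runs them on a few sample words. It also shows a side effect of rewriting only the `n't` suffix: `can't` becomes `ca not`.

```python
import re

# Copies of three patterns from the script.
pat_is = re.compile(r"\b(it|he|she|that|this|there|here)('s)", re.I)
pat_not = re.compile(r"(?<=[a-zA-Z])n't")
pat_ve = re.compile(r"(?<=[a-zA-Z])'ve")

def expand(text):
    # Apply the substitutions in the same relative order the script uses.
    text = pat_is.sub(r"\1 is", text)
    text = pat_not.sub(" not", text)
    text = pat_ve.sub(" have", text)
    return text

print(expand("it's"))   # it is
print(expand("don't"))  # do not
print(expand("can't"))  # ca not  (only the n't suffix is rewritten)
print(expand("we've"))  # we have
```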

```python
lmtzr = WordNetLemmatizer()

def get_words(file):
    """Count the lemmatized words of a text file."""
    words_box = []
    with open(file, encoding="utf-8") as f:
        for line in f:
            # earlier attempts, kept for reference:
            # if re.match(r'[a-zA-Z]*', line):
            #     words_box.extend(line.strip().strip('\'".,').lower().split())
            # words_box.extend(pat_letter.sub(' ', line).strip().lower().split())
            words_box.extend(merge(replace_abbreviations(line).split()))
    return collections.Counter(words_box)
```
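`get_words` returns a `collections.Counter`, which maps each word to the number of times it was seen. Missing words count as zero, and `most_common()` (used in the main block) returns `(word, count)` pairs sorted by descending count. The example below uses a made-up token list in place of the lemmatized words of a book. It also shows that `Counter.update()` can count line by line instead of building one big list first.

```python
from collections import Counter

# Made-up token list standing in for the output of merge(...)
tokens = "the cat sit on the mat and the dog sit too".split()

counts = Counter(tokens)
print(counts["the"])          # 3
print(counts["unicorn"])      # 0: missing keys count as zero
print(counts.most_common(2))  # [('the', 3), ('sit', 2)]

# Incremental counting, one line at a time
running = Counter()
for line in ["the cat sit", "the dog sit"]:
    running.update(line.split())
print(running["sit"])         # 2
```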

```python
def merge(words):
    """Lemmatize each word, using the WordNet POS derived from its Treebank tag."""
    new_words = []
    for word in words:
        if word:
            tag = nltk.pos_tag(word_tokenize(word))  # tag is like [('bigger', 'JJR')]
            pos = get_wordnet_pos(tag[0][1])
            if pos:
                lemmatized_word = lmtzr.lemmatize(word, pos)
                new_words.append(lemmatized_word)
            else:
                new_words.append(word)
    return new_words
```
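`merge` runs the POS tagger and the lemmatizer once per token, so a book of a million tokens means a million tagger calls, even though most tokens repeat. Because each word is tagged on its own, without sentence context, the same word always gets the same result. The per-word work could therefore be memoized with `functools.lru_cache` without changing the output. This optimization is not part of the original script. The function `lemmatize_word` below is a hypothetical stand-in (a trivial suffix stripper, not NLTK) so the sketch runs on its own. In the real script, its body would be the `pos_tag`, `get_wordnet_pos` and `lemmatize` steps from `merge`.

```python
from functools import lru_cache

calls = 0  # counts how often the uncached body actually runs

@lru_cache(maxsize=None)
def lemmatize_word(word):
    # Hypothetical stand-in for the tag + lemmatize step in merge().
    global calls
    calls += 1
    return word[:-1] if word.endswith("s") else word

def merge_cached(words):
    # Same shape as merge(): skip empty strings, map the rest.
    return [lemmatize_word(w) for w in words if w]

result = merge_cached(["cats", "dogs", "cats", "cats", ""])
print(result)  # ['cat', 'dog', 'cat', 'cat']
print(calls)   # 2: each distinct word was processed once
```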

```python
def get_wordnet_pos(treebank_tag):
    """Map a Penn Treebank tag to a WordNet POS, or '' if there is none."""
    if treebank_tag.startswith('J'):
        return nltk.corpus.wordnet.ADJ
    elif treebank_tag.startswith('V'):
        return nltk.corpus.wordnet.VERB
    elif treebank_tag.startswith('N'):
        return nltk.corpus.wordnet.NOUN
    elif treebank_tag.startswith('R'):
        return nltk.corpus.wordnet.ADV
    else:
        return ''
```
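The `elif` chain maps the first letter of a Penn Treebank tag to a WordNet part of speech. NLTK's WordNet constants are single letters (`ADJ = 'a'`, `VERB = 'v'`, `NOUN = 'n'`, `ADV = 'r'`), so the same mapping can also be written as a dictionary lookup. The sketch below hard-codes those letters to stay NLTK-free; in the script you would use the `nltk.corpus.wordnet` constants instead.

```python
# Treebank tag prefix -> WordNet POS letter (the values of nltk's
# wordnet.ADJ, VERB, NOUN and ADV constants).
TREEBANK_TO_WORDNET = {"J": "a", "V": "v", "N": "n", "R": "r"}

def get_wordnet_pos(treebank_tag):
    # '' for tags with no WordNet equivalent (DT, IN, PRP, ...) and for
    # the empty string, matching the original function's fallback.
    return TREEBANK_TO_WORDNET.get(treebank_tag[:1], "")

for tag in ["JJR", "VBD", "NNS", "RB", "DT"]:
    print(tag, "->", repr(get_wordnet_pos(tag)))
```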

```python
def replace_abbreviations(text):
    """Strip non-letters, lower-case, and expand common contractions."""
    new_text = pat_letter.sub(' ', text).strip().lower()
    new_text = pat_is.sub(r"\1 is", new_text)
    new_text = pat_s.sub("", new_text)
    new_text = pat_s2.sub("", new_text)
    new_text = pat_not.sub(" not", new_text)
    new_text = pat_would.sub(" would", new_text)
    new_text = pat_will.sub(" will", new_text)
    new_text = pat_am.sub(" am", new_text)
    new_text = pat_are.sub(" are", new_text)
    new_text = pat_ve.sub(" have", new_text)
    # any remaining apostrophe (e.g. a quotation mark) becomes a space
    new_text = new_text.replace("'", " ")
    return new_text
```
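`replace_abbreviations` applies a fixed chain of substitutions, and their order matters. The pronoun rule must run before the generic `'s` rule, or `it's` would lose its `'s` and become just `it`. One alternative layout, suggested here as a restructuring rather than the author's code, keeps the patterns and replacements in a single ordered table, so adding a contraction means adding one row. The patterns mirror those defined above.

```python
import re

PAT_LETTER = re.compile(r"[^a-zA-Z ']+")

# (pattern, replacement) pairs, applied top to bottom after non-letters
# are stripped and the text is lower-cased.
SUBSTITUTIONS = [
    (re.compile(r"\b(it|he|she|that|this|there|here)('s)", re.I), r"\1 is"),
    (re.compile(r"(?<=[a-zA-Z])'s"), ""),
    (re.compile(r"(?<=s)'s?"), ""),
    (re.compile(r"(?<=[a-zA-Z])n't"), " not"),
    (re.compile(r"(?<=[a-zA-Z])'d"), " would"),
    (re.compile(r"(?<=[a-zA-Z])'ll"), " will"),
    (re.compile(r"(?<=[Ii])'m"), " am"),
    (re.compile(r"(?<=[a-zA-Z])'re"), " are"),
    (re.compile(r"(?<=[a-zA-Z])'ve"), " have"),
]

def replace_abbreviations(text):
    new_text = PAT_LETTER.sub(" ", text).strip().lower()
    for pattern, replacement in SUBSTITUTIONS:
        new_text = pattern.sub(replacement, new_text)
    # Any apostrophe left over becomes a space.
    return new_text.replace("'", " ")

sample = "It's James' book, and we'll read it; I'm sure they don't mind."
print(replace_abbreviations(sample).split())
```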

```python
def append_ext(words):
    """Turn (word, count) pairs into (word, count, tag) triples."""
    new_words = []
    for item in words:
        word, count = item
        tag = nltk.pos_tag(word_tokenize(word))[0][1]  # pos_tag returns e.g. [('bigger', 'JJR')]
        new_words.append((word, count, tag))
    return new_words
```

```python
def write_to_file(words, file='results.txt'):
    """Write one comma-separated line per (word, count, tag) item."""
    with open(file, 'w', encoding="utf-8") as f:
        for item in words:
            f.write(",".join(str(field) for field in item) + "\n")
    print("results written to " + file)
```
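After `replace_abbreviations`, every word contains only letters, so the hand-rolled comma join works for this script. If the fields could ever contain commas or quotes, the standard `csv` module would handle the quoting. The sketch below writes the same kind of `(word, count, tag)` rows with `csv.writer`. It writes into an in-memory `io.StringIO` buffer so it runs without touching the disk. The sample rows and the header row are illustrative additions.

```python
import csv
import io

def write_rows(rows, out):
    # out is any text file object; open real files with newline="".
    writer = csv.writer(out)
    writer.writerow(["word", "count", "tag"])  # optional header row
    writer.writerows(rows)

buffer = io.StringIO()
write_rows([("the", 3, "DT"), ("sit", 2, "VB")], buffer)
print(buffer.getvalue())
```

For a real file, open it as `open("results.txt", "w", newline="")` and pass the file object in place of the buffer, as the `csv` documentation recommends.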

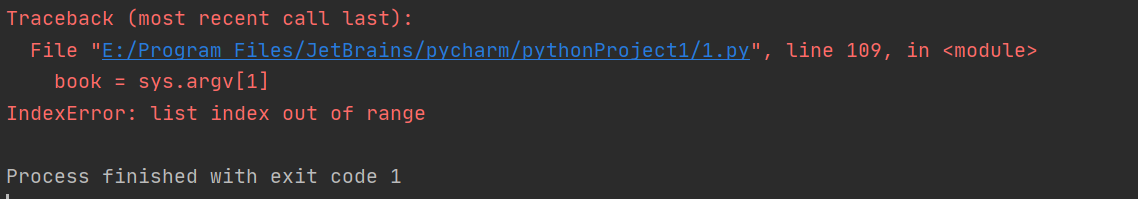

```python
if __name__ == "__main__":
    # usage: python <this script> book.txt
    book = sys.argv[1]
    print("counting...")
    words = get_words(book)
    print("writing the file...")
    write_to_file(append_ext(words.most_common()))
```