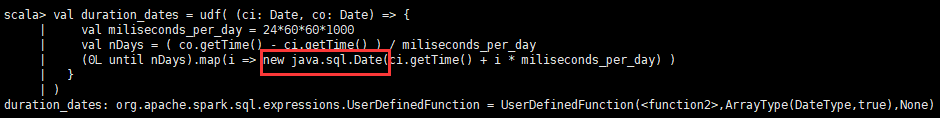

    val duration_dates = udf((ci: Date, co: Date) => {
      val miliseconds_per_day = 24 * 60 * 60 * 1000
      val nDays = (co.getTime() - ci.getTime()) / miliseconds_per_day
      (0 until nDays).map(i => new Date(ci.getTime() + i * miliseconds_per_day))
    })

I am a beginner with Spark and Scala programming and have run into a problem. I have a prototype UDF (above), and it fails with the following error when I run it:

App> Exception in thread "main" java.lang.UnsupportedOperationException: Schema for type java.util.Date is not supported

Could someone please help me find where the problem is? Thank you.

CodePudding user response:

Hello. I tried your code in spark-shell and got the same error. I then checked the official documentation and found a solution, as follows.

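Solution: use java.sql.Date (or java.sql.Timestamp) instead of java.util.Date in the UDF.

Reason: Spark has no schema mapping for java.util.Date, as the list of supported types below shows.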

Spark SQL and DataFrames support the following data types:

Datetime type

TimestampType: Represents values comprising values of fields year, month, day, hour, minute, and second.

DateType: Represents values comprising values of fields year, month, day.

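| Data type | Value type in Scala | API to access or create a data type |
| --- | --- | --- |
| TimestampType | java.sql.Timestamp | TimestampType |
| DateType | java.sql.Date | DateType |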
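
Based on the type mapping above, the UDF from the question could be rewritten roughly as follows. This is only a sketch: the names `DurationDates`, `datesBetween`, and `durationDates` are made up for illustration, and it counts days with `java.time.LocalDate` rather than the millisecond arithmetic in the question, so that daylight-saving changes do not shift the result.

```scala
import java.sql.Date
import java.time.temporal.ChronoUnit
import org.apache.spark.sql.functions.udf

object DurationDates {
  // Every date from ci (inclusive) up to co (exclusive).
  // java.sql.Date maps to Spark's DateType, so Spark can derive a schema for it.
  def datesBetween(ci: Date, co: Date): Seq[Date] = {
    val start = ci.toLocalDate
    val nDays = ChronoUnit.DAYS.between(start, co.toLocalDate)
    // The range is empty when co is not after ci.
    (0L until nDays).map(i => Date.valueOf(start.plusDays(i)))
  }

  // Wrap the plain function as a Spark UDF; the result column is an array of dates.
  val durationDates = udf(datesBetween _)
}
```

It would then be applied to two date columns, for example `df.withColumn("dates", DurationDates.durationDates(col("ci"), col("co")))`, where `df`, `ci`, and `co` are assumed names and `col` comes from `org.apache.spark.sql.functions`.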

CodePudding user response:

In addition, if you want to use a timestamp instead, use java.sql.Timestamp.
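
If the existing code already holds java.util.Date values, one possible approach is to convert them before they reach Spark. The helper below is a small sketch; `UtilDateConversions`, `toSqlTimestamp`, and `toSqlDate` are hypothetical names, not part of Spark.

```scala
import java.sql.Timestamp
import java.util.{Date => UtilDate}

object UtilDateConversions {
  // Both java.sql types can be built from epoch milliseconds,
  // which java.util.Date exposes through getTime.
  def toSqlTimestamp(d: UtilDate): Timestamp = new Timestamp(d.getTime)

  // Carries the same instant; Spark's DateType keeps only year, month, and day.
  def toSqlDate(d: UtilDate): java.sql.Date = new java.sql.Date(d.getTime)
}
```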